Introduction

Hello! This is Devlos.

This post summarizes week 5 of the Cilium Study hosted by the CloudNet@ community, whose topic is "BGP Control Plane & Cluster Mesh".

Lab environment overview

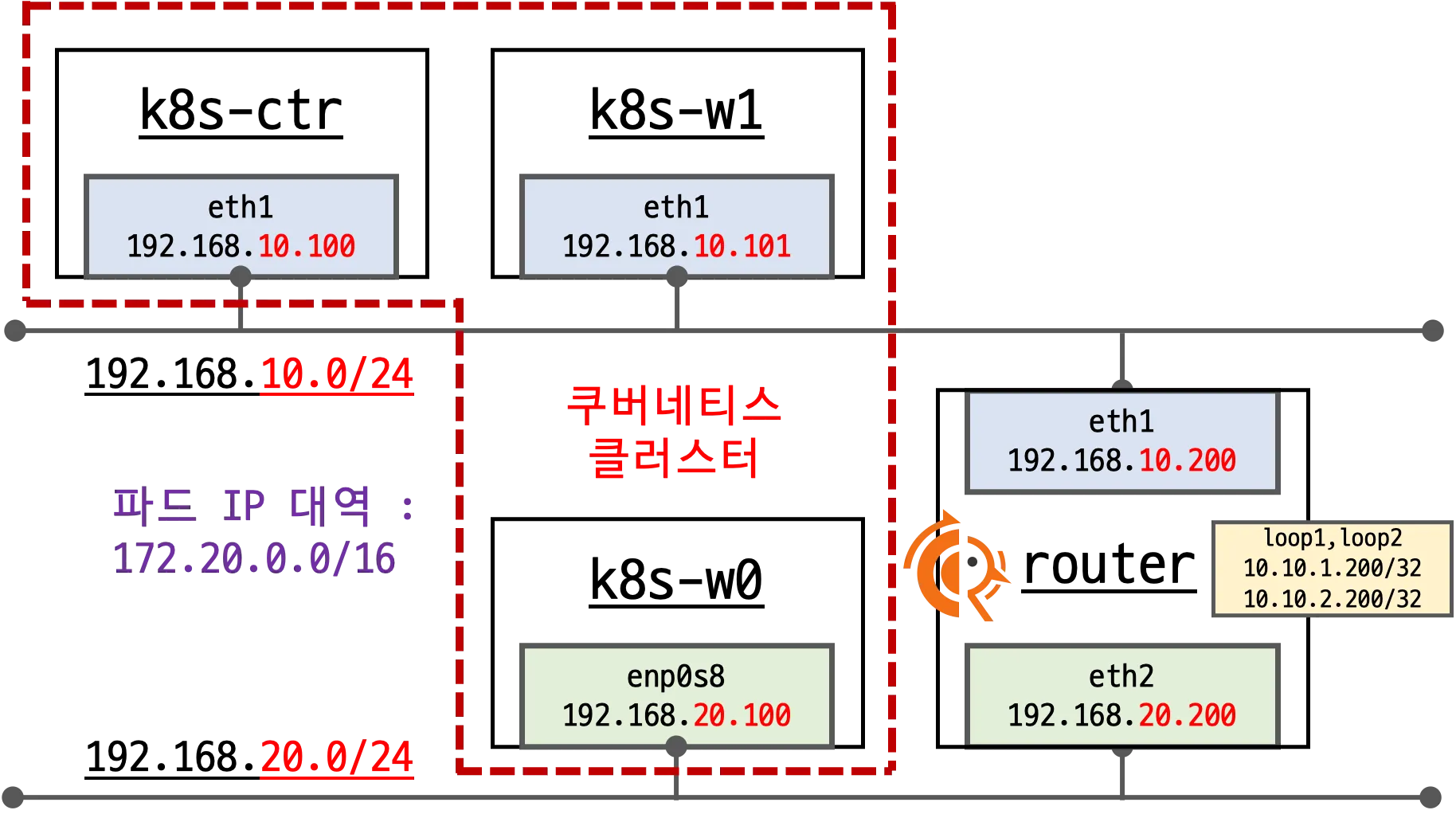

This week's lab ran on a Mac (M3 Pro Max), with the environment built on VirtualBox + Vagrant.

- Control Plane (k8s-ctr): Kubernetes control plane node, running the API server and the control plane components

- Worker Node (k8s-w0): worker node that runs the actual workloads

- Worker Node (k8s-w1): worker node that runs the actual workloads

- Router (router): routes between 192.168.10.0/24 ↔ 192.168.20.0/24; a server that is not joined to the k8s cluster and has FRR installed for BGP

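What is BGP?

BGP (Border Gateway Protocol) is the core routing protocol of the Internet: a standard protocol for exchanging network route information between different autonomous systems (AS).

It is widely used to connect cloud environments with on-premises networks and to share routing information between data centers.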

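What is FRR?

Source - https://docs.frrouting.org/en/stable-10.4/about.htm

FRR (FRRouting, originally short for Free Range Routing) is an open-source routing software suite that supports many routing protocols, including BGP, OSPF, and IS-IS.

It was forked from the Quagga project and lets network operators implement advanced routing features without commercial routers.

Because FRR implements BGP in software, BGP routing is available without dedicated network equipment.

In this lab, FRR is installed on the server that acts as the router. It establishes BGP sessions and exchanges routing information between the Kubernetes cluster and the external network.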

Installing the lab environment

The lab uses the environment provided by Gasida.

mkdir cilium-lab && cd cilium-lab

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/Vagrantfile

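vagrant up

The main parts of the lab environment are as follows.

Vagrantfile : VM definitions and initial provisioning at boot

The Vagrantfile defines the lab's virtual machines and provisions them automatically.

It creates four virtual machines in total (the control plane, two worker nodes, and the router) and runs the network settings and init scripts that match each role.

It is based on VirtualBox and uses an Ubuntu 24.04 base image to keep the environment consistent.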
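As a rough illustration of the addressing convention in the Vagrantfile below (worker `i` gets the private IP `192.168.10.10i`, with SSH forwarded from host port `6000i`), the mapping can be printed with a few lines of shell. The function name `print_worker_plan` is hypothetical; it only mirrors the string interpolation in the Vagrantfile and does not talk to Vagrant.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints name, private IP and forwarded SSH port
# for workers 1..N, mirroring "192.168.10.10#{i}" and "6000#{i}".
print_worker_plan() {
  n=$1
  i=1
  while [ "$i" -le "$n" ]; do
    printf 'k8s-w%d 192.168.10.10%d 6000%d\n' "$i" "$i" "$i"
    i=$((i + 1))
  done
}

# N = 1 in this lab, so a single worker line is printed.
print_worker_plan 1
```

Because the index is concatenated rather than added, this convention only yields valid addresses while the worker index stays a single digit.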

# Variables
K8SV = '1.33.2-1.1' # Kubernetes Version : apt list -a kubelet , ex) 1.32.5-1.1
CONTAINERDV = '1.7.27-1' # Containerd Version : apt list -a containerd.io , ex) 1.6.33-1
CILIUMV = '1.18.0' # Cilium CNI Version : https://github.com/cilium/cilium/tags
N = 1 # max number of worker nodes

# Base Image https://portal.cloud.hashicorp.com/vagrant/discover/bento/ubuntu-24.04
BOX_IMAGE = "bento/ubuntu-24.04"
BOX_VERSION = "202508.03.0"

Vagrant.configure("2") do |config| # start of the Vagrant configuration
  #-ControlPlane Node
  config.vm.define "k8s-ctr" do |subconfig| # define the control plane node
    subconfig.vm.box = BOX_IMAGE # base image
    subconfig.vm.box_version = BOX_VERSION # image version
    subconfig.vm.provider "virtualbox" do |vb| # VirtualBox provider settings
      vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"] # VirtualBox group
      vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"] # promiscuous mode on the second NIC
      vb.name = "k8s-ctr" # VM name
      vb.cpus = 2 # number of CPU cores
      vb.memory = 2560 # memory size (MB)
      vb.linked_clone = true # linked clone for faster creation
    end
    subconfig.vm.host_name = "k8s-ctr" # hostname
    subconfig.vm.network "private_network", ip: "192.168.10.100" # private network IP
    subconfig.vm.network "forwarded_port", guest: 22, host: 60000, auto_correct: true, id: "ssh" # SSH port forwarding
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true # disable folder sync
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/init_cfg.sh", args: [ K8SV, CONTAINERDV ] # run the initial setup script
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/k8s-ctr.sh", args: [ N, CILIUMV, K8SV ] # run the control plane setup script
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/route-add1.sh" # run the route setup script
  end

  #-Worker Nodes Subnet1
  (1..N).each do |i| # repeat for each worker node
    config.vm.define "k8s-w#{i}" do |subconfig| # define the worker node
      subconfig.vm.box = BOX_IMAGE # base image
      subconfig.vm.box_version = BOX_VERSION # image version
      subconfig.vm.provider "virtualbox" do |vb| # VirtualBox provider settings
        vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"] # VirtualBox group
        vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"] # promiscuous mode on the second NIC
        vb.name = "k8s-w#{i}" # VM name
        vb.cpus = 2 # number of CPU cores
        vb.memory = 1536 # memory size (MB)
        vb.linked_clone = true # use a linked clone
      end
      subconfig.vm.host_name = "k8s-w#{i}" # hostname
      subconfig.vm.network "private_network", ip: "192.168.10.10#{i}" # private network IP (derived from the worker index)
      subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh" # SSH port forwarding
      subconfig.vm.synced_folder "./", "/vagrant", disabled: true # disable folder sync
      subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/init_cfg.sh", args: [ K8SV, CONTAINERDV] # run the initial setup script
      subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/k8s-w.sh" # run the worker node setup script
      subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/route-add1.sh" # run the route setup script
    end
  end

  #-Router Node
  config.vm.define "router" do |subconfig| # define the router node
    subconfig.vm.box = BOX_IMAGE # base image
    subconfig.vm.box_version = BOX_VERSION # image version
    subconfig.vm.provider "virtualbox" do |vb| # VirtualBox provider settings
      vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"] # VirtualBox group
      vb.name = "router" # VM name
      vb.cpus = 1 # number of CPU cores (one for the router)
      vb.memory = 768 # memory size (MB)
      vb.linked_clone = true # use a linked clone
    end
    subconfig.vm.host_name = "router" # hostname
    subconfig.vm.network "private_network", ip: "192.168.10.200" # IP of the first network interface
    subconfig.vm.network "forwarded_port", guest: 22, host: 60009, auto_correct: true, id: "ssh" # SSH port forwarding
    subconfig.vm.network "private_network", ip: "192.168.20.200", auto_config: false # IP of the second network interface (configured manually)
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true # disable folder sync
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/router.sh" # run the router setup script
  end

  #-Worker Nodes Subnet2
  config.vm.define "k8s-w0" do |subconfig| # define the subnet 2 worker node
    subconfig.vm.box = BOX_IMAGE # base image
    subconfig.vm.box_version = BOX_VERSION # image version
    subconfig.vm.provider "virtualbox" do |vb| # VirtualBox provider settings
      vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"] # VirtualBox group
      vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"] # promiscuous mode on the second NIC
      vb.name = "k8s-w0" # VM name
      vb.cpus = 2 # number of CPU cores
      vb.memory = 1536 # memory size (MB)
      vb.linked_clone = true # use a linked clone
    end
    subconfig.vm.host_name = "k8s-w0" # hostname
    subconfig.vm.network "private_network", ip: "192.168.20.100" # subnet 2 network IP
    subconfig.vm.network "forwarded_port", guest: 22, host: 60010, auto_correct: true, id: "ssh" # SSH port forwarding
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true # disable folder sync
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/init_cfg.sh", args: [ K8SV, CONTAINERDV] # run the initial setup script
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/k8s-w.sh" # run the worker node setup script
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/route-add2.sh" # run the subnet 2 route setup script
  end

end

init_cfg.sh : installs packages according to the passed args
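The provisioning scripts receive the full package version (for example `1.33.2-1.1`) and trim it with `sed` before use: init_cfg.sh keeps `major.minor` for the pkgs.k8s.io repository URL, and k8s-ctr.sh keeps `major.minor.patch` for the kubeadm config. The sketch below wraps those two `sed` expressions in functions so they can be tried in isolation; the function names `major_minor`, `major_minor_patch`, and `repo_url` are hypothetical and not part of the original scripts.

```shell
#!/usr/bin/env bash
# "1.33.2-1.1" -> "1.33" (same sed expression as init_cfg.sh)
major_minor() {
  echo "$1" | sed -En 's/^([0-9]+\.[0-9]+)\..*/\1/p'
}

# "1.33.2-1.1" -> "1.33.2" (same sed expression as k8s-ctr.sh)
major_minor_patch() {
  echo "$1" | sed -En 's/^([0-9]+\.[0-9]+\.[0-9]+).*/\1/p'
}

# Hypothetical wrapper around the repository URL pattern used in init_cfg.sh.
repo_url() {
  echo "https://pkgs.k8s.io/core:/stable:/v$(major_minor "$1")/deb/"
}

major_minor "1.33.2-1.1"
major_minor_patch "1.33.2-1.1"
repo_url "1.33.2-1.1"
```

Since `sed -n` with the `p` flag prints only on a match, an input without a patch component (such as `1.33`) produces an empty string, which would silently yield a malformed repository URL. Passing the full apt version string, as the Vagrantfile does, avoids that.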

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Setting Profile & Bashrc"

echo 'alias vi=vim' >> /etc/profile

echo "sudo su -" >> /home/vagrant/.bashrc

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime # Change Timezone

echo "[TASK 2] Disable AppArmor"

systemctl stop ufw && systemctl disable ufw >/dev/null 2>&1

systemctl stop apparmor && systemctl disable apparmor >/dev/null 2>&1

echo "[TASK 3] Disable and turn off SWAP"

swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

echo "[TASK 4] Install Packages"

apt update -qq >/dev/null 2>&1

apt-get install apt-transport-https ca-certificates curl gpg -y -qq >/dev/null 2>&1

# Download the public signing key for the Kubernetes package repositories.

mkdir -p -m 755 /etc/apt/keyrings

K8SMMV=$(echo $1 | sed -En 's/^([0-9]+\.[0-9]+)\..*/\1/p')

curl -fsSL https://pkgs.k8s.io/core:/stable:/v$K8SMMV/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v$K8SMMV/deb/ /" >> /etc/apt/sources.list.d/kubernetes.list

curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# packets traversing the bridge are processed by iptables for filtering

echo 1 > /proc/sys/net/ipv4/ip_forward

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.d/k8s.conf

# enable br_netfilter for iptables

modprobe br_netfilter

modprobe overlay

echo "br_netfilter" >> /etc/modules-load.d/k8s.conf

echo "overlay" >> /etc/modules-load.d/k8s.conf

echo "[TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)"

# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version

apt update >/dev/null 2>&1 # refresh the package index
# apt list -a kubelet ; apt list -a containerd.io
apt-get install -y kubelet=$1 kubectl=$1 kubeadm=$1 containerd.io=$2 >/dev/null 2>&1 # install the Kubernetes components
apt-mark hold kubelet kubeadm kubectl >/dev/null 2>&1 # pin the package versions

# containerd configure to default and cgroup managed by systemd
containerd config default > /etc/containerd/config.toml # generate the default containerd config
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml # use the systemd cgroup driver

# avoid WARN&ERRO(default endpoints) when crictl run
cat <<EOF > /etc/crictl.yaml # create the crictl config file
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
EOF

# ready to install for k8s
systemctl restart containerd && systemctl enable containerd # restart and enable the containerd service
systemctl enable --now kubelet # enable the kubelet service and start it immediately

echo "[TASK 6] Install Packages & Helm"

export DEBIAN_FRONTEND=noninteractive

apt-get install -y bridge-utils sshpass net-tools conntrack ngrep tcpdump ipset arping wireguard jq yq tree bash-completion unzip kubecolor termshark >/dev/null 2>&1

curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash >/dev/null 2>&1

echo ">>>> Initial Config End <<<<"

kubeadm-init-ctr-config.yaml

#!/usr/bin/env bash

echo ">>>> K8S Controlplane config Start <<<<"

echo "[TASK 1] Initial Kubernetes"

curl --silent -o /root/kubeadm-init-ctr-config.yaml https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/kubeadm-init-ctr-config.yaml

K8SMMV=$(echo $3 | sed -En 's/^([0-9]+\.[0-9]+\.[0-9]+).*/\1/p')

sed -i "s/K8S_VERSION_PLACEHOLDER/v${K8SMMV}/g" /root/kubeadm-init-ctr-config.yaml

kubeadm init --config="/root/kubeadm-init-ctr-config.yaml" >/dev/null 2>&1

echo "[TASK 2] Setting kube config file"

mkdir -p /root/.kube

cp -i /etc/kubernetes/admin.conf /root/.kube/config

chown $(id -u):$(id -g) /root/.kube/config

echo "[TASK 3] Source the completion"

echo 'source <(kubectl completion bash)' >> /etc/profile

echo 'source <(kubeadm completion bash)' >> /etc/profile

echo "[TASK 4] Alias kubectl to k"

echo 'alias k=kubectl' >> /etc/profile

echo 'alias kc=kubecolor' >> /etc/profile

echo 'complete -F __start_kubectl k' >> /etc/profile

echo "[TASK 5] Install Kubectx & Kubens"

git clone https://github.com/ahmetb/kubectx /opt/kubectx >/dev/null 2>&1

ln -s /opt/kubectx/kubens /usr/local/bin/kubens

ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

echo "[TASK 6] Install Kubeps & Setting PS1"

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1 >/dev/null 2>&1

cat <<"EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=true

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab" >/dev/null 2>&1

echo "[TASK 7] Install Cilium CNI"

NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

helm repo add cilium https://helm.cilium.io/ >/dev/null 2>&1

helm repo update >/dev/null 2>&1

helm install cilium cilium/cilium --version $2 --namespace kube-system \

--set k8sServiceHost=192.168.10.100 --set k8sServicePort=6443 \

--set ipam.mode="cluster-pool" --set ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} --set ipv4NativeRoutingCIDR=172.20.0.0/16 \

--set routingMode=native --set autoDirectNodeRoutes=false --set bgpControlPlane.enabled=true \

--set kubeProxyReplacement=true --set bpf.masquerade=true --set installNoConntrackIptablesRules=true \

--set endpointHealthChecking.enabled=false --set healthChecking=false \

--set hubble.enabled=true --set hubble.relay.enabled=true --set hubble.ui.enabled=true \

--set hubble.ui.service.type=NodePort --set hubble.ui.service.nodePort=30003 \

--set prometheus.enabled=true --set operator.prometheus.enabled=true --set hubble.metrics.enableOpenMetrics=true \

--set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}" \

--set operator.replicas=1 --set debug.enabled=true >/dev/null 2>&1

echo "[TASK 8] Install Cilium / Hubble CLI"

CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz >/dev/null 2>&1

tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

rm cilium-linux-${CLI_ARCH}.tar.gz

HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)

HUBBLE_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then HUBBLE_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-${HUBBLE_ARCH}.tar.gz >/dev/null 2>&1

tar xzvfC hubble-linux-${HUBBLE_ARCH}.tar.gz /usr/local/bin

rm hubble-linux-${HUBBLE_ARCH}.tar.gz

echo "[TASK 9] Remove node taint"

kubectl taint nodes k8s-ctr node-role.kubernetes.io/control-plane-

echo "[TASK 10] local DNS with hosts file"

echo "192.168.10.100 k8s-ctr" >> /etc/hosts

echo "192.168.10.200 router" >> /etc/hosts

echo "192.168.20.100 k8s-w0" >> /etc/hosts

for (( i=1; i<=$1; i++ )); do echo "192.168.10.10$i k8s-w$i" >> /etc/hosts; done

echo "[TASK 11] Dynamically provisioning persistent local storage with Kubernetes"

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.31/deploy/local-path-storage.yaml >/dev/null 2>&1

kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}' >/dev/null 2>&1

# echo "[TASK 12] Install Prometheus & Grafana"

# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.18.0/examples/kubernetes/addons/prometheus/monitoring-example.yaml >/dev/null 2>&1

# kubectl patch svc -n cilium-monitoring prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}' >/dev/null 2>&1

# kubectl patch svc -n cilium-monitoring grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}' >/dev/null 2>&1

# echo "[TASK 12] Install Prometheus Stack"

# helm repo add prometheus-community https://prometheus-community.github.io/helm-charts >/dev/null 2>&1

# cat <<EOT > monitor-values.yaml

# prometheus:

# prometheusSpec:

# scrapeInterval: "15s"

# evaluationInterval: "15s"

# service:

# type: NodePort

# nodePort: 30001

# grafana:

# defaultDashboardsTimezone: Asia/Seoul

# adminPassword: prom-operator

# service:

# type: NodePort

# nodePort: 30002

# alertmanager:

# enabled: false

# defaultRules:

# create: false

# prometheus-windows-exporter:

# prometheus:

# monitor:

# enabled: false

# EOT

# helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 75.15.1 \

# -f monitor-values.yaml --create-namespace --namespace monitoring >/dev/null 2>&1

echo "[TASK 13] Install Metrics-server"

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/ >/dev/null 2>&1

helm upgrade --install metrics-server metrics-server/metrics-server --set 'args[0]=--kubelet-insecure-tls' -n kube-system >/dev/null 2>&1

echo "[TASK 14] Install k9s"

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

wget https://github.com/derailed/k9s/releases/latest/download/k9s_linux_${CLI_ARCH}.deb -O /tmp/k9s_linux_${CLI_ARCH}.deb >/dev/null 2>&1

apt install /tmp/k9s_linux_${CLI_ARCH}.deb >/dev/null 2>&1

echo ">>>> K8S Controlplane Config End <<<<"

k8s-ctr.sh : kubeadm init, Cilium CNI installation, convenience settings (k, kc), k9s, local-path-sc, metrics-server

#!/usr/bin/env bash

echo ">>>> K8S Controlplane config Start <<<<"

echo "[TASK 1] Initial Kubernetes"

curl --silent -o /root/kubeadm-init-ctr-config.yaml https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/kubeadm-init-ctr-config.yaml

K8SMMV=$(echo $3 | sed -En 's/^([0-9]+\.[0-9]+\.[0-9]+).*/\1/p')

sed -i "s/K8S_VERSION_PLACEHOLDER/v${K8SMMV}/g" /root/kubeadm-init-ctr-config.yaml

kubeadm init --config="/root/kubeadm-init-ctr-config.yaml" >/dev/null 2>&1

echo "[TASK 2] Setting kube config file"

mkdir -p /root/.kube

cp -i /etc/kubernetes/admin.conf /root/.kube/config

chown $(id -u):$(id -g) /root/.kube/config

echo "[TASK 3] Source the completion"

echo 'source <(kubectl completion bash)' >> /etc/profile

echo 'source <(kubeadm completion bash)' >> /etc/profile

echo "[TASK 4] Alias kubectl to k"

echo 'alias k=kubectl' >> /etc/profile

echo 'alias kc=kubecolor' >> /etc/profile

echo 'complete -F __start_kubectl k' >> /etc/profile

echo "[TASK 5] Install Kubectx & Kubens"

git clone https://github.com/ahmetb/kubectx /opt/kubectx >/dev/null 2>&1

ln -s /opt/kubectx/kubens /usr/local/bin/kubens

ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

echo "[TASK 6] Install Kubeps & Setting PS1"

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1 >/dev/null 2>&1

cat <<"EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=true

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab" >/dev/null 2>&1

echo "[TASK 7] Install Cilium CNI"

NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

helm repo add cilium https://helm.cilium.io/ >/dev/null 2>&1

helm repo update >/dev/null 2>&1

# Helm values used below:
# - k8sServiceHost / k8sServicePort: Kubernetes API server address and port
# - ipam.mode, clusterPoolIPv4PodCIDRList, ipv4NativeRoutingCIDR: cluster-pool IPAM and the Pod CIDR range
# - routingMode=native, bgpControlPlane.enabled=true: native routing mode and the BGP control plane
# - kubeProxyReplacement, bpf.masquerade, installNoConntrackIptablesRules: kube-proxy replacement, BPF masquerading, iptables rules that bypass conntrack
# - endpointHealthChecking.enabled=false, healthChecking=false: health checking disabled
# - hubble.*: Hubble observability (relay, UI exposed as NodePort 30003)
# - prometheus.*, hubble.metrics.*: Prometheus metrics, plus the Hubble metric types and label context to collect
# - operator.replicas=1, debug.enabled=true: single operator replica, debug mode
helm install cilium cilium/cilium --version $2 --namespace kube-system \
  --set k8sServiceHost=192.168.10.100 --set k8sServicePort=6443 \
  --set ipam.mode="cluster-pool" --set ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} --set ipv4NativeRoutingCIDR=172.20.0.0/16 \
  --set routingMode=native --set autoDirectNodeRoutes=false --set bgpControlPlane.enabled=true \
  --set kubeProxyReplacement=true --set bpf.masquerade=true --set installNoConntrackIptablesRules=true \
  --set endpointHealthChecking.enabled=false --set healthChecking=false \
  --set hubble.enabled=true --set hubble.relay.enabled=true --set hubble.ui.enabled=true \
  --set hubble.ui.service.type=NodePort --set hubble.ui.service.nodePort=30003 \
  --set prometheus.enabled=true --set operator.prometheus.enabled=true --set hubble.metrics.enableOpenMetrics=true \
  --set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}" \
  --set operator.replicas=1 --set debug.enabled=true >/dev/null 2>&1

echo "[TASK 8] Install Cilium / Hubble CLI"

CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz >/dev/null 2>&1

tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

rm cilium-linux-${CLI_ARCH}.tar.gz

HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)

HUBBLE_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then HUBBLE_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-${HUBBLE_ARCH}.tar.gz >/dev/null 2>&1

tar xzvfC hubble-linux-${HUBBLE_ARCH}.tar.gz /usr/local/bin

rm hubble-linux-${HUBBLE_ARCH}.tar.gz

echo "[TASK 9] Remove node taint"

kubectl taint nodes k8s-ctr node-role.kubernetes.io/control-plane-

echo "[TASK 10] local DNS with hosts file"

echo "192.168.10.100 k8s-ctr" >> /etc/hosts

echo "192.168.10.200 router" >> /etc/hosts

echo "192.168.20.100 k8s-w0" >> /etc/hosts

for (( i=1; i<=$1; i++ )); do echo "192.168.10.10$i k8s-w$i" >> /etc/hosts; done

echo "[TASK 11] Dynamically provisioning persistent local storage with Kubernetes"

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.31/deploy/local-path-storage.yaml >/dev/null 2>&1

kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}' >/dev/null 2>&1

echo "[TASK 13] Install Metrics-server"

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/ >/dev/null 2>&1

helm upgrade --install metrics-server metrics-server/metrics-server --set 'args[0]=--kubelet-insecure-tls' -n kube-system >/dev/null 2>&1

echo "[TASK 14] Install k9s"

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

wget https://github.com/derailed/k9s/releases/latest/download/k9s_linux_${CLI_ARCH}.deb -O /tmp/k9s_linux_${CLI_ARCH}.deb >/dev/null 2>&1

apt install /tmp/k9s_linux_${CLI_ARCH}.deb >/dev/null 2>&1

echo ">>>> K8S Controlplane Config End <<<<"

kubeadm-join-worker-config.yaml

apiVersion: kubeadm.k8s.io/v1beta4
kind: JoinConfiguration
discovery:
  bootstrapToken:
    token: "123456.1234567890123456"
    apiServerEndpoint: "192.168.10.100:6443"
    unsafeSkipCAVerification: true
nodeRegistration:
  criSocket: "unix:///run/containerd/containerd.sock"
  kubeletExtraArgs:
    - name: node-ip
      value: "NODE_IP_PLACEHOLDER"

k8s-w.sh : kubeadm join
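k8s-w.sh fills `NODE_IP_PLACEHOLDER` in the join config with the address of `eth1` before calling `kubeadm join`. The sketch below reproduces that substitution on in-memory sample text so it can be run without a VM. Two things here are assumptions of mine rather than part of the original script: the sample `ip -4 addr show eth1` output is illustrative (only the address matches k8s-w1 in this lab), and the `awk`-based `extract_ipv4` helper is a swapped-in alternative to the script's `grep -oP` lookbehind for systems without PCRE support in grep.

```shell
#!/usr/bin/env bash
# Illustrative sample of `ip -4 addr show eth1` output (not captured from the lab).
sample_ip_output='3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    inet 192.168.10.101/24 brd 192.168.10.255 scope global eth1
       valid_lft forever preferred_lft forever'

# Hypothetical helper: print the first IPv4 address, without the prefix length.
extract_ipv4() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1]; exit }'
}

NODEIP=$(printf '%s\n' "$sample_ip_output" | extract_ipv4)

# Same sed substitution as k8s-w.sh, applied to one template line instead of the file.
template='      value: "NODE_IP_PLACEHOLDER"'
rendered=$(printf '%s\n' "$template" | sed "s/NODE_IP_PLACEHOLDER/${NODEIP}/g")

echo "$rendered"
```

Setting `node-ip` explicitly matters in this lab because every VM also has the VirtualBox NAT interface `eth0` (10.0.2.15), which is not the address the nodes should register with.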

#!/usr/bin/env bash

echo ">>>> K8S Node config Start <<<<"

echo "[TASK 1] K8S Controlplane Join"

curl --silent -o /root/kubeadm-join-worker-config.yaml https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/2w/kubeadm-join-worker-config.yaml # download the kubeadm join config file
NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}') # extract the node IP address
sed -i "s/NODE_IP_PLACEHOLDER/${NODEIP}/g" /root/kubeadm-join-worker-config.yaml # write the node IP into the config file
kubeadm join --config="/root/kubeadm-join-worker-config.yaml" > /dev/null 2>&1 # join the cluster as a worker node

echo ">>>> K8S Node config End <<<<"

route-add1.sh : route settings so the k8s nodes can reach the other internal subnet

#!/usr/bin/env bash

echo ">>>> Route Add Config Start <<<<"

chmod 600 /etc/netplan/01-netcfg.yaml # restrict permissions on the netplan config file
chmod 600 /etc/netplan/50-vagrant.yaml # restrict permissions on the Vagrant netplan config file
cat <<EOT>> /etc/netplan/50-vagrant.yaml # append the route settings
      routes:
      - to: 192.168.20.0/24 # subnet 2 network
        via: 192.168.10.200 # router as the gateway
      # - to: 172.20.0.0/16
      #   via: 192.168.10.200
EOT
netplan apply # apply the network configuration

echo ">>>> Route Add Config End <<<<"

route-add2.sh : route settings so the k8s nodes can reach the other internal subnet

#!/usr/bin/env bash

echo ">>>> Route Add Config Start <<<<"

chmod 600 /etc/netplan/01-netcfg.yaml # restrict permissions on the netplan config file
chmod 600 /etc/netplan/50-vagrant.yaml # restrict permissions on the Vagrant netplan config file
cat <<EOT>> /etc/netplan/50-vagrant.yaml # append the route settings
      routes:
      - to: 192.168.10.0/24 # subnet 1 network
        via: 192.168.20.200 # router as the gateway
      # - to: 172.20.0.0/16
      #   via: 192.168.20.200
EOT
netplan apply # apply the network configuration

echo ">>>> Route Add Config End <<<<"

router.sh : acts as the router (FRR - BGP) and as a simple web server
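router.sh turns on the BGP daemon by flipping a single line in `/etc/frr/daemons` with `sed`. The sketch below applies the same expression to a two-line sample so the effect is visible without installing FRR. The sample content is an abbreviated assumption about what the file looks like, and `enable_bgpd` is a hypothetical wrapper name.

```shell
#!/usr/bin/env bash
# Abbreviated, assumed excerpt of /etc/frr/daemons (the real file has more entries).
sample_daemons='bgpd=no
ospfd=no'

# Same sed expression as router.sh, reading stdin instead of editing the file in place.
enable_bgpd() {
  sed "s/^bgpd=no/bgpd=yes/g"
}

updated=$(printf '%s\n' "$sample_daemons" | enable_bgpd)
printf '%s\n' "$updated"
```

The `^` anchor keeps the edit limited to the `bgpd` line, and re-running the substitution on already-updated text changes nothing, so the provisioning step is safe to repeat.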

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 0] Setting eth2"

chmod 600 /etc/netplan/01-netcfg.yaml # restrict permissions on the netplan config file
chmod 600 /etc/netplan/50-vagrant.yaml # restrict permissions on the Vagrant netplan config file
cat << EOT >> /etc/netplan/50-vagrant.yaml # configure the second network interface
    eth2:
      addresses:
      - 192.168.20.200/24 # subnet 2 network IP
EOT
netplan apply # apply the network configuration

echo "[TASK 1] Setting Profile & Bashrc"
echo 'alias vi=vim' >> /etc/profile # vi alias
echo "sudo su -" >> /home/vagrant/.bashrc # switch to root automatically on login
ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime # set the timezone

echo "[TASK 2] Disable AppArmor"
systemctl stop ufw && systemctl disable ufw >/dev/null 2>&1 # disable the firewall
systemctl stop apparmor && systemctl disable apparmor >/dev/null 2>&1 # disable AppArmor

echo "[TASK 3] Add Kernel setting - IP Forwarding"

sed -i 's/#net.ipv4.ip_forward=1/net.ipv4.ip_forward=1/g' /etc/sysctl.conf

sysctl -p >/dev/null 2>&1

echo "[TASK 4] Setting Dummy Interface"

modprobe dummy

ip link add loop1 type dummy

ip link set loop1 up

ip addr add 10.10.1.200/24 dev loop1

ip link add loop2 type dummy

ip link set loop2 up

ip addr add 10.10.2.200/24 dev loop2

echo "[TASK 5] Install Packages"

export DEBIAN_FRONTEND=noninteractive

apt update -qq >/dev/null 2>&1

apt-get install net-tools jq yq tree ngrep tcpdump arping termshark -y -qq >/dev/null 2>&1

echo "[TASK 6] Install Apache"

apt install apache2 -y >/dev/null 2>&1

echo -e "<h1>Web Server : $(hostname)</h1>" > /var/www/html/index.html

echo "[TASK 7] Configure FRR"

apt install frr -y >/dev/null 2>&1

sed -i "s/^bgpd=no/bgpd=yes/g" /etc/frr/daemons

NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

cat << EOF >> /etc/frr/frr.conf

!

router bgp 65000

bgp router-id $NODEIP

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

network 10.10.1.0/24

EOF

systemctl daemon-reexec >/dev/null 2>&1

systemctl restart frr >/dev/null 2>&1

systemctl enable frr >/dev/null 2>&1

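echo ">>>> Initial Config End <<<<"

Cilium is installed with native routing mode and the BGP control plane both enabled, so that the Kubernetes cluster and the external network can exchange routing information automatically.

Native routing mode sends traffic directly through the node's regular network interfaces without an overlay network, which improves network performance and reduces complexity.

With the BGP control plane, Cilium shares routing information with external routers over BGP, advertising routes such as Pod CIDRs and LoadBalancer service IPs so that they are reachable from outside the cluster.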
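To make the role of `ipv4NativeRoutingCIDR=172.20.0.0/16` more concrete: as I understand it, destinations inside that CIDR are sent with the Pod IP as the source and therefore depend on the underlying network having routes to them, while destinations outside it are masqueraded when `bpf.masquerade=true`. The sketch below is only a CIDR membership check in plain shell arithmetic, not Cilium's implementation; `ip_to_int` and `in_cidr` are hypothetical helper names, and the addresses come from this lab.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer (assumes 64-bit shell arithmetic).
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_cidr ADDRESS NETWORK/PREFIX -> exit status 0 if ADDRESS is inside the CIDR.
in_cidr() {
  addr=$1
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$addr") & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ]
}

# A Pod-range address, a node address, and the router's dummy interface address.
for dst in 172.20.1.10 192.168.20.100 10.10.1.200; do
  if in_cidr "$dst" 172.20.0.0/16; then
    echo "$dst: inside ipv4NativeRoutingCIDR (routed natively, no masquerade)"
  else
    echo "$dst: outside ipv4NativeRoutingCIDR"
  fi
done
```

Since `autoDirectNodeRoutes=false` is set and the nodes sit on two different subnets, nothing installs routes for other nodes' Pod CIDRs automatically, which is the gap the BGP advertisements are meant to fill in this lab.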

Checking basic information

#

cat /etc/hosts

for i in k8s-w0 k8s-w1 router ; do echo ">> node : $i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@$i hostname; echo; done

# Cluster information

kubectl cluster-info

kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

kubectl describe cm -n kube-system kubeadm-config

kubectl describe cm -n kube-system kubelet-config

# Node information: status and INTERNAL-IP

ifconfig | grep -iEA1 'eth[0-9]:'

kubectl get node -owide

# Pod information: status and Pod IPs

kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

kubectl get ciliumnode -o json | grep podCIDRs -A2

kubectl get pod -A -owide

kubectl get ciliumendpoints -A

# Check the IPAM mode

cilium config view | grep ^ipam

# Check iptables

iptables-save

iptables -t nat -S

iptables -t filter -S

iptables -t mangle -S

iptables -t raw -S

✅ Execution result summary

# Hosts file: network configuration information

(⎈|HomeLab:N/A) root@k8s-ctr:~# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 vagrant

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.2.1 k8s-ctr k8s-ctr

192.168.10.100 k8s-ctr

192.168.10.200 router

192.168.20.100 k8s-w0

192.168.10.101 k8s-w1

# Hostname of each node: SSH connection test

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in k8s-w0 k8s-w1 router ; do echo ">> node : $i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@$i hostname; echo; done

>> node : k8s-w0 <<

Warning: Permanently added 'k8s-w0' (ED25519) to the list of known hosts.

k8s-w0

>> node : k8s-w1 <<

Warning: Permanently added 'k8s-w1' (ED25519) to the list of known hosts.

k8s-w1

>> node : router <<

Warning: Permanently added 'router' (ED25519) to the list of known hosts.

router

# Cluster information: API server address and network settings

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl cluster-info

Kubernetes control plane is running at https://192.168.10.100:6443

CoreDNS is running at https://192.168.10.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

...

# Cluster CIDR and service CIDR

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

"--service-cluster-ip-range=10.96.0.0/16",

"--cluster-cidr=10.244.0.0/16",

# kubeadm config: network settings

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe cm -n kube-system kubeadm-config

Name: kubeadm-config

Namespace: kube-system

...

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/16

proxy: {}

scheduler: {}

# kubelet config: DNS and cluster domain settings

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe cm -n kube-system kubelet-config

Name: kubelet-config

Namespace: kube-system

Labels: <none>

Annotations: kubeadm.kubernetes.io/component-config.hash: sha256:0ff07274ab31cc8c0f9d989e90179a90b6e9b633c8f3671993f44185a0791127

Data

====

kubelet:

----

apiVersion: kubelet.config.k8s.io/v1beta1

...

# Network interfaces: IP addresses on the node

(⎈|HomeLab:N/A) root@k8s-ctr:~# ifconfig | grep -iEA1 'eth[0-9]:'

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

--

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.10.100 netmask 255.255.255.0 broadcast 192.168.10.255

# Node status and IP information

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-ctr Ready control-plane 7m40s v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-71-generic containerd://1.7.27

k8s-w0 Ready <none> 2m8s v1.33.2 192.168.20.100 <none> Ubuntu 24.04.2 LTS 6.8.0-71-generic containerd://1.7.27

k8s-w1 Ready <none> 5m42s v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-71-generic containerd://1.7.27

Checking the Cilium installation

# Cilium ConfigMap: installed configuration
kubectl get cm -n kube-system cilium-config -o json | jq

# Cilium status: agent and component health
cilium status

# BGP-related settings: whether the BGP control plane is enabled
cilium config view | grep -i bgp

# Detailed Cilium agent status, including debug information
kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg status --verbose

# List of Cilium metrics available for monitoring
kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg metrics list

# Cilium packet monitoring: live network traffic
kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor

# Verbose monitoring: more information per event
kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor -v

# Most verbose monitoring: all debug information
kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor -v -v

✅ Execution result summary

# Cilium ConfigMap: installed configuration

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get cm -n kube-system cilium-config -o json | jq

{

"apiVersion": "v1",

"data": {

"agent-not-ready-taint-key": "node.cilium.io/agent-not-ready",

"auto-direct-node-routes": "false",

"bgp-router-id-allocation-ip-pool": "",

...

# Check Cilium status - agent and component health

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: OK

\__/¯¯\__/ Hubble Relay: OK

\__/ ClusterMesh: disabled

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

...

# Check BGP-related settings - whether the BGP control plane is enabled

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep -i bgp

bgp-router-id-allocation-ip-pool

bgp-router-id-allocation-mode default

bgp-secrets-namespace kube-system

enable-bgp-control-plane true

enable-bgp-control-plane-status-report true

# Check detailed Cilium agent status - includes debug information

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg status --verbose

KVStore: Disabled

Kubernetes: Ok 1.33 (v1.33.2) [linux/arm64]

Kubernetes APIs: ["EndpointSliceOrEndpoint", "cilium/v2::CiliumCIDRGroup", "cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Pods", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: True [eth0 10.0.2.15 fd17:625c:f037:2:a00:27ff:fe6f:f7c0 fe80::a00:27ff:fe6f:f7c0, eth1 192.168.10.100 fe80::a00:27ff:fe71:ba6e (Direct Routing)]

...

# List Cilium metrics - the metrics available for monitoring

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg metrics list

Metric Labels Value

cilium_act_processing_time_seconds 0s / 0s / 0s

cilium_agent_api_process_time_seconds method=GET path=/v1/config return_code=200 2.5ms / 4.5ms / 4.95ms

cilium_agent_api_process_time_seconds method=GET path=/v1/endpoint return_code=200 2.5ms / 4.5ms / 4.95ms

cilium_agent_api_process_time_seconds method=GET path=/v1/healthz return_code=200 2.5ms / 4.5ms / 4.95ms

cilium_agent_api_process_time_seconds method=POST path=/v1/ipam return_code=201 2.5ms / 4.5ms / 4.95ms

cilium_agent_api_process_time_seconds method=PUT path=/v1/endpoint return_code=201 1.75s / 2.35s / 2.485s

...

# Cilium packet monitoring - watch network traffic in real time

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor

Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

time=2025-08-15T04:46:50.569716626Z level=info msg="Initializing dissection cache..."

-> endpoint 12 flow 0x9c02bef2 , identity host->31648 state new ifindex lxc2dd4f54f1d9c orig-ip 172.20.0.123: 172.20.0.123:59112 -> 172.20.0.174:10250 tcp SYN

-> stack flow 0xe2731918 , identity 31648->host state reply ifindex 0 orig-ip 0.0.0.0: 172.20.0.174:10250 -> 172.20.0.123:59112 tcp SYN, ACK

-> endpoint 12 flow 0x9c02bef2 , identity host->31648 state established ifindex lxc2dd4f54f1d9c orig-ip 172.20.0.123: 172.20.0.123:59112 -> 172.20.0.174:10250 tcp ACK

...

# Maximum-verbosity monitoring (-v -v) - prints all debug information

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor -v -v

Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

------------------------------------------------------------------------------

time=2025-08-15T04:46:59.215297051Z level=info msg="Initializing dissection cache..."

Ethernet {Contents=[..14..] Payload=[..62..] SrcMAC=08:00:27:71:ba:6e DstMAC=08:00:27:e3:cc:dc EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..40..] Version=4 IHL=5 TOS=0 Length=60 Id=43469 Flags=DF FragOffset=0 TTL=63 Protocol=TCP Checksum=4128 SrcIP=172.20.0.174 DstIP=192.168.20.100 Options=[] Padding=[]}

TCP {Contents=[..40..] Payload=[] SrcPort=58510 DstPort=10250 Seq=1925301461 Ack=0 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64240 Checksum=33277 Urgent=0 Options=[..5..] Padding=[] Multipath=false}

CPU 01: MARK 0x470ee21e FROM 12 to-network: 74 bytes (74 captured), state established, interface eth1, , identity 31648->remote-node, orig-ip 0.0.0.0

------------------------------------------------------------------------------

...

Received an interrupt, disconnecting from monitor...

Checking network information: autoDirectNodeRoutes=false

# Check the router's network interfaces

sshpass -p 'vagrant' ssh vagrant@router ip -br -c -4 addr

# Check the k8s nodes' network interfaces

ip -c -4 addr show dev eth1

for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c -4 addr show dev eth1; echo; done

# Check routing information

sshpass -p 'vagrant' ssh vagrant@router ip -c route

ip -c route | grep static

## There are no routes for the other nodes' PodCIDRs!

ip -c route

for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c route; echo; done

# Connectivity check

ping -c 1 192.168.20.100 # k8s-w0 eth1

Execution results

# Check the router's network interfaces - it sits on multiple network ranges

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

eth0 UP 10.0.2.15/24 metric 100

eth1 UP 192.168.10.200/24

eth2 UP 192.168.20.200/24

loop1 UNKNOWN 10.10.1.200/24

loop2 UNKNOWN 10.10.2.200/24

# Check eth1 on the control plane node - 192.168.10.100/24

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c -4 addr show dev eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s9

inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

# Check eth1 on the worker nodes - they sit on different network ranges

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c -4 addr show dev eth1; echo; done

>> node : k8s-w1 <<

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s9

inet 192.168.10.101/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

>> node : k8s-w0 <<

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

altname enp0s9

inet 192.168.20.100/24 brd 192.168.20.255 scope global eth1

valid_lft forever preferred_lft forever

# Check the router's routing table - routes per network range

(⎈|HomeLab:N/A) root@k8s-ctr:~# sshpass -p 'vagrant' ssh vagrant@router ip -c route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200

10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

# Check static routes on the control plane node - route to 192.168.20.0/24

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route | grep static

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static # auto direct node routes is disabled, so no Pod CIDR routes are installed, even for nodes on the same subnet

# Check the full routing table on the control plane node - includes Cilium native routing entries

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

172.20.0.0/24 via 172.20.0.123 dev cilium_host proto kernel src 172.20.0.123 # k8s-w1 is on the same subnet, yet its Pod CIDR is not registered here

# 172.20.1.0/24 (no route for the k8s-w1 Pod CIDR)

172.20.0.123 dev cilium_host proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

# Check the worker nodes' routing tables - per-node Cilium Pod CIDR routes

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c route; echo; done

>> node : k8s-w1 <<

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

172.20.1.0/24 via 172.20.1.198 dev cilium_host proto kernel src 172.20.1.198 # each worker node likewise has a route only for its own Pod CIDR

172.20.1.198 dev cilium_host proto kernel scope link

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

>> node : k8s-w0 <<

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

172.20.2.0/24 via 172.20.2.36 dev cilium_host proto kernel src 172.20.2.36 # each worker node likewise has a route only for its own Pod CIDR

172.20.2.36 dev cilium_host proto kernel scope link

192.168.10.0/24 via 192.168.20.200 dev eth1 proto static

192.168.20.0/24 dev eth1 proto kernel scope link src 192.168.20.100

# Check node-to-node connectivity - ping the k8s-w0 node

(⎈|HomeLab:N/A) root@k8s-ctr:~# ping -c 1 192.168.20.100 # k8s-w0 eth1

PING 192.168.20.100 (192.168.20.100) 56(84) bytes of data.

64 bytes from 192.168.20.100: icmp_seq=1 ttl=63 time=0.881 ms

--- 192.168.20.100 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.881/0.881/0.881/0.000 ms

Verifying the communication problem with a sample application

Deploying the application

# Deploy the sample application
cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webpod  # must match the pod label above so each replica lands on a different node
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF

# Deploy curl-pod on the k8s-ctr node
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Verify the deployment

kubectl get deploy,svc,ep webpod -owide

kubectl get endpointslices -l app=webpod

kubectl get ciliumendpoints # check IPs

# Check the communication problem: only pods on the same node can reach each other!

kubectl exec -it curl-pod -- curl -s --connect-timeout 1 webpod | grep Hostname

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# cilium-dbg, map

kubectl exec -n kube-system ds/cilium -- cilium-dbg ip list

kubectl exec -n kube-system ds/cilium -- cilium-dbg endpoint list

kubectl exec -n kube-system ds/cilium -- cilium-dbg service list

kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf lb list

kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf nat list

kubectl exec -n kube-system ds/cilium -- cilium-dbg map list | grep -v '0 0'

kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_services_v2

kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_backends_v3

kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_reverse_nat

kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_ipcache_v2

✅ Execution result summary

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep webpod -owide

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/webpod 3/3 3 3 10s webpod traefik/whoami app=webpod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/webpod ClusterIP 10.96.150.245 <none> 80/TCP 10s app=webpod

NAME ENDPOINTS AGE

endpoints/webpod 172.20.0.216:80,172.20.1.184:80,172.20.2.160:80 10s

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get endpointslices -l app=webpod

NAME ADDRESSTYPE PORTS ENDPOINTS AGE

webpod-57x67 IPv4 80 172.20.0.216,172.20.2.160,172.20.1.184 15s

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints # check each pod IP

NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

curl-pod 5764 ready 172.20.0.100

webpod-697b545f57-cmftm 21214 ready 172.20.2.160

webpod-697b545f57-hchf7 21214 ready 172.20.0.216

webpod-697b545f57-smclf 21214 ready 172.20.1.184

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- curl -s --connect-timeout 1 webpod | grep Hostname

command terminated with exit code 28

# Only the pod on the control plane node responds; requests to the pods on k8s-w0 and k8s-w1 time out

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

---

---

Hostname: webpod-697b545f57-hchf7

---

---

---
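Only webpod-697b545f57-hchf7 (172.20.0.216, running on k8s-ctr) answers, which matches the routing table seen earlier: k8s-ctr has a kernel route only for its own Pod CIDR. The block below is a minimal sketch that replays that reasoning offline. The route lines and Pod IPs are copied from the outputs in this post, the helper name `has_pod_route` is hypothetical, and it assumes every node's Pod CIDR is a /24, as in this lab.

```shell
#!/bin/sh
# 172.20.x.x routes observed on k8s-ctr (copied from the `ip -c route` output).
ctr_routes='172.20.0.0/24 via 172.20.0.123 dev cilium_host proto kernel src 172.20.0.123
172.20.0.123 dev cilium_host proto kernel scope link'

# has_pod_route ROUTES POD_IP (hypothetical helper)
# Succeeds when ROUTES contains a /24 route whose prefix covers POD_IP.
# Assumption: Pod CIDRs are /24 networks, as in this lab.
has_pod_route() {
  prefix="${2%.*}.0/24"   # e.g. 172.20.1.184 -> 172.20.1.0/24
  printf '%s\n' "$1" | awk -v p="$prefix" '$1 == p { found = 1 } END { exit !found }'
}

# webpod IPs taken from `kubectl get ciliumendpoints`
for ip in 172.20.0.216 172.20.1.184 172.20.2.160; do
  if has_pod_route "$ctr_routes" "$ip"; then
    echo "$ip: covered by a local Pod CIDR route"
  else
    echo "$ip: no Pod CIDR route, falls back to the default route (eth0)"
  fi
done
```

Traffic to the two remote Pod IPs leaves through the default route on eth0 (the NAT interface) instead of eth1, so it never reaches k8s-w1 or k8s-w0.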

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg ip list

IP IDENTITY SOURCE

0.0.0.0/0 reserved:world

172.20.1.0/24 reserved:world

172.20.2.0/24 reserved:world

10.0.2.15/32 reserved:host

reserved:kube-apiserver

172.20.0.45/32 k8s:app=local-path-provisioner custom-resource

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account

k8s:io.kubernetes.pod.namespace=local-path-storage

172.20.0.62/32 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system custom-resource

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

172.20.0.100/32 k8s:app=curl custom-resource

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

172.20.0.123/32 reserved:host

reserved:kube-apiserver

172.20.0.173/32 k8s:app.kubernetes.io/name=hubble-ui custom-resource

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

172.20.0.174/32 k8s:app.kubernetes.io/instance=metrics-server custom-resource

k8s:app.kubernetes.io/name=metrics-server

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

172.20.0.210/32 k8s:app.kubernetes.io/name=hubble-relay custom-resource

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-relay

172.20.0.216/32 k8s:app=webpod custom-resource

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

172.20.0.232/32 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system custom-resource

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

172.20.1.184/32 k8s:app=webpod custom-resource

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

172.20.1.198/32 reserved:remote-node

172.20.2.36/32 reserved:remote-node

172.20.2.160/32 k8s:app=webpod custom-resource

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

192.168.10.100/32 reserved:host

reserved:kube-apiserver

192.168.10.101/32 reserved:remote-node

192.168.20.100/32 reserved:remote-node

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg endpoint list

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

12 Disabled Disabled 31648 k8s:app.kubernetes.io/instance=metrics-server 172.20.0.174 ready

k8s:app.kubernetes.io/name=metrics-server

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

75 Disabled Disabled 6632 k8s:app.kubernetes.io/name=hubble-relay 172.20.0.210 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-relay

120 Disabled Disabled 5480 k8s:app=local-path-provisioner 172.20.0.45 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account

k8s:io.kubernetes.pod.namespace=local-path-storage

155 Disabled Disabled 21214 k8s:app=webpod 172.20.0.216 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

497 Disabled Disabled 1 k8s:node-role.kubernetes.io/control-plane ready

k8s:node.kubernetes.io/exclude-from-external-load-balancers

reserved:host

640 Disabled Disabled 5764 k8s:app=curl 172.20.0.100 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

2265 Disabled Disabled 332 k8s:app.kubernetes.io/name=hubble-ui 172.20.0.173 ready

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

3826 Disabled Disabled 6708 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 172.20.0.232 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

4056 Disabled Disabled 6708 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 172.20.0.62 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg service list

ID Frontend Service Type Backend

1 10.96.45.94:443/TCP ClusterIP 1 => 172.20.0.174:10250/TCP (active)

2 10.96.64.238:80/TCP ClusterIP 1 => 172.20.0.210:4245/TCP (active)

3 0.0.0.0:30003/TCP NodePort 1 => 172.20.0.173:8081/TCP (active)

6 10.96.16.7:80/TCP ClusterIP 1 => 172.20.0.173:8081/TCP (active)

7 10.96.0.10:53/TCP ClusterIP 1 => 172.20.0.62:53/TCP (active)

2 => 172.20.0.232:53/TCP (active)

8 10.96.0.10:53/UDP ClusterIP 1 => 172.20.0.62:53/UDP (active)

2 => 172.20.0.232:53/UDP (active)

9 10.96.0.10:9153/TCP ClusterIP 1 => 172.20.0.62:9153/TCP (active)

2 => 172.20.0.232:9153/TCP (active)

10 10.96.0.1:443/TCP ClusterIP 1 => 192.168.10.100:6443/TCP (active)

11 10.96.141.149:443/TCP ClusterIP 1 => 192.168.10.100:4244/TCP (active)

12 10.96.150.245:80/TCP ClusterIP 1 => 172.20.0.216:80/TCP (active)

2 => 172.20.1.184:80/TCP (active)

3 => 172.20.2.160:80/TCP (active)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf lb list

SERVICE ADDRESS BACKEND ADDRESS (REVNAT_ID) (SLOT)

10.0.2.15:30003/TCP (0) 0.0.0.0:0 (4) (0) [NodePort]

10.96.0.10:53/TCP (2) 172.20.0.232:53/TCP (7) (2)

10.96.16.7:80/TCP (1) 172.20.0.173:8081/TCP (6) (1)

192.168.10.100:30003/TCP (1) 172.20.0.173:8081/TCP (5) (1)

10.96.150.245:80/TCP (0) 0.0.0.0:0 (12) (0) [ClusterIP, non-routable]

10.96.150.245:80/TCP (3) 172.20.2.160:80/TCP (12) (3)

10.0.2.15:30003/TCP (1) 172.20.0.173:8081/TCP (4) (1)

10.96.0.10:53/TCP (0) 0.0.0.0:0 (7) (0) [ClusterIP, non-routable]

10.96.0.10:53/TCP (1) 172.20.0.62:53/TCP (7) (1)

10.96.0.10:53/UDP (2) 172.20.0.232:53/UDP (8) (2)

10.96.0.10:9153/TCP (0) 0.0.0.0:0 (9) (0) [ClusterIP, non-routable]

10.96.141.149:443/TCP (1) 192.168.10.100:4244/TCP (11) (1)

10.96.16.7:80/TCP (0) 0.0.0.0:0 (6) (0) [ClusterIP, non-routable]

10.96.45.94:443/TCP (0) 0.0.0.0:0 (1) (0) [ClusterIP, non-routable]

10.96.0.10:9153/TCP (2) 172.20.0.232:9153/TCP (9) (2)

192.168.10.100:30003/TCP (0) 0.0.0.0:0 (5) (0) [NodePort]

10.96.0.1:443/TCP (1) 192.168.10.100:6443/TCP (10) (1)

10.96.0.1:443/TCP (0) 0.0.0.0:0 (10) (0) [ClusterIP, non-routable]

10.96.150.245:80/TCP (1) 172.20.0.216:80/TCP (12) (1)

0.0.0.0:30003/TCP (0) 0.0.0.0:0 (3) (0) [NodePort, non-routable]

10.96.141.149:443/TCP (0) 0.0.0.0:0 (11) (0) [ClusterIP, InternalLocal, non-routable]

0.0.0.0:30003/TCP (1) 172.20.0.173:8081/TCP (3) (1)

10.96.45.94:443/TCP (1) 172.20.0.174:10250/TCP (1) (1)

10.96.0.10:53/UDP (1) 172.20.0.62:53/UDP (8) (1)

10.96.64.238:80/TCP (0) 0.0.0.0:0 (2) (0) [ClusterIP, non-routable]

10.96.0.10:9153/TCP (1) 172.20.0.62:9153/TCP (9) (1)

10.96.150.245:80/TCP (2) 172.20.1.184:80/TCP (12) (2)

10.96.0.10:53/UDP (0) 0.0.0.0:0 (8) (0) [ClusterIP, non-routable]

10.96.64.238:80/TCP (1) 172.20.0.210:4245/TCP (2) (1)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf nat list

TCP OUT 10.0.2.15:58130 -> 44.193.57.117:443 XLATE_SRC 10.0.2.15:58130 Created=63sec ago NeedsCT=1

TCP OUT 10.0.2.15:38416 -> 44.218.153.24:443 XLATE_SRC 10.0.2.15:38416 Created=62sec ago NeedsCT=1

TCP OUT 10.0.2.15:57526 -> 34.232.204.112:443 XLATE_SRC 10.0.2.15:57526 Created=55sec ago NeedsCT=1

TCP IN 44.218.153.24:443 -> 10.0.2.15:38416 XLATE_DST 10.0.2.15:38416 Created=62sec ago NeedsCT=1

TCP IN 34.232.204.112:443 -> 10.0.2.15:57526 XLATE_DST 10.0.2.15:57526 Created=55sec ago NeedsCT=1

TCP IN 52.207.69.161:443 -> 10.0.2.15:36134 XLATE_DST 10.0.2.15:36134 Created=64sec ago NeedsCT=1

UDP OUT 10.0.2.15:53106 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:53106 Created=60sec ago NeedsCT=1

UDP OUT 10.0.2.15:47768 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:47768 Created=63sec ago NeedsCT=1

TCP OUT 10.0.2.15:36134 -> 52.207.69.161:443 XLATE_SRC 10.0.2.15:36134 Created=64sec ago NeedsCT=1

TCP IN 54.210.249.78:443 -> 10.0.2.15:42170 XLATE_DST 10.0.2.15:42170 Created=60sec ago NeedsCT=1

TCP IN 104.16.99.215:443 -> 10.0.2.15:37850 XLATE_DST 10.0.2.15:37850 Created=54sec ago NeedsCT=1

TCP IN 54.144.250.218:443 -> 10.0.2.15:36102 XLATE_DST 10.0.2.15:36102 Created=61sec ago NeedsCT=1

TCP OUT 10.0.2.15:42170 -> 54.210.249.78:443 XLATE_SRC 10.0.2.15:42170 Created=60sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:36971 XLATE_DST 10.0.2.15:36971 Created=64sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:53106 XLATE_DST 10.0.2.15:53106 Created=60sec ago NeedsCT=1

TCP IN 34.232.204.112:443 -> 10.0.2.15:57510 XLATE_DST 10.0.2.15:57510 Created=59sec ago NeedsCT=1

TCP IN 98.85.154.13:443 -> 10.0.2.15:47904 XLATE_DST 10.0.2.15:47904 Created=57sec ago NeedsCT=1

TCP IN 35.172.147.99:443 -> 10.0.2.15:40512 XLATE_DST 10.0.2.15:40512 Created=58sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:47768 XLATE_DST 10.0.2.15:47768 Created=63sec ago NeedsCT=1

TCP IN 34.232.204.112:443 -> 10.0.2.15:57534 XLATE_DST 10.0.2.15:57534 Created=55sec ago NeedsCT=1

TCP OUT 10.0.2.15:37850 -> 104.16.99.215:443 XLATE_SRC 10.0.2.15:37850 Created=54sec ago NeedsCT=1

TCP OUT 10.0.2.15:53782 -> 104.16.100.215:443 XLATE_SRC 10.0.2.15:53782 Created=54sec ago NeedsCT=1

UDP OUT 10.0.2.15:36971 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:36971 Created=64sec ago NeedsCT=1

TCP IN 44.218.153.24:443 -> 10.0.2.15:39802 XLATE_DST 10.0.2.15:39802 Created=50sec ago NeedsCT=1

TCP IN 104.16.99.215:443 -> 10.0.2.15:37848 XLATE_DST 10.0.2.15:37848 Created=60sec ago NeedsCT=1

UDP OUT 10.0.2.15:46837 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:46837 Created=64sec ago NeedsCT=1

TCP OUT 10.0.2.15:56028 -> 34.195.83.243:443 XLATE_SRC 10.0.2.15:56028 Created=62sec ago NeedsCT=1

TCP OUT 10.0.2.15:42184 -> 54.210.249.78:443 XLATE_SRC 10.0.2.15:42184 Created=57sec ago NeedsCT=1

TCP OUT 10.0.2.15:36102 -> 54.144.250.218:443 XLATE_SRC 10.0.2.15:36102 Created=61sec ago NeedsCT=1

TCP IN 44.193.57.117:443 -> 10.0.2.15:58130 XLATE_DST 10.0.2.15:58130 Created=63sec ago NeedsCT=1

TCP IN 54.210.249.78:443 -> 10.0.2.15:42184 XLATE_DST 10.0.2.15:42184 Created=57sec ago NeedsCT=1

TCP IN 34.195.83.243:443 -> 10.0.2.15:56028 XLATE_DST 10.0.2.15:56028 Created=62sec ago NeedsCT=1

TCP OUT 10.0.2.15:39802 -> 44.218.153.24:443 XLATE_SRC 10.0.2.15:39802 Created=50sec ago NeedsCT=1

UDP OUT 10.0.2.15:50353 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:50353 Created=63sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:50353 XLATE_DST 10.0.2.15:50353 Created=63sec ago NeedsCT=1

TCP OUT 10.0.2.15:57534 -> 34.232.204.112:443 XLATE_SRC 10.0.2.15:57534 Created=55sec ago NeedsCT=1

TCP IN 34.195.83.243:443 -> 10.0.2.15:56042 XLATE_DST 10.0.2.15:56042 Created=56sec ago NeedsCT=1

TCP OUT 10.0.2.15:40512 -> 35.172.147.99:443 XLATE_SRC 10.0.2.15:40512 Created=58sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:46837 XLATE_DST 10.0.2.15:46837 Created=64sec ago NeedsCT=1

TCP OUT 10.0.2.15:56042 -> 34.195.83.243:443 XLATE_SRC 10.0.2.15:56042 Created=56sec ago NeedsCT=1

TCP IN 104.16.101.215:443 -> 10.0.2.15:33894 XLATE_DST 10.0.2.15:33894 Created=55sec ago NeedsCT=1

UDP OUT 10.0.2.15:49708 -> 10.0.2.3:53 XLATE_SRC 10.0.2.15:49708 Created=60sec ago NeedsCT=1

TCP IN 104.16.100.215:443 -> 10.0.2.15:53782 XLATE_DST 10.0.2.15:53782 Created=54sec ago NeedsCT=1

TCP OUT 10.0.2.15:37848 -> 104.16.99.215:443 XLATE_SRC 10.0.2.15:37848 Created=60sec ago NeedsCT=1

TCP OUT 10.0.2.15:57510 -> 34.232.204.112:443 XLATE_SRC 10.0.2.15:57510 Created=59sec ago NeedsCT=1

UDP IN 10.0.2.3:53 -> 10.0.2.15:49708 XLATE_DST 10.0.2.15:49708 Created=60sec ago NeedsCT=1

TCP OUT 10.0.2.15:47904 -> 98.85.154.13:443 XLATE_SRC 10.0.2.15:47904 Created=57sec ago NeedsCT=1

TCP OUT 10.0.2.15:36118 -> 54.144.250.218:443 XLATE_SRC 10.0.2.15:36118 Created=60sec ago NeedsCT=1

TCP OUT 10.0.2.15:33894 -> 104.16.101.215:443 XLATE_SRC 10.0.2.15:33894 Created=55sec ago NeedsCT=1

TCP IN 54.144.250.218:443 -> 10.0.2.15:36118 XLATE_DST 10.0.2.15:36118 Created=60sec ago NeedsCT=1

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map list | grep -v '0 0'

Name Num entries Num errors Cache enabled

cilium_policy_v2_00075 3 0 true

cilium_policy_v2_00155 3 0 true

cilium_policy_v2_00640 3 0 true

cilium_lb4_reverse_nat 12 0 true

cilium_lxc 11 0 true

cilium_ipcache_v2 20 0 true

cilium_policy_v2_03826 3 0 true

cilium_policy_v2_04056 3 0 true

cilium_policy_v2_00012 3 0 true

cilium_lb4_backends_v3 14 0 true

cilium_lb4_reverse_sk 7 0 true

cilium_runtime_config 256 0 true

cilium_policy_v2_02265 3 0 true

cilium_lb4_services_v2 29 0 true

cilium_policy_v2_00497 2 0 true

cilium_policy_v2_00120 3 0 true

# Check the Cilium load balancer service map - service IP to backend mapping

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_services_v2

Key Value State Error

0.0.0.0:30003/TCP (0) 0 1[0] (3) [0x2 0x0]

10.96.16.7:80/TCP (0) 0 1[0] (6) [0x0 0x0] # hubble-ui service

10.96.0.10:53/TCP (2) 4 0[0] (7) [0x0 0x0] # CoreDNS service

10.96.0.10:9153/TCP (2) 8 0[0] (9) [0x0 0x0] # CoreDNS metrics

10.96.45.94:443/TCP (0) 0 1[0] (1) [0x0 0x0] # metrics-server

...

# Check the Cilium load balancer backend map - the actual pod IP addresses

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_backends_v3

Key Value State Error

4 TCP://172.20.0.232 # CoreDNS pod

3 TCP://172.20.0.62 # CoreDNS pod

8 TCP://172.20.0.232 # CoreDNS pod

9 TCP://172.20.0.210 # hubble-relay pod

2 TCP://192.168.10.100 # control plane node

5 UDP://172.20.0.62 # CoreDNS pod

11 TCP://172.20.0.173 # hubble-ui pod

...

# Check the Cilium load balancer reverse NAT map - service IP and port mapping

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_reverse_nat

Key Value State Error

3 0.0.0.0:30003 # NodePort service

2 10.96.64.238:80 # hubble-relay service

11 10.96.141.149:443 # hubble-peer service

9 10.96.0.10:9153 # CoreDNS metrics

7 10.96.0.10:53 # CoreDNS service

10 10.96.0.1:443 # Kubernetes API

8 10.96.0.10:53 # CoreDNS service

12 10.96.150.245:80 # test service (webpod)

6 10.96.16.7:80 # hubble-ui service

1 10.96.45.94:443 # metrics-server

...

# Check the Cilium IP cache map - IP address to security identity mapping

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_ipcache_v2

Key Value State Error

192.168.10.100/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # control plane node

0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # external networks (world)

172.20.0.173/32 identity=332 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # hubble-ui pod

172.20.0.210/32 identity=6632 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # hubble-relay pod

192.168.10.101/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # k8s-w1 node

172.20.0.62/32 identity=6708 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # CoreDNS pod

172.20.0.174/32 identity=31648 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # metrics-server pod

172.20.2.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.20.100 flags=hastunnel sync # k8s-w0 node Pod CIDR

172.20.1.0/24 identity=2 encryptkey=0 tunnelendpoint=192.168.10.101 flags=hastunnel sync # k8s-w1 node Pod CIDR

192.168.20.100/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync # k8s-w0 node

...

Cilium BGP Control Plane

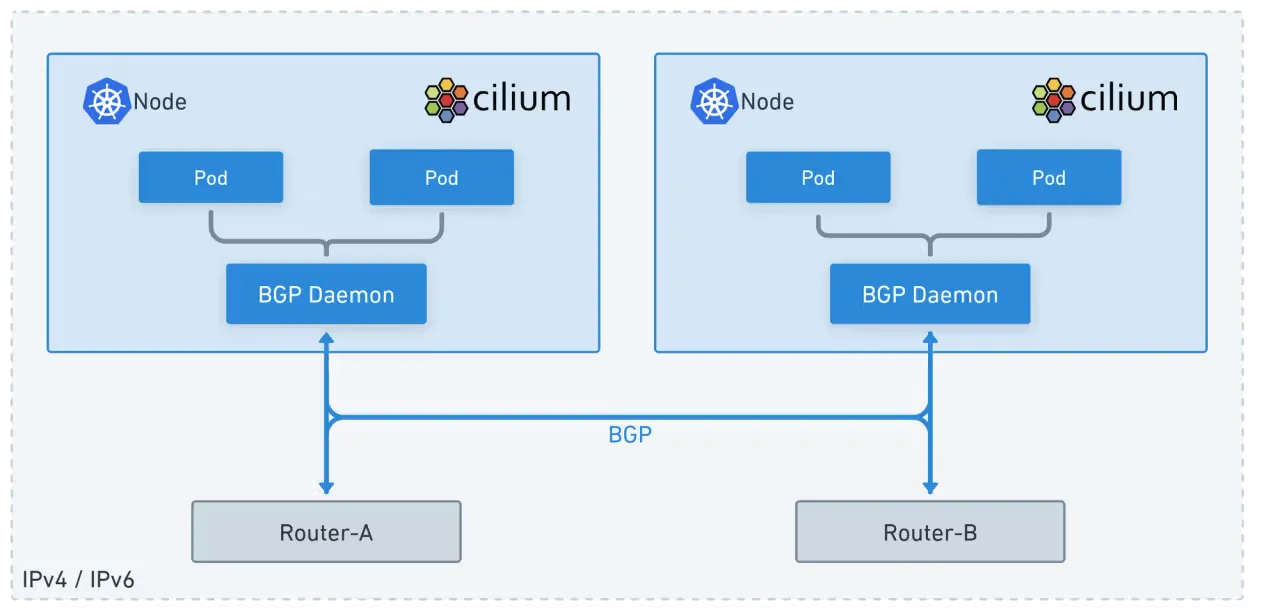

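Cilium BGP Control Plane (BGPv2): BGP configuration can be managed through Cilium custom resources.

The diagram above shows the overall data flow of Cilium BGPv2. First, the user creates the BGP configuration as Kubernetes custom resources (CRDs) with kubectl.

The Cilium Operator detects this configuration and passes it to the Cilium Agent on each node. Each Cilium Agent configures its local BGP daemon from the configuration it receives and uses it to establish BGP sessions with the external router.

Through this process, the external IP addresses of LoadBalancer services are automatically advertised into the external router's routing table, so the services become reachable from outside the cluster.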

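Differences from the v1 legacy approach

With the legacy v1 approach, the BGP configuration had to be written directly into the Cilium agent configuration on each node. This meant a lot of manual work, and every configuration change required updating all nodes individually.

BGPv2, in contrast, manages BGP configuration declaratively through Kubernetes CRDs, so a change is applied to all nodes automatically. The manual configuration of the legacy approach also made it hard to scale, whereas BGPv2's automated configuration management works efficiently even in large clusters.

Finally, BGP state and configuration can be checked easily with kubectl, which greatly improves visibility.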
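The kubectl-based visibility mentioned above can be sketched as a small wrapper. This is a minimal sketch, assuming the BGPv2 CRDs are installed and both kubectl and the cilium CLI are available on the host; the wrapper name `bgp_overview` is hypothetical, and the block only defines the function, so nothing runs until it is called.

```shell
#!/bin/sh
# bgp_overview (hypothetical wrapper): print BGP configuration and session state.
bgp_overview() {
  # Cluster-wide BGPv2 resources created by the user
  kubectl get ciliumbgpclusterconfigs,ciliumbgppeerconfigs,ciliumbgpadvertisements
  # Per-node configuration derived from the cluster-wide config
  kubectl get ciliumbgpnodeconfigs
  # Peering sessions and routes as seen by the Cilium agents
  cilium bgp peers
  cilium bgp routes available ipv4 unicast
}

# Run it from a host that has kubectl and the cilium CLI, e.g. k8s-ctr:
#   bgp_overview
```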

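Main options

- CiliumBGPClusterConfig: defines the BGP instances and peer configuration applied to multiple nodes.

- CiliumBGPPeerConfig: a set of BGP peering settings that can be shared across multiple peers.

- CiliumBGPAdvertisement: defines the prefixes that are injected into the BGP routing table.

- CiliumBGPNodeConfigOverride: defines node-specific BGP configuration for finer-grained control.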
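To make the relationship between these resources concrete, here is a rough sketch of a manifest that ties three of them together for this lab. The router address (192.168.10.200) and its ASN (65000) come from the lab environment. The local ASN 65001, the `enable-bgp` node label, and every resource, instance, and peer name are assumptions made for illustration. The `cilium.io/v2` API version is assumed for Cilium 1.18; older releases may serve these kinds under `cilium.io/v2alpha1`. Timers, multihop, and graceful restart settings are left out. The block only writes the manifest to a file so it can be reviewed before applying.

```shell
#!/bin/sh
# Write an illustrative BGPv2 manifest to a file for review before applying.
# Assumptions (not from this post): local ASN 65001, the enable-bgp=true label,
# and all resource/instance/peer names.
cat << 'EOF' > cilium-bgp-sketch.yaml
apiVersion: cilium.io/v2
kind: CiliumBGPAdvertisement
metadata:
  name: bgp-advertisements
  labels:
    advertise: bgp
spec:
  advertisements:
    - advertisementType: "PodCIDR"
---
apiVersion: cilium.io/v2
kind: CiliumBGPPeerConfig
metadata:
  name: cilium-peer
spec:
  families:
    - afi: ipv4
      safi: unicast
      advertisements:
        matchLabels:
          advertise: "bgp"
---
apiVersion: cilium.io/v2
kind: CiliumBGPClusterConfig
metadata:
  name: cilium-bgp
spec:
  nodeSelector:
    matchLabels:
      enable-bgp: "true"
  bgpInstances:
    - name: "instance-65001"
      localASN: 65001
      peers:
        - name: "router"
          peerASN: 65000
          peerAddress: 192.168.10.200
          peerConfigRef:
            name: "cilium-peer"
EOF

# Review the file, then apply it and label the nodes that should peer:
#   kubectl apply -f cilium-bgp-sketch.yaml
#   kubectl label nodes k8s-ctr k8s-w0 k8s-w1 enable-bgp=true
```

The cluster config selects nodes by label and points each peer at a peer config, and the peer config in turn selects advertisements by label, so each piece can be changed independently.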

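Caveats

By default, Cilium's BGP does not install externally learned routes into the kernel routing table. So when a node has two or more NICs, you have to configure and manage those routes yourself.

We will confirm this issue in the hands-on lab.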
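In this lab the default route points at eth0 (10.0.2.2) while the BGP peer sits behind eth1, so the kernel has no entry for remote Pod CIDRs unless one is added by hand. The block below is a rough sketch of such a manual route. It assumes the three /24 Pod CIDRs seen earlier can be summarized as 172.20.0.0/16 and that the router (192.168.10.200 or 192.168.20.200, depending on the node's subnet) is the next hop. The helper name `pod_route_cmd` is hypothetical, and it only prints the command rather than changing the routing table.

```shell
#!/bin/sh
# pod_route_cmd NODE_ETH1_IP (hypothetical helper)
# Prints the static route that sends Pod traffic to the router on the node's own subnet.
# Assumption: 172.20.0.0/16 summarizes all Pod CIDRs in this lab.
pod_route_cmd() {
  case "$1" in
    192.168.10.*) gw=192.168.10.200 ;;  # k8s-ctr, k8s-w1
    192.168.20.*) gw=192.168.20.200 ;;  # k8s-w0
    *) echo "unknown subnet: $1" >&2; return 1 ;;
  esac
  echo "ip route add 172.20.0.0/16 via $gw"
}

pod_route_cmd 192.168.10.100   # k8s-ctr
pod_route_cmd 192.168.20.100   # k8s-w0
```

Because each node's own /24 route via cilium_host is more specific than the /16, local Pod traffic is unaffected by the summary route.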

Checking connectivity after BGP configuration

Connect to the router, then configure it: sshpass -p 'vagrant' ssh vagrant@router

sshpass -p 'vagrant' ssh vagrant@router

# Check FRR daemon processes - whether the BGP routing daemons are running

ss -tnlp | grep -iE 'zebra|bgpd'

ps -ef |grep frr

# Check the current FRR configuration - BGP router settings

vtysh -c 'show running'

cat /etc/frr/frr.conf

...

log syslog informational

!

router bgp 65000

bgp router-id 192.168.10.200

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

# Check BGP state: sessions and routing table

vtysh -c 'show running'

vtysh -c 'show ip bgp summary'

vtysh -c 'show ip bgp'

# Option 1 for peering with the Cilium nodes: edit the config file directly

# Neighbors: 192.168.10.100 = k8s-ctr, 192.168.10.101 = k8s-w1, 192.168.20.100 = k8s-w0

cat << EOF >> /etc/frr/frr.conf

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

EOF

cat /etc/frr/frr.conf

systemctl daemon-reexec && systemctl restart frr # restart the FRR service

systemctl status frr --no-pager --full # check the FRR service status

# Leave log monitoring running

journalctl -u frr -f

# Option 2 for peering with the Cilium nodes: interactive configuration with vtysh

vtysh

---------------------------

?

show ?

show running

show ip route

# Enter config mode

conf

?

## Enter the configuration for router bgp 65000

router bgp 65000

?

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM # k8s-ctr node

neighbor 192.168.10.101 peer-group CILIUM # k8s-w1 node

neighbor 192.168.20.100 peer-group CILIUM # k8s-w0 node

end

# Write configuration to the file (same as write file)

write memory

exit

---------------------------

cat /etc/frr/frr.conf

# Check FRR daemon ports and process status

root@router:~# ss -tnlp | grep -iE 'zebra|bgpd'

LISTEN 0 3 127.0.0.1:2605 0.0.0.0:* users:(("bgpd",pid=4148,fd=18)) # bgpd management (vty) port

LISTEN 0 3 127.0.0.1:2601 0.0.0.0:* users:(("zebra",pid=4143,fd=23)) # zebra management (vty) port

LISTEN 0 4096 0.0.0.0:179 0.0.0.0:* users:(("bgpd",pid=4148,fd=22)) # standard BGP port 179 (IPv4)

LISTEN 0 4096 [::]:179 [::]:* users:(("bgpd",pid=4148,fd=23)) # BGP port 179 (IPv6)

root@router:~# ps -ef |grep frr

root 4130 1 0 17:05 ? 00:00:00 /usr/lib/frr/watchfrr -d -F traditional zebra bgpd staticd # FRR watchdog process

frr 4143 1 0 17:05 ? 00:00:00 /usr/lib/frr/zebra -d -F traditional -A 127.0.0.1 -s 90000000 # zebra routing daemon

frr 4148 1 0 17:05 ? 00:00:00 /usr/lib/frr/bgpd -d -F traditional -A 127.0.0.1 # BGP daemon

frr 4155 1 0 17:05 ? 00:00:00 /usr/lib/frr/staticd -d -F traditional -A 127.0.0.1 # static routing daemon

...

root@router:~# vtysh -c 'show running'

Building configuration...

Current configuration:

!

frr version 8.4.4

frr defaults traditional

hostname router

log syslog informational

no ipv6 forwarding

service integrated-vtysh-config

!

router bgp 65000

bgp router-id 192.168.10.200

no bgp ebgp-requires-policy

bgp graceful-restart

bgp bestpath as-path multipath-relax

!

address-family ipv4 unicast

network 10.10.1.0/24

maximum-paths 4

exit-address-family

exit

!

end

# Check the FRR configuration file

root@router:~# cat /etc/frr/frr.conf

# default to using syslog. /etc/rsyslog.d/45-frr.conf places the log in

# /var/log/frr/frr.log

...

log syslog informational

!

router bgp 65000

bgp router-id 192.168.10.200 # BGP router ID

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

network 10.10.1.0/24 # network to advertise

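# Check BGP state: peers and routing table

root@router:~# vtysh -c 'show ip bgp summary'

% No BGP neighbors found in VRF default # no BGP peers configured yet

# AS 65000 used here falls inside the 16-bit private ASN range (64512-65534)

# Private ASNs are meant for internal networks that do not peer directly on the public internet

# The private ranges are reserved by RFC 6996

# When connecting to the public internet, replace it with a registered public ASN

# Ranges to keep in mind for production:

# Public ASN: assigned by a registry, from 1-64495 or the 32-bit space (for internet peering)

# Private ASN: 64512-65534, plus 4200000000-4294967294 for 32-bit ASNs (internal networks)

# Reserved ASN: 0, 65535, and 4294967295; 64496-64511 and 65536-65551 are set aside for documentation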
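
The ranges in the comments above are easy to mix up, so here is a small sketch that classifies an AS number. The function name asn_class is hypothetical, and the classification is simplified: anything outside the special ranges listed here is reported as "public", even though some of those blocks may be reserved or unallocated in the IANA registry.

```shell
#!/bin/sh
# asn_class <asn> (hypothetical helper)
# Prints one of: private, documentation, reserved, as-trans, public, invalid.
asn_class() (
  asn=$1
  # Only plain decimal digits are accepted.
  case $asn in
    ''|*[!0-9]*) echo invalid; exit 0 ;;
  esac
  if [ "${#asn}" -gt 10 ] || [ "$asn" -gt 4294967295 ]; then
    echo invalid            # larger than the 32-bit ASN space
  elif [ "$asn" -eq 0 ] || [ "$asn" -eq 65535 ] || [ "$asn" -eq 4294967295 ]; then
    echo reserved
  elif [ "$asn" -eq 23456 ]; then
    echo as-trans           # placeholder used for 16-bit/32-bit ASN transition
  elif { [ "$asn" -ge 64496 ] && [ "$asn" -le 64511 ]; } ||
       { [ "$asn" -ge 65536 ] && [ "$asn" -le 65551 ]; }; then
    echo documentation
  elif { [ "$asn" -ge 64512 ] && [ "$asn" -le 65534 ]; } ||
       { [ "$asn" -ge 4200000000 ] && [ "$asn" -le 4294967294 ]; }; then
    echo private            # RFC 6996 private-use ranges
  else
    echo public             # simplification, see the note above
  fi
)

asn_class 65000   # the router's AS in this lab
asn_class 65001   # the AS used on the Cilium side
```

Both lab ASNs come out as private, which is why they are fine for this closed environment but would need replacing before peering with the internet.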

root@router:~# vtysh -c 'show ip bgp'

BGP table version is 1, local router ID is 192.168.10.200, vrf id 0

Default local pref 100, local AS 65000

Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

i internal, r RIB-failure, S Stale, R Removed

Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.10.1.0/24 0.0.0.0 0 32768 i # network advertised locally

Displayed 1 routes and 1 total paths

# Check the router's routing table

root@router:~# ip -c route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100 # default gateway

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100 # VirtualBox NAT network (external access)

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100 # host route from DHCP (NAT gateway)

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100 # host route from DHCP (NAT DNS)

10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200 # network advertised via BGP

10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200 # additional network

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

root@router:~# cat << EOF >> /etc/frr/frr.conf

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

EOF

root@router:~# cat /etc/frr/frr.conf

# default to using syslog. /etc/rsyslog.d/45-frr.conf places the log in

# /var/log/frr/frr.log

#

# Note:

# FRR's configuration shell, vtysh, dynamically edits the live, in-memory

# configuration while FRR is running. When instructed, vtysh will persist the

# live configuration to this file, overwriting its contents. If you want to

# avoid this, you can edit this file manually before starting FRR, or instruct

# vtysh to write configuration to a different file.

log syslog informational

!

router bgp 65000

bgp router-id 192.168.10.200

bgp graceful-restart

no bgp ebgp-requires-policy

bgp bestpath as-path multipath-relax

maximum-paths 4

network 10.10.1.0/24

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

root@router:~# systemctl daemon-reexec && systemctl restart frr

root@router:~# systemctl status frr --no-pager --full

● frr.service - FRRouting

Loaded: loaded (/usr/lib/systemd/system/frr.service; enabled; preset: enabled)

Active: active (running) since Fri 2025-08-15 19:40:37 KST; 7s ago

Docs: https://frrouting.readthedocs.io/en/latest/setup.html

Process: 4913 ExecStart=/usr/lib/frr/frrinit.sh start (code=exited, status=0/SUCCESS)

Main PID: 4925 (watchfrr)

Status: "FRR Operational"

Tasks: 13 (limit: 553)

Memory: 19.3M (peak: 27.0M)

CPU: 76ms

CGroup: /system.slice/frr.service

├─4925 /usr/lib/frr/watchfrr -d -F traditional zebra bgpd staticd

├─4938 /usr/lib/frr/zebra -d -F traditional -A 127.0.0.1 -s 90000000

├─4943 /usr/lib/frr/bgpd -d -F traditional -A 127.0.0.1

└─4950 /usr/lib/frr/staticd -d -F traditional -A 127.0.0.1

Aug 15 19:40:37 router watchfrr[4925]: [YFT0P-5Q5YX] Forked background command [pid 4926]: /usr/lib/frr/watchfrr.sh restart all

...

Aug 15 19:40:37 router systemd[1]: Started frr.service - FRRouting.

Configuring BGP on Cilium

# BGP monitoring and testing: watch the logs live and run a connectivity test

# New terminal 1 (router): leave log monitoring running

journalctl -u frr -f

# New terminal 2 (k8s-ctr): call the service in a loop

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# Label the nodes that should run BGP

kubectl label nodes k8s-ctr k8s-w0 k8s-w1 enable-bgp=true

kubectl get node -l enable-bgp=true

# Cilium BGP configuration: create the BGP advertisement policy and peering resources

cat << EOF | kubectl apply -f -

apiVersion: cilium.io/v2

kind: CiliumBGPAdvertisement

metadata:

  name: bgp-advertisements

  labels:

    advertise: bgp

spec:

  advertisements:

    - advertisementType: "PodCIDR"

---

apiVersion: cilium.io/v2

kind: CiliumBGPPeerConfig # peer settings

metadata:

  name: cilium-peer

spec:

  timers:

    holdTimeSeconds: 9

    keepAliveTimeSeconds: 3

  ebgpMultihop: 2

  gracefulRestart:

    enabled: true

    restartTimeSeconds: 15

  families:

    - afi: ipv4

      safi: unicast

      advertisements:

        matchLabels:

          advertise: "bgp"

---

apiVersion: cilium.io/v2

kind: CiliumBGPClusterConfig # groups the peers into a cluster-wide configuration

metadata:

  name: cilium-bgp

spec:

  nodeSelector:

    matchLabels:

      "enable-bgp": "true" # apply BGP on nodes carrying this label

  bgpInstances:

  - name: "instance-65001"

    localASN: 65001

    peers:

    - name: "tor-switch"