Introduction

Hello! This is Devlos.

Continuing from the previous post, this post covers the K8S Upgrade half of "Kubeadm & K8S Upgrade", the week 3 topic of the K8S Deploy study hosted by the CloudNet@ community.

Reading the previous post first should make this one easier to follow.

Reference - https://devlos.tistory.com/116

[Week 3 - K8S Deploy] Kubeadm & K8S Upgrade 1/2 (26.01.23)


K8s version skew policy

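Supported versions (patch support policy)

- Kubernetes versions take the form x.y.z (major.minor.patch), and the project maintains release branches for the three most recent minor releases (e.g., 1.35, 1.34, 1.33).

- 1.19 and later: roughly one year of patch support

- 1.18 and earlier: roughly nine months of patch support

- Important fixes, including security fixes, may be backported to those three branches where feasible; patches ship in regular releases and, when necessary, in emergency releases.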

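Allowed version skew per component (Supported version skew)

kube-apiserver

- In HA clusters, the newest and oldest kube-apiserver instances must be within one minor version of each other

kubelet

- kubelet must not be newer than kube-apiserver

- kubelet may be up to three minor versions older than kube-apiserver

- Exception: a kubelet older than 1.25 may be at most two minor versions older

- In HA clusters with mixed apiserver versions (e.g., 1.32/1.31), the allowed range narrows: kubelet 1.31, 1.30, and 1.29 are supported, but 1.32 is not, because it would be newer than the 1.31 apiserver

kube-proxy

- kube-proxy must not be newer than kube-apiserver

- kube-proxy may be up to three minor versions older than kube-apiserver (kube-proxy older than 1.25: at most two)

- kube-proxy may be up to three minor versions older or newer than the kubelet on the same node (kube-proxy older than 1.25: ±2)

- In HA clusters with mixed apiserver versions, the allowed range narrows

kube-controller-manager / kube-scheduler / cloud-controller-manager

- Must not be newer than the kube-apiserver instances they talk to

- Expected to match the kube-apiserver minor version, but may be up to one minor version older to allow live upgrades

- In HA clusters with mixed apiserver versions, where these components may reach any apiserver instance (e.g., through a load balancer), the allowed range narrows

kubectl

- Supported within one minor version (older or newer) of kube-apiserver

- In HA clusters with mixed apiserver versions, the allowed range narrows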

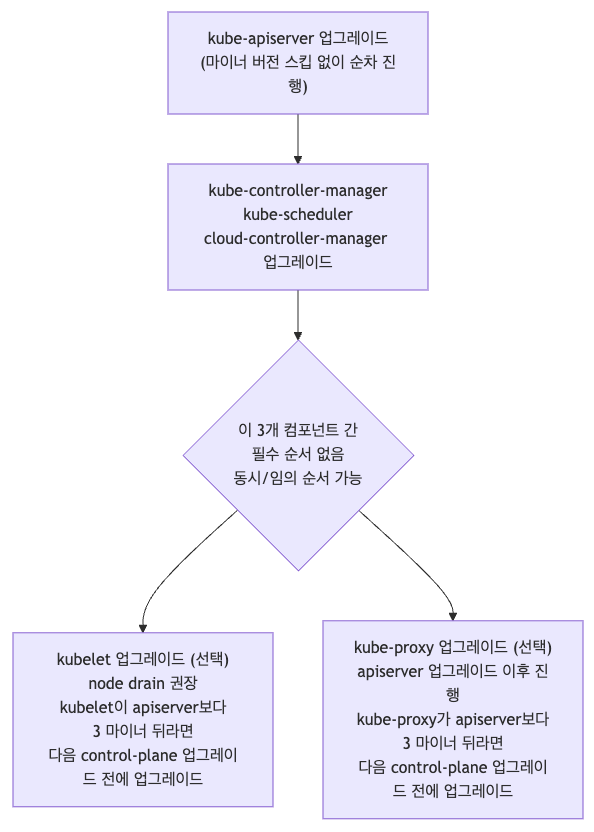
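The kubelet and kubectl rules above reduce to simple arithmetic on minor versions. The sketch below encodes them as Bash functions; the function names are hypothetical, it assumes every version has major version 1, and for HA clusters it checks the kubelet against both the oldest and the newest kube-apiserver, which reproduces the documented 1.32/1.31 example (kubelet 1.31, 1.30, and 1.29 supported, 1.32 not).

```shell
#!/usr/bin/env bash
# Hypothetical helpers encoding the skew rules above (major version 1 assumed).

# minor_of VERSION: v1.32.11 -> 32, 1.31 -> 31
minor_of() {
  local v=${1#v}
  v=${v#*.}
  echo "${v%%.*}"
}

# kubelet_skew_ok KUBELET APISERVER [APISERVER...]
# Succeeds when the kubelet is not newer than the oldest apiserver and is at most
# 3 minors (2 if the kubelet is older than 1.25) behind the newest apiserver.
kubelet_skew_ok() {
  local kub oldest newest m v max=3
  kub=$(minor_of "$1"); shift
  oldest=$(minor_of "$1"); newest=$oldest
  for v in "$@"; do
    m=$(minor_of "$v")
    if [ "$m" -lt "$oldest" ]; then oldest=$m; fi
    if [ "$m" -gt "$newest" ]; then newest=$m; fi
  done
  if [ "$kub" -lt 25 ]; then max=2; fi
  [ "$kub" -le "$oldest" ] && [ $((newest - kub)) -le "$max" ]
}

# kubectl_skew_ok KUBECTL APISERVER: kubectl within one minor, older or newer.
kubectl_skew_ok() {
  local d=$(( $(minor_of "$1") - $(minor_of "$2") ))
  [ "${d#-}" -le 1 ]
}

# Usage: the HA example from the policy (apiservers at 1.32 and 1.31)
for k in 1.32 1.31 1.30 1.29 1.28; do
  if kubelet_skew_ok "$k" 1.32 1.31; then
    echo "kubelet $k: supported"
  else
    echo "kubelet $k: not supported"
  fi
done
```

kube-proxy and the controller-manager/scheduler rules follow the same pattern and could be added the same way.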

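Recommended upgrade order (e.g., 1.31 → 1.32)

- Recommended before you start

- First upgrade to the latest patch release of the current minor version

- Upgrade to the latest patch release of the target minor version as well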
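kubeadm does not support skipping minor versions, which is why the plan further down walks 1.32 → 1.33 → 1.34 instead of jumping straight to 1.34. A minimal sketch, assuming major version 1 and using a hypothetical function name, that lists every minor hop between two versions:

```shell
#!/usr/bin/env bash
# upgrade_path FROM TO: print each minor release to step through, one per line.
# Hypothetical helper; assumes major version 1 (e.g. 1.32.11 -> 1.34).
upgrade_path() {
  local from=${1#v} to=${2#v} m
  from=${from#*.}; from=${from%%.*}   # keep only the minor number
  to=${to#*.};     to=${to%%.*}
  for ((m = from + 1; m <= to; m++)); do
    echo "1.$m"
  done
}

upgrade_path 1.32.11 1.34
```

Each printed minor is one full round of control-plane and worker upgrades, using the latest patch release of that minor as recommended above.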

Upgrade order

Upgrade considerations

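Upgrade methods and strategies

- In-place upgrade

- Uses the Kubernetes version skew policy to raise versions incrementally, Control Plane first and Worker Nodes after.

- This is the most common strategy in practice for on-premises environments: you can upgrade the Control Plane first and postpone Worker node upgrades until the maximum allowed version skew is reached.

- Blue-Green upgrade

- Runs the old k8s cluster (e.g., 100 nodes) and a new k8s cluster (100 nodes) side by side and switches traffic over to the new cluster.

- On-premises, this means provisioning a second cluster of the same size, so it is often impractical or impossible.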

In-place upgrade procedure

- Preparation

→ Upgrade Control Plane nodes one at a time

→ Verify Control Plane operation

→ Upgrade Worker (Data Plane) nodes one at a time

→ Verify overall cluster operation

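Things to check before and during the upgrade

- Addon / application compatibility

- Confirm that addons such as the CNI and CSI, as well as application pods, are compatible with the new K8S version's components (apiserver, kube-proxy, etc.).

- In particular, if you rely on specific Kubernetes API resource versions, make sure they have not been changed or removed.

- OS / CRI (containerd) / kubelet combination

- Confirm that the combination of OS, CRI (containerd), and kubelet (plus kubectl and kubeadm) falls within an officially supported matrix.

- CI/CD pipelines

- Test that existing deployment pipelines (CI/CD, deployment tools) still work unchanged against the new Kubernetes version.

- Here too, double-check whether any API resource versions you use have changed.

- etcd data

- Back up etcd before the upgrade; this is mandatory.

- Kubernetes generally keeps the etcd data format backward compatible, but always have a backup strategy ready in case something goes wrong.

- Zero-downtime service

- While network components such as kube-proxy and the CNI are being updated, use monitoring to confirm that service traffic is not interrupted.

- Where possible, replace components in a rolling fashion and check for failures as you go.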
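The API version check can be partly automated by scanning the manifests you deploy. The sketch below is a hypothetical helper with a deliberately partial table of removals (batch/v1beta1 and policy/v1beta1 in 1.25, autoscaling/v2beta2 in 1.26, flowcontrol.apiserver.k8s.io/v1beta2 in 1.29 and v1beta3 in 1.32). The official Deprecated API Migration Guide remains the source of truth, and objects already stored in the cluster or rendered by Helm charts need to be checked separately.

```shell
#!/usr/bin/env bash
# Partial, illustrative list: "<removed in 1.N> <apiVersion> <kinds>".
REMOVED_APIS='
25 batch/v1beta1 CronJob
25 policy/v1beta1 PodDisruptionBudget/PodSecurityPolicy
26 autoscaling/v2beta2 HorizontalPodAutoscaler
29 flowcontrol.apiserver.k8s.io/v1beta2 FlowSchema/PriorityLevelConfiguration
32 flowcontrol.apiserver.k8s.io/v1beta3 FlowSchema/PriorityLevelConfiguration
'

# find_removed_apis TARGET_MINOR FILE...
# Prints "FILE: apiVersion (kinds, removed in 1.N)" for each listed apiVersion
# that a manifest still uses and that is gone by the target minor version.
find_removed_apis() {
  local target=$1; shift
  local minor api kind f
  while read -r minor api kind; do
    [ -z "$minor" ] && continue
    [ "$minor" -gt "$target" ] && continue
    for f in "$@"; do
      # Strip whitespace so "apiVersion:  x" and "apiVersion: x" both match.
      if sed 's/[[:space:]]//g' "$f" | grep -qxF "apiVersion:$api"; then
        echo "$f: $api ($kind, removed in 1.$minor)"
      fi
    done
  done <<< "$REMOVED_APIS"
}

# Usage (hypothetical manifest directory): find_removed_apis 34 manifests/*.yaml
```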

Planning the upgrade

0. Assess the current state

Check the version of each component.

Target version: K8S 1.28 ⇒ 1.32!

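1. Review version compatibility

- Check the kernel version required by K8S 1.32 (kubelet, apiserver, ...): e.g., using user namespaces requires kernel 6.5 or later - Docs

- Research containerd versions: e.g., CRI support for user namespaces requires containerd v2.0 and runc v1.2 or later - Docs

- Check compatible K8S versions - Docs

- Check the kernel version required by the CNI (Cilium): e.g., BPF-based host routing requires kernel 5.10 or later - Docs

- Check which K8S versions the CNI supports - Docs

- CSI: check compatibility if you plan to upgrade the CSI driver

- Review application requirements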
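Most of these checks boil down to "is version A at least version B?". Plain string comparison gets that wrong (as text, 5.4 sorts after 5.10), so a small helper built on GNU `sort -V` is handy. The function name is hypothetical, and trimming the `uname -r` output at the first `-` is an assumption about the kernel release string format.

```shell
#!/usr/bin/env bash
# version_ge HAVE NEED: succeed when dotted version HAVE >= NEED (GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Usage on a node: compare the running kernel against the requirements above.
kernel=$(uname -r)
kernel=${kernel%%-*}        # keep only the numeric part before the first '-'
for need in 5.10 6.5; do
  if version_ge "$kernel" "$need"; then
    echo "kernel $kernel >= $need"
  else
    echo "kernel $kernel < $need"
  fi
done
```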

2. Decide on the upgrade method

- in-place vs blue-green

- A different method per environment (Dev / Stg / Prd) vs. the same method everywhere

3. Draw up an upgrade plan for the chosen method

Example: in-place chosen

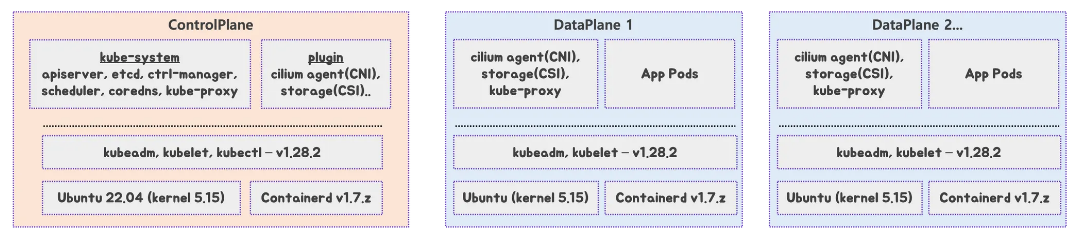

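The figure above summarizes, on a single page, how the Control Plane and Data Plane roles are separated during an in-place upgrade and the order in which the work proceeds.

- Left box (Control Plane)

- Shows, as a layered stack, the core components in the kube-system namespace (apiserver, etcd, controller-manager, scheduler, coredns, kube-proxy) and the CNI/CSI plugins, with the OS (Ubuntu), CRI (containerd), and kubeadm/kubelet/kubectl versions underneath.

- The numbers ① to ⑤ walk through the Control Plane node's current stack from top to bottom; this combination is what gets upgraded first.

- Middle box (DataPlane 1)

- The top shows CNI/CSI/kube-proxy; the bottom shows the worker node's OS, containerd, and kubeadm/kubelet versions.

- The drain icon (⑥) means the node is drained first (marked unschedulable and its pods evicted to other nodes) so that the OS, CRI, kubelet, and so on can be upgraded safely.

- Once the upgrade is finished, uncordon (⑩) makes the node schedulable again.

- Right box (DataPlane 2 …)

- Same structure as DataPlane 1: the remaining worker nodes repeat the drain → upgrade → uncordon pattern one at a time.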

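4. Preparation

- (Optional) Write out the detailed commands or scripts for each task, plus rollback commands or scripts in case a task fails or has to be aborted

- Set up monitoring

- (1) Back up etcd

- (2) Upgrade the CNI (Cilium)

5. Upgrade CP nodes one at a time: 1.32 → 1.33 → 1.34

- One node at a time, 1.32 → 1.33

- (3) Upgrade the Ubuntu OS (kernel) → reboot

- (4) Upgrade containerd → restart the containerd service

- (5) Upgrade kubeadm → install the upgraded kubelet/kubectl → restart kubelet

- One node at a time, 1.33 → 1.34

- (5) Upgrade kubeadm → install the upgraded kubelet/kubectl → restart kubelet

- When done, check CP node status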
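Step (5) for a single control-plane node and a single minor hop can be written down as a runbook. The sketch below only prints the commands so they can be reviewed and then run by hand; it leaves out the OS and containerd steps (3) and (4), assumes the lab's RPM-based setup (dnf plus the per-minor pkgs.k8s.io repo in /etc/yum.repos.d/kubernetes.repo), and follows the kubeadm upgrade guide: `kubeadm upgrade apply` on the first control-plane node, `kubeadm upgrade node` on any others. The function name and the `1.33.7` version in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# cp_runbook NODE VERSION [apply|node]
# Prints (does not execute) the step (5) commands for one control-plane node and
# one minor hop. Hypothetical helper; assumes the lab's dnf + pkgs.k8s.io setup.
cp_runbook() {
  local node=$1 ver=$2 mode=${3:-apply}
  local minor=${ver%.*}            # 1.33.7 -> 1.33 (selects the pkgs.k8s.io repo)
  local upgrade
  if [ "$mode" = apply ]; then
    upgrade="kubeadm upgrade plan && kubeadm upgrade apply -y v$ver"   # first CP node
  else
    upgrade="kubeadm upgrade node"                                      # other CP nodes
  fi
  cat <<EOF
# [$node] control plane -> v$ver
sed -i 's#/v1\.[0-9]*/rpm/#/v$minor/rpm/#' /etc/yum.repos.d/kubernetes.repo
dnf install -y kubeadm-'$ver-*' --disableexcludes=kubernetes
$upgrade
dnf install -y kubelet-'$ver-*' kubectl-'$ver-*' --disableexcludes=kubernetes
systemctl daemon-reload && systemctl restart kubelet
kubectl get nodes -o wide
EOF
}

# Usage: 1.33.7 is a placeholder for the latest 1.33 patch release.
cp_runbook k8s-ctr 1.33.7
```

Printing instead of executing keeps a human in the loop between `kubeadm upgrade plan` and `apply`, which is where version and certificate warnings show up.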

6. Upgrade DP nodes one at a time: 1.32 → 1.33 → 1.34

- One node at a time, 1.32 → 1.33

- (6) Drain the node, confirm its pods were evicted, and monitor for any service interruption

- (7) Upgrade the Ubuntu OS (kernel) → reboot

- (8) Upgrade containerd → restart the containerd service

- (9) Upgrade kubeadm → install the upgraded kubelet → restart kubelet

- One node at a time, 1.33 → 1.34

- (9) Upgrade kubeadm → install the upgraded kubelet → restart kubelet

- When done, check DP node status ⇒ (10) uncordon the node and check its status again
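The same idea for one worker node and one minor hop, with the drain (⑥) and uncordon (⑩) bracketing the kubeadm/kubelet work. This is another print-only sketch under the same dnf and pkgs.k8s.io assumptions, with the OS and containerd steps (7) and (8) left out. `--ignore-daemonsets` is needed because flannel, kube-proxy, and node-exporter run as DaemonSets; `--delete-emptydir-data` assumes that losing the emptyDir contents of evicted pods is acceptable.

```shell
#!/usr/bin/env bash
# worker_runbook NODE VERSION
# Prints (does not execute) steps (6), (9) and (10) for one worker and one minor
# hop. Hypothetical helper; same dnf + pkgs.k8s.io assumptions as the lab.
worker_runbook() {
  local node=$1 ver=$2
  local minor=${ver%.*}            # 1.33.7 -> 1.33
  cat <<EOF
# [k8s-ctr] (6) cordon the node and evict its pods
kubectl drain $node --ignore-daemonsets --delete-emptydir-data
# [$node] (9) kubeadm first, then the node's local config, then kubelet
sed -i 's#/v1\.[0-9]*/rpm/#/v$minor/rpm/#' /etc/yum.repos.d/kubernetes.repo
dnf install -y kubeadm-'$ver-*' --disableexcludes=kubernetes
kubeadm upgrade node
dnf install -y kubelet-'$ver-*' --disableexcludes=kubernetes
systemctl daemon-reload && systemctl restart kubelet
# [k8s-ctr] (10) make the node schedulable again
kubectl uncordon $node
EOF
}

# Usage: repeat per worker (k8s-w1, then k8s-w2) and per minor hop.
worker_runbook k8s-w1 1.33.7
```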

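7. First overall check of K8S

- Confirm application pods are working, etc.

8. Upgrade CP nodes one at a time: 1.34

- One node at a time, repeated for each version

- Upgrade kubeadm → install the upgraded kubelet/kubectl → restart kubelet

- When done, check CP node status

9. Upgrade DP nodes one at a time: 1.34

- One node at a time, repeated for each version

- Drain the node, confirm its pods were evicted, and monitor for any service interruption

- Upgrade kubeadm → install the upgraded kubelet → restart kubelet

- When done, check DP node status ⇒ uncordon the node and check its status again

10. Second overall check of K8S

- Confirm application pods are working, etc.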
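For the overall checks in steps 7 and 10, a quick filter over `kubectl get pod -A --no-headers` helps spot anything that did not come back cleanly. A minimal sketch with a hypothetical function name; it assumes the default column layout (NAMESPACE, NAME, READY, STATUS, ...), prints pods that are not Running with all containers ready, and skips Completed pods.

```shell
#!/usr/bin/env bash
# not_ready_pods: reads `kubectl get pod -A --no-headers` output on stdin and
# prints NAMESPACE/NAME for every pod that is not Running with all containers
# ready. Completed pods (finished Jobs) are skipped. Hypothetical helper.
not_ready_pods() {
  awk '{
    split($3, ready, "/")            # READY column, e.g. "2/3"
    if ($4 == "Completed") next      # STATUS column
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2
  }'
}

# Usage on k8s-ctr:
#   kubectl get pod -A --no-headers | not_ready_pods
#   kubectl get nodes --no-headers | awk '$2 != "Ready" {print $1}'
```

An empty result from both commands is a reasonable gate before moving on to the next node or the next minor version.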

Lab

- Initial lab versions: k8s 1.32.11

| Item | Version | Version compatibility |
| --- | --- | --- |
| Rocky Linux | 10.0-1.6 | version (kernel 6.12 - k8s_kernel) - kubespray |
| containerd | v2.1.5 | CRI Version (v1), supports k8s 1.32~1.35 - Link |
| runc | v1.3.3 | needs research: https://github.com/opencontainers/runc |
| kubelet, kubeadm, kubectl | v1.32.11 | see the k8s version policy docs - Docs |
| helm | v3.18.6 | supports k8s 1.30.x ~ 1.33.x - Docs |
| flannel cni | v0.27.3 | k8s 1.28 and later - Release |

- Target versions: k8s 1.34.11

| Item | Version | Version compatibility |
| --- | --- | --- |
| Rocky Linux | 10.1-1.4 | version (kernel 6.12 - k8s_kernel) - kubespray |
| containerd | v2.1.5 | CRI Version (v1), supports k8s 1.32~1.35 - Link |
| runc | v1.x.y | needs research: https://github.com/opencontainers/runc |
| kubelet, kubeadm, kubectl | v1.34.x | see the k8s version policy docs - Docs |
| helm | v3.19.x | supports k8s 1.31.x ~ 1.34.x - Docs |
| flannel cni | v0.28.0 | k8s 1.28 and later - Release, Upgrade |

- Preparation & etcd backup

- CNI Upgrade

- OS Upgrade

- Containerd Upgrade

- kubeadm, kubelet, kubectl upgrade → repeat step by step until the target version is reached

- Update the admin kubeconfig

- Worker node upgrade → repeat step by step until the target version is reached

Building the lab environment

Vagrantfile

# Base Image https://portal.cloud.hashicorp.com/vagrant/discover/bento/rockylinux-10.0

BOX_IMAGE = "bento/rockylinux-10.0" # "bento/rockylinux-9"

BOX_VERSION = "202510.26.0"

N = 2 # max number of Worker Nodes

Vagrant.configure("2") do |config|

# ControlPlane Nodes

config.vm.define "k8s-ctr" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "virtualbox" do |vb|

vb.customize ["modifyvm", :id, "--groups", "/K8S-Upgrade-Lab"]

vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]

vb.name = "k8s-ctr"

vb.cpus = 4

vb.memory = 3072 # 2048 2560 3072 4096

vb.linked_clone = true

end

subconfig.vm.host_name = "k8s-ctr"

subconfig.vm.network "private_network", ip: "192.168.10.100"

subconfig.vm.network "forwarded_port", guest: 22, host: "60000", auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "init_cfg.sh"

subconfig.vm.provision "shell", path: "k8s-ctr.sh"

end

# Worker Nodes

(1..N).each do |i|

config.vm.define "k8s-w#{i}" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "virtualbox" do |vb|

vb.customize ["modifyvm", :id, "--groups", "/K8S-Upgrade-Lab"]

vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]

vb.name = "k8s-w#{i}"

vb.cpus = 2

vb.memory = 2048

vb.linked_clone = true

end

subconfig.vm.host_name = "k8s-w#{i}"

subconfig.vm.network "private_network", ip: "192.168.10.10#{i}"

subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "init_cfg.sh"

subconfig.vm.provision "shell", path: "k8s-w.sh"

end

end

end

init_cfg.sh: (Update) added a fix for VMs that cannot reach the outside network (ping 8.8.8.8 fails) and added removal of the swap partition

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Change Timezone and Enable NTP"

timedatectl set-local-rtc 0

timedatectl set-timezone Asia/Seoul

echo "[TASK 2] Disable firewalld and selinux"

systemctl disable --now firewalld >/dev/null 2>&1

setenforce 0

sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

echo "[TASK 3] Disable and turn off SWAP & Delete swap partitions"

swapoff -a

sed -i '/swap/d' /etc/fstab

sfdisk --delete /dev/sda 2

partprobe /dev/sda

echo "[TASK 4] Config kernel & module"

cat << EOF > /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

modprobe overlay >/dev/null 2>&1

modprobe br_netfilter >/dev/null 2>&1

cat << EOF >/etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system >/dev/null 2>&1

echo "[TASK 5] Setting Local DNS Using Hosts file"

sed -i '/^127\.0\.\(1\|2\)\.1/d' /etc/hosts

cat << EOF >> /etc/hosts

192.168.10.100 k8s-ctr

192.168.10.101 k8s-w1

192.168.10.102 k8s-w2

EOF

echo "[TASK 6] Delete default routing - enp0s9 NIC" # requires setenforce 0 (done in TASK 2)

nmcli connection modify enp0s9 ipv4.never-default yes

nmcli connection up enp0s9 >/dev/null 2>&1

echo "[TASK 7] Install Containerd"

dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo >/dev/null 2>&1

dnf install -y -q containerd.io-2.1.5-1.el10

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

systemctl daemon-reload

systemctl enable --now containerd >/dev/null 2>&1

echo "[TASK 8] Install kubeadm kubelet kubectl"

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.32/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.32/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

dnf install -y -q kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable --now kubelet >/dev/null 2>&1

cat << EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

EOF

echo ">>>> Initial Config End <<<<"

k8s-ctr.sh

#!/usr/bin/env bash

echo ">>>> K8S Controlplane config Start <<<<"

echo "[TASK 1] Initial Kubernetes"

cat << EOF > kubeadm-init.yaml
apiVersion: kubeadm.k8s.io/v1beta4
kind: InitConfiguration
bootstrapTokens:
- token: "123456.1234567890123456"
  ttl: "0s"
  usages:
  - signing
  - authentication
nodeRegistration:
  kubeletExtraArgs:
    - name: node-ip
      value: "192.168.10.100"
  criSocket: "unix:///run/containerd/containerd.sock"
localAPIEndpoint:
  advertiseAddress: "192.168.10.100"
---
apiVersion: kubeadm.k8s.io/v1beta4
kind: ClusterConfiguration
kubernetesVersion: "1.32.11"
networking:
  podSubnet: "10.244.0.0/16"
  serviceSubnet: "10.96.0.0/16"
controllerManager:
  extraArgs:
    - name: "bind-address"
      value: "0.0.0.0"
scheduler:
  extraArgs:
    - name: "bind-address"
      value: "0.0.0.0"
etcd:
  local:
    extraArgs:
      - name: "listen-metrics-urls"
        value: "http://127.0.0.1:2381,http://192.168.10.100:2381"
EOF

kubeadm init --config="kubeadm-init.yaml"

echo "[TASK 2] Setting kube config file"

mkdir -p /root/.kube

cp -i /etc/kubernetes/admin.conf /root/.kube/config

chown $(id -u):$(id -g) /root/.kube/config

echo "[TASK 3] Install Helm"

curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | DESIRED_VERSION=v3.18.6 bash >/dev/null 2>&1

echo "[TASK 4] Install kubecolor"

dnf install -y -q 'dnf-command(config-manager)' >/dev/null 2>&1

dnf config-manager --add-repo https://kubecolor.github.io/packages/rpm/kubecolor.repo >/dev/null 2>&1

dnf install -y -q kubecolor >/dev/null 2>&1

echo "[TASK 5] Install Kubectx & Kubens"

dnf install -y -q git >/dev/null 2>&1

git clone https://github.com/ahmetb/kubectx /opt/kubectx >/dev/null 2>&1

ln -s /opt/kubectx/kubens /usr/local/bin/kubens

ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

echo "[TASK 6] Install Kubeps & Setting PS1"

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1 >/dev/null 2>&1

cat << "EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=true

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab" >/dev/null 2>&1

echo "[TASK 7] Install Flannel CNI"

/usr/local/bin/helm repo add flannel https://flannel-io.github.io/flannel >/dev/null 2>&1

kubectl create namespace kube-flannel >/dev/null 2>&1

cat << EOF > flannel.yaml
podCidr: "10.244.0.0/16"
flannel:
  cniBinDir: "/opt/cni/bin"
  cniConfDir: "/etc/cni/net.d"
  args:
  - "--ip-masq"
  - "--kube-subnet-mgr"
  - "--iface=enp0s9"
  backend: "vxlan"
EOF

/usr/local/bin/helm install flannel flannel/flannel --namespace kube-flannel --version 0.27.3 -f flannel.yaml >/dev/null 2>&1

echo "[TASK 8] Source the completion"

echo 'source <(kubectl completion bash)' >> /etc/profile

echo 'source <(kubeadm completion bash)' >> /etc/profile

echo "[TASK 9] Alias kubectl to k"

echo 'alias k=kubectl' >> /etc/profile

echo 'alias kc=kubecolor' >> /etc/profile

echo 'complete -o default -F __start_kubectl k' >> /etc/profile

echo "[TASK 10] Install Metrics-server"

/usr/local/bin/helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/ >/dev/null 2>&1

/usr/local/bin/helm upgrade --install metrics-server metrics-server/metrics-server --set 'args[0]=--kubelet-insecure-tls' -n kube-system >/dev/null 2>&1

echo "sudo su -" >> /home/vagrant/.bashrc

echo ">>>> K8S Controlplane Config End <<<<"

k8s-w.sh

#!/usr/bin/env bash

echo ">>>> K8S Node config Start <<<<"

echo "[TASK 1] K8S Controlplane Join"

NODEIP=$(ip -4 addr show enp0s9 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

cat << EOF > kubeadm-join.yaml
apiVersion: kubeadm.k8s.io/v1beta4
kind: JoinConfiguration
discovery:
  bootstrapToken:
    token: "123456.1234567890123456"
    apiServerEndpoint: "192.168.10.100:6443"
    unsafeSkipCAVerification: true
nodeRegistration:
  criSocket: "unix:///run/containerd/containerd.sock"
  kubeletExtraArgs:
    - name: node-ip
      value: "$NODEIP"
EOF

kubeadm join --config="kubeadm-join.yaml"

echo "sudo su -" >> /home/vagrant/.bashrc

echo ">>>> K8S Node config End <<<<"

Deploy the lab environment as follows.

# Create a working directory for the lab

mkdir k8s-upgrade

cd k8s-upgrade

# Download the files

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-upgrade/Vagrantfile

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-upgrade/init_cfg.sh

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-upgrade/k8s-ctr.sh

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-upgrade/k8s-w.sh

# Deploy the lab environment

vagrant up

vagrant status

1. Preparation

(TS) Turning SWAP off on the Rocky Linux 10 Vagrant image: the fix is already included in init_cfg.sh

echo "[TASK 3] Disable and turn off SWAP & Delete swap partitions"

swapoff -a

sed -i '/swap/d' /etc/fstab

sfdisk --delete /dev/sda 2

partprobe /dev/sda >/dev/null 2>&1

kubens default

# Context "HomeLab" modified.

# Active namespace is "default".

# Check swap usage

free -h

# total used free shared buff/cache available

# Mem: 2.8Gi 869Mi 529Mi 19Mi 1.5Gi 1.9Gi

# Swap: 0B 0B 0B

lsblk

# ├─sda2 8:2 0 3.8G 0 part   # previously used for swap; already swapped off by the script

# The swap entry has already been removed

cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Oct 23 21:07:22 2025

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

# UUID=f3394b07-cf85-4a3f-934c-25ab2eca1335 / xfs defaults 0 0

# UUID=9071-8EA9 /boot/efi vfat umask=0077,shortname=winnt 0 2

# #VAGRANT-BEGIN

# # The contents below are automatically generated by Vagrant. Do not modify.

# #VAGRANT-END

# On w2: check the running containers

crictl ps

# CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD NAMESPACE

# 6e3fe7d991299 d84558c0144bc 19 minutes ago Running kube-flannel 0 3bd833ad6a093 kube-flannel-ds-txg4g kube-flannel

# 01c59247a7c6b dcdb790dc2bfe 19 minutes ago Running kube-proxy 0 570d4d428a0ff kube-proxy-pcshq kube-system

free -h

# total used free shared buff/cache available

# Mem: 1.8Gi 360Mi 1.1Gi 13Mi 410Mi 1.4Gi

# Swap: 0B 0B 0B

swapon --show

lsblk

# NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

# sda 8:0 0 64G 0 disk

# ├─sda1 8:1 0 600M 0 part /boot/efi

# └─sda3 8:3 0 59.6G 0 part /
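Because kubelet refuses to start with swap enabled under its default settings, it is worth confirming that swap stays off across reboots and not just right now. A small sketch with hypothetical function names: `swapon --show --noheadings` covers the running state, and an awk filter looks for uncommented fstab entries whose filesystem type is `swap`.

```shell
#!/usr/bin/env bash
# fstab_active_swap [FILE]: print uncommented fstab entries of type swap.
fstab_active_swap() {
  awk '$1 !~ /^#/ && $3 == "swap"' "${1:-/etc/fstab}"
}

# swap_fully_off [FILE]: succeed only if no swap is active now and none is
# configured in fstab to come back at boot.
swap_fully_off() {
  [ -z "$(swapon --show --noheadings 2>/dev/null)" ] &&
    [ -z "$(fstab_active_swap "${1:-/etc/fstab}")" ]
}

if swap_fully_off; then
  echo "swap: off (runtime and fstab)"
else
  echo "swap: still active or configured"
fi
```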

Install kube-prometheus-stack

# Add the repo

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# Create the values file

cat <<EOT > monitor-values.yaml
prometheus:
  prometheusSpec:
    scrapeInterval: "20s"
    evaluationInterval: "20s"
    externalLabels:
      cluster: "myk8s-cluster"
  service:
    type: NodePort
    nodePort: 30001

grafana:
  defaultDashboardsTimezone: Asia/Seoul
  adminPassword: prom-operator
  service:
    type: NodePort
    nodePort: 30002

alertmanager:
  enabled: true

defaultRules:
  create: true

kubeProxy:
  enabled: false

prometheus-windows-exporter:
  prometheus:
    monitor:
      enabled: false
EOT

cat monitor-values.yaml

# Deploy

helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 80.13.3 \

-f monitor-values.yaml --create-namespace --namespace monitoring

# Verify

helm list -n monitoring

# NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# kube-prometheus-stack monitoring 1 2026-01-22 01:13:21.365983377 +0900 KST deployed kube-prometheus-stack-80.13.3 v0.87.1

kubectl get pod,svc,ingress,pvc -n monitoring

# NAME READY STATUS RESTARTS AGE

# pod/alertmanager-kube-prometheus-stack-alertmanager-0 2/2 Running 0 5m5s

# pod/kube-prometheus-stack-grafana-5cb7c586f9-zr7vj 3/3 Running 0 5m10s

# pod/kube-prometheus-stack-kube-state-metrics-7846957b5b-thrsg 1/1 Running 0 5m10s

# pod/kube-prometheus-stack-operator-584f446c98-7hbbc 1/1 Running 0 5m10s

# pod/kube-prometheus-stack-prometheus-node-exporter-46jr7 1/1 Running 0 5m10s

# pod/kube-prometheus-stack-prometheus-node-exporter-779lg 1/1 Running 0 5m10s

# pod/kube-prometheus-stack-prometheus-node-exporter-nghcp 1/1 Running 0 5m10s

# pod/prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 0 5m5s

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

# service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 5m5s

# service/kube-prometheus-stack-alertmanager ClusterIP 10.96.243.152 <none> 9093/TCP,8080/TCP 5m10s

# service/kube-prometheus-stack-grafana NodePort 10.96.155.130 <none> 80:30002/TCP 5m10s

# service/kube-prometheus-stack-kube-state-metrics ClusterIP 10.96.153.91 <none> 8080/TCP 5m10s

# service/kube-prometheus-stack-operator ClusterIP 10.96.77.73 <none> 443/TCP 5m10s

# service/kube-prometheus-stack-prometheus NodePort 10.96.58.137 <none> 9090:30001/TCP,8080:31295/TCP 5m10s

# service/kube-prometheus-stack-prometheus-node-exporter ClusterIP 10.96.118.31 <none> 9100/TCP 5m10s

# service/prometheus-operated ClusterIP None <none> 9090/TCP 5m5s

# - All components (Prometheus, Grafana, Alertmanager, kube-state-metrics, node-exporter, etc.) were deployed successfully.

# - Grafana and Prometheus are reachable from outside the cluster via NodePort (ports 30002 and 30001, respectively).

kubectl get prometheus,servicemonitors,alertmanagers -n monitoring

# NAME VERSION DESIRED READY RECONCILED AVAILABLE AGE

# prometheus.monitoring.coreos.com/kube-prometheus-stack-prometheus v3.9.1 1 1 True True 5m13s

#

# NAME AGE

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-alertmanager 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-apiserver 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-coredns 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-grafana 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kube-controller-manager 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kube-etcd 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kube-scheduler 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kube-state-metrics 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kubelet 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-operator 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-prometheus 5m12s

# servicemonitor.monitoring.coreos.com/kube-prometheus-stack-prometheus-node-exporter 5m12s

#

# NAME VERSION REPLICAS READY RECONCILED AVAILABLE AGE

# alertmanager.monitoring.coreos.com/kube-prometheus-stack-alertmanager v0.30.0 1 1 True True 5m13s

# - The Prometheus custom resource created through the Prometheus Operator runs Prometheus v3.9.1.

# - ServiceMonitors are set up to scrape metrics from cluster components such as apiserver, coredns, kube-controller-manager, kube-scheduler, etcd, and kubelet.

# - Alertmanager is also deployed and provides alerting.

kubectl get crd | grep monitoring

# alertmanagerconfigs.monitoring.coreos.com 2026-01-21T16:13:20Z

# alertmanagers.monitoring.coreos.com 2026-01-21T16:13:20Z

# podmonitors.monitoring.coreos.com 2026-01-21T16:13:20Z

# probes.monitoring.coreos.com 2026-01-21T16:13:20Z

# prometheusagents.monitoring.coreos.com 2026-01-21T16:13:20Z

# prometheuses.monitoring.coreos.com 2026-01-21T16:13:20Z

# prometheusrules.monitoring.coreos.com 2026-01-21T16:13:20Z

# scrapeconfigs.monitoring.coreos.com 2026-01-21T16:13:20Z

# servicemonitors.monitoring.coreos.com 2026-01-21T16:13:20Z

# thanosrulers.monitoring.coreos.com 2026-01-21T16:13:21Z

# - Installing the Prometheus Operator created 10 monitoring-related CRDs (Custom Resource Definitions).

# - These CRDs let you manage Prometheus, Alertmanager, ServiceMonitor, PodMonitor, and so on declaratively.

# Open each web UI via NodePort

open http://192.168.10.100:30001 # prometheus

open http://192.168.10.100:30002 # grafana: log in as admin / prom-operator

# Check the Prometheus version

kubectl exec -it sts/prometheus-kube-prometheus-stack-prometheus -n monitoring -c prometheus -- prometheus --version

# prometheus, version 3.9.1

# Check the Grafana version

kubectl exec -it -n monitoring deploy/kube-prometheus-stack-grafana -- grafana --version

# grafana version 12.3.1

Deploy the sample application

# Deploy the sample application

cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - sample-app   # selects app=sample-app pods, not this Deployment's app=webpod pods
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF

# Deploy the curl-pod pod on the k8s-ctr node

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

Check the sample application & call it repeatedly

# Verify the deployment

kubectl get deploy,svc,ep webpod -owide

# NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# deployment.apps/webpod 2/2 2 2 6m54s webpod traefik/whoami app=webpod

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/webpod ClusterIP 10.96.254.19 <none> 80/TCP 6m54s app=webpod

# NAME ENDPOINTS AGE

# endpoints/webpod 10.244.1.5:80,10.244.2.7:80 6m54s

# Call the service repeatedly

kubectl exec -it curl-pod -- curl webpod | grep Hostname

# Hostname: webpod-697b545f57-ngsb7

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# or

SVCIP=$(kubectl get svc webpod -o jsonpath='{.spec.clusterIP}')

while true; do curl -s $SVCIP | grep Hostname; sleep 1; done

ETCD backup

# Check the etcd container version

crictl images | grep etcd

# registry.k8s.io/etcd 3.5.24-0 1211402d28f58 21.9MB

kubectl exec -n kube-system etcd-k8s-ctr -- etcdctl version

# etcdctl version: 3.5.24

# API version: 3.5

# Set the version variable

ETCD_VER=3.5.24

# Set the CPU architecture variable

ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then ARCH=arm64; fi

echo $ARCH

# Download the etcd binaries

curl -L https://github.com/etcd-io/etcd/releases/download/v${ETCD_VER}/etcd-v${ETCD_VER}-linux-${ARCH}.tar.gz -o /tmp/etcd-v${ETCD_VER}.tar.gz

ls /tmp

# Extract the archive

mkdir -p /tmp/etcd-download

tar xzvf /tmp/etcd-v${ETCD_VER}.tar.gz -C /tmp/etcd-download --strip-components=1

# Move the executables (etcdctl, etcdutl)

mv /tmp/etcd-download/etcdctl /usr/local/bin/

mv /tmp/etcd-download/etcdutl /usr/local/bin/

chown root:root /usr/local/bin/etcdctl

chown root:root /usr/local/bin/etcdutl

# Verify the installation

etcdctl version

# etcdctl version: 3.5.24

# API version: 3.5

# Check listen-client-urls

kubectl describe pod -n kube-system etcd-k8s-ctr | grep listen-client-urls

# --listen-client-urls=https://127.0.0.1:2379,https://192.168.10.100:2379


# Set the etcdctl environment variables

export ETCDCTL_API=3 # use the etcd v3 API

export ETCDCTL_CACERT=/etc/kubernetes/pki/etcd/ca.crt

export ETCDCTL_CERT=/etc/kubernetes/pki/etcd/server.crt

export ETCDCTL_KEY=/etc/kubernetes/pki/etcd/server.key

export ETCDCTL_ENDPOINTS=https://127.0.0.1:2379 # listen-client-urls

# Verify

etcdctl endpoint status -w table

# +------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

# | ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

# +------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

# | https://127.0.0.1:2379 | f330bec74ce6cc42 | 3.5.24 | 20 MB | true | false | 2 | 8129 | 8129 | |

# +------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

etcdctl member list -w table

# +------------------+---------+---------+-----------------------------+-----------------------------+------------+

# | ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

# +------------------+---------+---------+-----------------------------+-----------------------------+------------+

# | f330bec74ce6cc42 | started | k8s-ctr | https://192.168.10.100:2380 | https://192.168.10.100:2379 | false |

# +------------------+---------+---------+-----------------------------+-----------------------------+------------+

# Take the backup

mkdir /backup

etcdctl snapshot save /backup/etcd-snapshot-$(date +%F).db

# {"level":"info","ts":"2026-01-22T01:45:32.791732+0900","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/backup/etcd-snapshot-2026-01-22.db.part"}

# {"level":"info","ts":"2026-01-22T01:45:32.797008+0900","logger":"client","caller":"v3@v3.5.24/maintenance.go:212","msg":"opened snapshot stream; downloading"}

# {"level":"info","ts":"2026-01-22T01:45:32.797044+0900","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"https://127.0.0.1:2379"}

# {"level":"info","ts":"2026-01-22T01:45:32.851843+0900","logger":"client","caller":"v3@v3.5.24/maintenance.go:220","msg":"completed snapshot read; closing"}

# {"level":"info","ts":"2026-01-22T01:45:32.880727+0900","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"https://127.0.0.1:2379","size":"20 MB","took":"now"}

# {"level":"info","ts":"2026-01-22T01:45:32.880796+0900","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/backup/etcd-snapshot-2026-01-22.db"}

# Snapshot saved at /backup/etcd-snapshot-2026-01-22.db

# Check the backup file

tree /backup/

# /backup/

# └── etcd-snapshot-2026-01-22.db

# Check the backup result

etcdutl snapshot status /backup/etcd-snapshot-2026-01-22.db

# d6bd3b23, 7314, 1629, 20 MB
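If the backup is repeated before each minor hop, old snapshots pile up in /backup. A sketch of a hypothetical wrapper: `backup_etcd` reuses the `ETCDCTL_*` variables exported above, adds a time component to the file name so several backups on the same day do not overwrite each other, and `prune_snapshots` keeps only the newest KEEP files. Pruning relies on the assumption that the timestamp in the name makes name order equal age order.

```shell
#!/usr/bin/env bash
# prune_snapshots DIR KEEP: delete all but the newest KEEP etcd-snapshot-*.db files.
prune_snapshots() {
  local dir=$1 keep=$2 i
  local -a files
  shopt -s nullglob
  files=("$dir"/etcd-snapshot-*.db)    # pathname expansion returns sorted names
  shopt -u nullglob
  for ((i = 0; i < ${#files[@]} - keep; i++)); do
    rm -f -- "${files[i]}"
    echo "removed ${files[i]}"
  done
}

# backup_etcd [DIR] [KEEP]: snapshot with etcdctl (ETCDCTL_* variables must be
# exported), show the snapshot status, then prune older snapshots.
backup_etcd() {
  local dir=${1:-/backup} keep=${2:-7}
  local file
  file="$dir/etcd-snapshot-$(date +%F-%H%M%S).db"
  mkdir -p "$dir"
  etcdctl snapshot save "$file" || return 1
  etcdutl snapshot status "$file" -w table
  prune_snapshots "$dir" "$keep"
}

# Usage on k8s-ctr: backup_etcd /backup 7
```

A snapshot on the control-plane node's own disk does not help if that disk is lost, so copying it off the node afterwards is worth considering.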

2. Flannel CNI upgrade

Upgrade flannel from v0.27.3 to v0.27.4.

#

crictl images | grep flannel

# ghcr.io/flannel-io/flannel-cni-plugin v1.7.1-flannel1 127562bd9047f 5.14MB

# ghcr.io/flannel-io/flannel v0.27.3 d84558c0144bc 33.1MB

# Check the matching cni-plugin version as well and pull it in advance!

crictl pull ghcr.io/flannel-io/flannel:v0.27.4

crictl pull ghcr.io/flannel-io/flannel-cni-plugin:v1.8.0-flannel1

crictl images | grep flannel

# ghcr.io/flannel-io/flannel-cni-plugin v1.7.1-flannel1 127562bd9047f 5.14MB

# ghcr.io/flannel-io/flannel v0.27.3 d84558c0144bc 33.1MB

# ghcr.io/flannel-io/flannel v0.27.4 24d577aa4188d 33.2MB

# [w1/w2]

crictl pull ghcr.io/flannel-io/flannel:v0.27.4

crictl pull ghcr.io/flannel-io/flannel-cni-plugin:v1.8.0-flannel1

crictl images | grep flannel

# ghcr.io/flannel-io/flannel-cni-plugin v1.7.1-flannel1 127562bd9047f 5.14MB

# ghcr.io/flannel-io/flannel v0.27.3 d84558c0144bc 33.1MB

# ghcr.io/flannel-io/flannel v0.27.4 24d577aa4188d 33.2MB

# Monitoring

## Terminal 1: check whether the calls below get interrupted!

SVCIP=$(kubectl get svc webpod -o jsonpath='{.spec.clusterIP}')

while true; do curl -s $SVCIP | grep Hostname; sleep 1; done

# or

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'
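Rather than eyeballing the loop output in Terminal 1, you can count failures over a fixed window. A sketch with a hypothetical function name: it runs any probe command COUNT times, logs a timestamp for each failure to stderr, and prints a summary at the end. The usage line adds `curl -f` so that HTTP error responses also count as failures; the 120-sample window is an arbitrary choice.

```shell
#!/usr/bin/env bash
# watch_availability INTERVAL COUNT CMD [ARGS...]
# Runs CMD COUNT times, INTERVAL seconds apart; logs each failure with a
# timestamp to stderr and prints "ok=N fail=M" at the end. Hypothetical helper.
watch_availability() {
  local interval=$1 count=$2 i ok=0 fail=0
  shift 2
  for ((i = 1; i <= count; i++)); do
    if "$@" >/dev/null 2>&1; then
      ok=$((ok + 1))
    else
      fail=$((fail + 1))
      echo "$(date +%T) probe #$i failed" >&2
    fi
    sleep "$interval"
  done
  echo "ok=$ok fail=$fail"
}

# Usage on k8s-ctr, in Terminal 1 while flannel rolls out:
#   SVCIP=$(kubectl get svc webpod -o jsonpath='{.spec.clusterIP}')
#   watch_availability 1 120 curl -sf --connect-timeout 1 -o /dev/null "http://$SVCIP"
```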

## Terminal 3: measure how long the kube-flannel pods take to become ready again after restarting

watch -d kubectl get pod -n kube-flannel

# Check the current state before the change

helm list -n kube-flannel

# NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# flannel kube-flannel 1 2026-01-22 00:37:48.802223548 +0900 KST deployed flannel-v0.27.3 v0.27.3

helm get values -n kube-flannel flannel

# USER-SUPPLIED VALUES:
# flannel:
#   args:
#   - --ip-masq
#   - --kube-subnet-mgr
#   - --iface=enp0s9
#   backend: vxlan
#   cniBinDir: /opt/cni/bin
#   cniConfDir: /etc/cni/net.d
# podCidr: 10.244.0.0/16

kubectl get pod -n kube-flannel -o yaml | grep -i image: | sort | uniq

# image: ghcr.io/flannel-io/flannel-cni-plugin:v1.7.1-flannel1

# image: ghcr.io/flannel-io/flannel:v0.27.3

kubectl get ds -n kube-flannel -owide

# NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

# kube-flannel-ds 3 3 3 3 3 <none> 126m kube-flannel ghcr.io/flannel-io/flannel:v0.27.3 app=flannel

# Write a values file that pins the new image version

cat << EOF > flannel.yaml
podCidr: "10.244.0.0/16"
flannel:
  cniBinDir: "/opt/cni/bin"
  cniConfDir: "/etc/cni/net.d"
  args:
  - "--ip-masq"
  - "--kube-subnet-mgr"
  - "--iface=enp0s9"
  backend: "vxlan"
  image:
    tag: v0.27.4
EOF

# Run the helm upgrade: flannel is a DaemonSet, so it is rolled out node by node

helm upgrade flannel flannel/flannel -n kube-flannel -f flannel.yaml --version 0.27.4

kubectl -n kube-flannel rollout status ds/kube-flannel-ds

# Waiting for daemon set "kube-flannel-ds" rollout to finish: 1 out of 3 new pods have been updated...

# Waiting for daemon set "kube-flannel-ds" rollout to finish: 1 out of 3 new pods have been updated...

# Waiting for daemon set "kube-flannel-ds" rollout to finish: 1 out of 3 new pods have been updated...

# Waiting for daemon set "kube-flannel-ds" rollout to finish: 2 out of 3 new pods have been updated...

# Waiting for daemon set "kube-flannel-ds" rollout to finish: 2 out of 3 new pods have been updated...

# Waiting for daemon set "kube-flannel-ds" rollout to finish: 2 of 3 updated pods are available...

# daemon set "kube-flannel-ds" successfully rolled out

# Verify

helm list -n kube-flannel

# NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# flannel kube-flannel 2 2026-01-22 01:52:23.732763563 +0900 KST deployed flannel-v0.27.4 v0.27.4

helm get values -n kube-flannel flannel

# USER-SUPPLIED VALUES:
# flannel:
#   args:
#   - --ip-masq
#   - --kube-subnet-mgr
#   - --iface=enp0s9
#   backend: vxlan
#   cniBinDir: /opt/cni/bin
#   cniConfDir: /etc/cni/net.d
#   image:
#     tag: v0.27.4
# podCidr: 10.244.0.0/16

# Verify

crictl ps

# CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD NAMESPACE

# b555967b565ae 24d577aa4188d 2 minutes ago Running kube-flannel 0 88c6f0af7b118 kube-flannel-ds-rwbk7 kube-flannel

# 828a29ed835af e8aef26f96135 24 minutes ago Running curl 0 f174ba42d452b curl-pod default

# cabd6d01570a4 6b5bc413b280c 40 minutes ago Running node-exporter 0 991d68e19ab50 kube-prometheus-stack-prometheus-node-exporter-46jr7 monitoring

# 8e745a752c394 2f6c962e7b831 About an hour ago Running coredns 0 e256db21066bb coredns-668d6bf9bc-d2d5g kube-system

# 06047de36bdbf 2f6c962e7b831 About an hour ago Running coredns 0 8cabfcf3ff7db coredns-668d6bf9bc-4x8j7 kube-system

# d609afb7ccc5e dcdb790dc2bfe About an hour ago Running kube-proxy 0 0ef535c405cd4 kube-proxy-r5bmb kube-system

# 724c49db426cd cfa17ff3d6634 About an hour ago Running kube-scheduler 0 bfc870da7a697 kube-scheduler-k8s-ctr kube-system

# 1a8fe2e6fd88d 82766e5f2d560 About an hour ago Running kube-controller-manager 0 3f5ed75f4a89e kube-controller-manager-k8s-ctr kube-system

# 5752e041d79c9 1211402d28f58 About an hour ago Running etcd 0 15b8d22968d79 etcd-k8s-ctr kube-system

# 74322195e66e4 58951ea1a0b5d About an hour ago Running kube-apiserver 0 179ed14a977e1 kube-apiserver-k8s-ctr kube-system

kubectl get ds -n kube-flannel -owide

# NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

# kube-flannel-ds 3 3 3 3 3 <none> 77m kube-flannel ghcr.io/flannel-io/flannel:v0.27.4 app=flannel
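As an extra check next to `kubectl rollout status`, the rollout state can be read straight off the `kubectl get ds` columns: it is complete when DESIRED, CURRENT, READY, UP-TO-DATE and AVAILABLE are all equal. The sketch below assumes the default column order of `kubectl get ds` used without `-A`; `ds_rolled_out` is a hypothetical helper name, not part of kubectl.

```shell
# ds_rolled_out: hypothetical helper, not part of kubectl.
# Reads `kubectl get ds` lines (default column order: NAME DESIRED CURRENT
# READY UP-TO-DATE AVAILABLE ...) on stdin, prints every DaemonSet whose
# counters differ, and returns 1 if any DaemonSet is still rolling out.
ds_rolled_out() {
  awk '
    $1 == "NAME" { next }   # skip the header line if present
    $2 != $3 || $2 != $4 || $2 != $5 || $2 != $6 {
      print "not rolled out: " $1 " desired=" $2 " up-to-date=" $5 " available=" $6
      bad = 1
    }
    END { exit bad }
  '
}

# Usage:
#   kubectl get ds -n kube-flannel | ds_rolled_out && echo "all DaemonSets rolled out"
```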

kubectl get pod -n kube-flannel -o yaml | grep -i image: | sort | uniq

# image: ghcr.io/flannel-io/flannel-cni-plugin:v1.8.0-flannel1

# image: ghcr.io/flannel-io/flannel:v0.27.4

3. [k8s-ctr] Rocky Linux OS minor version upgrade: v10.0 → v10.1 ⇒ node reboot

[k8s-ctr] Check the current state before the change: assess the impact of a reboot

# The node must be rebooted after the Rocky Linux upgrade, so check what a reboot will affect

kubectl get pod -A -owide |grep k8s-ctr

# default curl-pod 1/1 Running 0 26m 10.244.0.4 k8s-ctr <none> <none>

# kube-flannel kube-flannel-ds-rwbk7 1/1 Running 0 4m24s 192.168.10.100 k8s-ctr <none> <none>

# kube-system coredns-668d6bf9bc-4x8j7 1/1 Running 0 79m 10.244.0.2 k8s-ctr <none> <none>

# kube-system coredns-668d6bf9bc-d2d5g 1/1 Running 0 79m 10.244.0.3 k8s-ctr <none> <none>

# kube-system etcd-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-apiserver-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-proxy-r5bmb 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-scheduler-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# monitoring kube-prometheus-stack-prometheus-node-exporter-46jr7 1/1 Running 0 43m 192.168.10.100 k8s-ctr <none> <none>
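Before rebooting, it helps to check whether every CoreDNS replica sits on the node that is about to go down, as both do on k8s-ctr in the listing above. A minimal sketch, assuming `-owide` output where the last two columns are NOMINATED NODE and READINESS GATES, so the node name is the third field from the end (this also copes with RESTARTS values such as `1 (2m46s ago)`); `coredns_spread_check` is a hypothetical name.

```shell
# coredns_spread_check: hypothetical helper.
# Reads `kubectl get pod -owide` lines (with or without -A) on stdin, prints
# "<node> <count>" for the CoreDNS pods, and returns 1 when they do not span
# at least two nodes. The node name is taken as the third field from the end,
# since the last two columns are NOMINATED NODE and READINESS GATES.
coredns_spread_check() {
  awk '
    $1 ~ /^coredns-/ || $2 ~ /^coredns-/ { count[$(NF-2)]++ }
    END {
      for (n in count) { printf "%s %d\n", n, count[n]; nodes++ }
      if (nodes < 2) { print "WARNING: CoreDNS replicas are not spread across nodes"; exit 1 }
    }
  '
}

# Usage:
#   kubectl get pod -n kube-system -owide | coredns_spread_check
```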

# Spread CoreDNS across nodes: both replicas currently run on k8s-ctr, so think about what rebooting k8s-ctr would do to cluster DNS without the step below!

kubectl scale deployment -n kube-system coredns --replicas 1

kubectl get pod -A -owide | grep k8s-ctr

# default curl-pod 1/1 Running 0 27m 10.244.0.4 k8s-ctr <none> <none>

# kube-flannel kube-flannel-ds-rwbk7 1/1 Running 0 4m43s 192.168.10.100 k8s-ctr <none> <none>

# kube-system coredns-668d6bf9bc-4x8j7 1/1 Running 0 79m 10.244.0.2 k8s-ctr <none> <none>

# kube-system coredns-668d6bf9bc-d2d5g 1/1 Terminating 0 79m 10.244.0.3 k8s-ctr <none> <none>

# kube-system etcd-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-apiserver-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-proxy-r5bmb 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-scheduler-k8s-ctr 1/1 Running 0 79m 192.168.10.100 k8s-ctr <none> <none>

# monitoring kube-prometheus-stack-prometheus-node-exporter-46jr7 1/1 Running 0 43m 192.168.10.100 k8s-ctr <none> <none>

kubectl scale deployment -n kube-system coredns --replicas 2

kubectl get pod -n kube-system -owide | grep coredns

# coredns-668d6bf9bc-4x8j7 1/1 Running 0 79m 10.244.0.2 k8s-ctr <none> <none>

# coredns-668d6bf9bc-gb5mt 1/1 Running 0 11s 10.244.2.8 k8s-w2 <none> <none>

[k8s-ctr] Rocky Linux minor version upgrade: v10.0 → v10.1 ⇒ node reboot

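# Monitoring

## Terminal 1: watch for interruptions in the calls below!

SVCIP=$(kubectl get svc webpod -o jsonpath='{.spec.clusterIP}')

[k8s-w1] SVCIP=<enter the IP manually>

[k8s-w1] while true; do curl -s $SVCIP | grep Hostname; sleep 1; done # run from k8s-w1 so app workload traffic can still be checked while k8s-ctr reboots

or (this variant uses kubectl exec through the API server, and curl-pod itself runs on k8s-ctr, so it stops while k8s-ctr is down)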

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'
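The loops above only print the responding Hostname, so an interruption has to be spotted by eye. The hypothetical `probe_loop` below counts failed attempts and timestamps each one, which gives a rough outage window. It relies on curl exiting non-zero on a connection failure or timeout; adding `-f` in the usage line also counts HTTP error responses as failures.

```shell
# probe_loop: hypothetical helper to measure an outage window.
# Runs the given command COUNT times, INTERVAL seconds apart, logs a
# timestamped line to stderr for every failed attempt, and finally prints
# the number of failures on stdout.
# Usage: probe_loop <count> <interval> <command...>
probe_loop() {
  local count="$1" interval="$2" failures=0 i=1
  shift 2
  while [ "$i" -le "$count" ]; do
    if ! "$@" > /dev/null 2>&1; then
      failures=$((failures + 1))
      echo "$(date +%T) attempt $i failed" >&2
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "$failures"
}

# Example (on k8s-w1 while k8s-ctr reboots): 300 probes, 1 s apart, 1 s connect timeout.
#   probe_loop 300 1 curl -sf --connect-timeout 1 "http://$SVCIP"
```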

# Check the Rocky Linux version

rpm -aq | grep release

# rocky-release-10.0-1.6.el10.noarch

uname -r

# 6.12.0-55.39.1.el10_0.aarch64

-------------------------------------------------------------

# (Optional) Pin the installed containerd.io version so it does not get upgraded

rpm -q containerd.io # check the currently installed version

# containerd.io-2.1.5-1.el10.aarch64

## Install the versionlock plugin

dnf install -y 'dnf-command(versionlock)'

## Lock the containerd.io version

dnf versionlock add containerd.io

# Last metadata expiration check: 0:29:38 ago on Thu 22 Jan 2026 01:31:14 AM KST.

# Adding versionlock on: containerd.io-0:2.1.5-1.el10.*

## List the version locks

dnf versionlock list

# containerd.io-0:2.1.5-1.el10.*

-------------------------------------------------------------

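# Run the update: containerd appears to get upgraded in this step as well. If you need strict version control, exclude containerd/runc from the update.

dnf -y update # installs the new kernel 6.12.0-124.27.1.el10_1.aarch64

## Kernel update complete: the new kernel 6.12.0-124.27.1.el10_1.aarch64 was installed successfully.

## Bootloader updated: on the next reboot the system boots into the newest kernel (6.12.0-124).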

Running scriptlet: kernel-modules-core-6.12.0-124.27.1.el10_1.aarch64 584/584

Running scriptlet: kernel-core-6.12.0-124.27.1.el10_1.aarch64 584/584

Generating grub configuration file ...

Adding boot menu entry for UEFI Firmware Settings ...

done

kdump: For kernel=/boot/vmlinuz-6.12.0-124.27.1.el10_1.aarch64, crashkernel=2G-4G:256M,4G-64G:320M,64G-:576M now.

Please reboot the system for the change to take effect.

Note if you don't want kdump-utils to manage the crashkernel kernel parameter, please set auto_reset_crashkernel=no in /etc/kdump.conf.

Running scriptlet: kernel-modules-6.12.0-124.27.1.el10_1.aarch64 584/584

Running scriptlet: kdump-utils-1.0.54-7.el10.aarch64 584/584

kdump: For kernel=/boot/vmlinuz-6.12.0-55.39.1.el10_0.aarch64, crashkernel=2G-4G:256M,4G-64G:320M,64G-:576M now.

Please reboot the system for the change to take effect.

Note if you don't want kdump-utils to manage the crashkernel kernel parameter, please set auto_reset_crashkernel=no in /etc/kdump.conf.

# Reboot: this is a single control-plane node, so the Kubernetes API is unreachable until the node has finished booting!

reboot

ping 192.168.10.100

64 bytes from 192.168.10.100: icmp_seq=47 ttl=64 time=0.452 ms

64 bytes from 192.168.10.100: icmp_seq=48 ttl=64 time=0.406 ms

Request timeout for icmp_seq 49 # ping starts failing as the node goes down for the reboot

Request timeout for icmp_seq 50

Request timeout for icmp_seq 51

Request timeout for icmp_seq 52

Request timeout for icmp_seq 53

Request timeout for icmp_seq 54

Request timeout for icmp_seq 55

Request timeout for icmp_seq 56

Request timeout for icmp_seq 57

Request timeout for icmp_seq 58

Request timeout for icmp_seq 59

Request timeout for icmp_seq 60

Request timeout for icmp_seq 61

Request timeout for icmp_seq 62

Request timeout for icmp_seq 63

Request timeout for icmp_seq 64

Request timeout for icmp_seq 65

Request timeout for icmp_seq 66

64 bytes from 192.168.10.100: icmp_seq=67 ttl=64 time=0.364 ms # replies resume once the NIC comes up during boot

64 bytes from 192.168.10.100: icmp_seq=68 ttl=64 time=0.291 ms

...
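The ping capture gives a rough network-level outage figure: with a 1-second interval, each `Request timeout` line is about one second of unreachability, so icmp_seq 49 through 66 above amounts to 18 lost probes, roughly 18 seconds. The Kubernetes API stays unavailable for longer, until kubelet has started the static Pods again. The sketch assumes macOS/BSD-style `Request timeout for icmp_seq N` lines (Linux iputils ping reports missing replies differently); `ping_downtime` is a hypothetical name.

```shell
# ping_downtime: hypothetical helper.
# Reads ping output on stdin, counts "Request timeout for icmp_seq" lines
# and converts them to seconds using the probe interval (default: 1 s).
ping_downtime() {
  local interval="${1:-1}"
  awk -v iv="$interval" '
    /Request timeout for icmp_seq/ { lost++ }
    END { printf "%d lost probes, ~%d s unreachable\n", lost, lost * iv }
  '
}

# Usage (on the host that runs ping):
#   ping 192.168.10.100 | tee ping.log    # stop with Ctrl-C after the node is back
#   ping_downtime < ping.log
```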

# vagrant ssh k8s-ctr

rpm -aq | grep release

# rocky-release-10.1-1.4.el10.noarch

uname -r

# 6.12.0-124.27.1.el10_1.aarch64

# Check that the pods came back up

kubectl get pod -A -owide |grep k8s-ctr

default curl-pod 1/1 Running 1 (2m46s ago) 41m 10.244.0.3 k8s-ctr <none> <none>

kube-flannel kube-flannel-ds-rwbk7 1/1 Running 1 (2m46s ago) 19m 192.168.10.100 k8s-ctr <none> <none>

kube-system coredns-668d6bf9bc-4x8j7 1/1 Running 1 (2m46s ago) 93m 10.244.0.2 k8s-ctr <none> <none>

kube-system etcd-k8s-ctr 1/1 Running 1 (2m46s ago) 94m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-apiserver-k8s-ctr 1/1 Running 1 (2m46s ago) 94m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-controller-manager-k8s-ctr 1/1 Running 1 (2m46s ago) 94m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-proxy-r5bmb 1/1 Running 1 (2m46s ago) 93m 192.168.10.100 k8s-ctr <none> <none>

kube-system kube-scheduler-k8s-ctr 1/1 Running 1 (2m46s ago) 94m 192.168.10.100 k8s-ctr <none> <none>

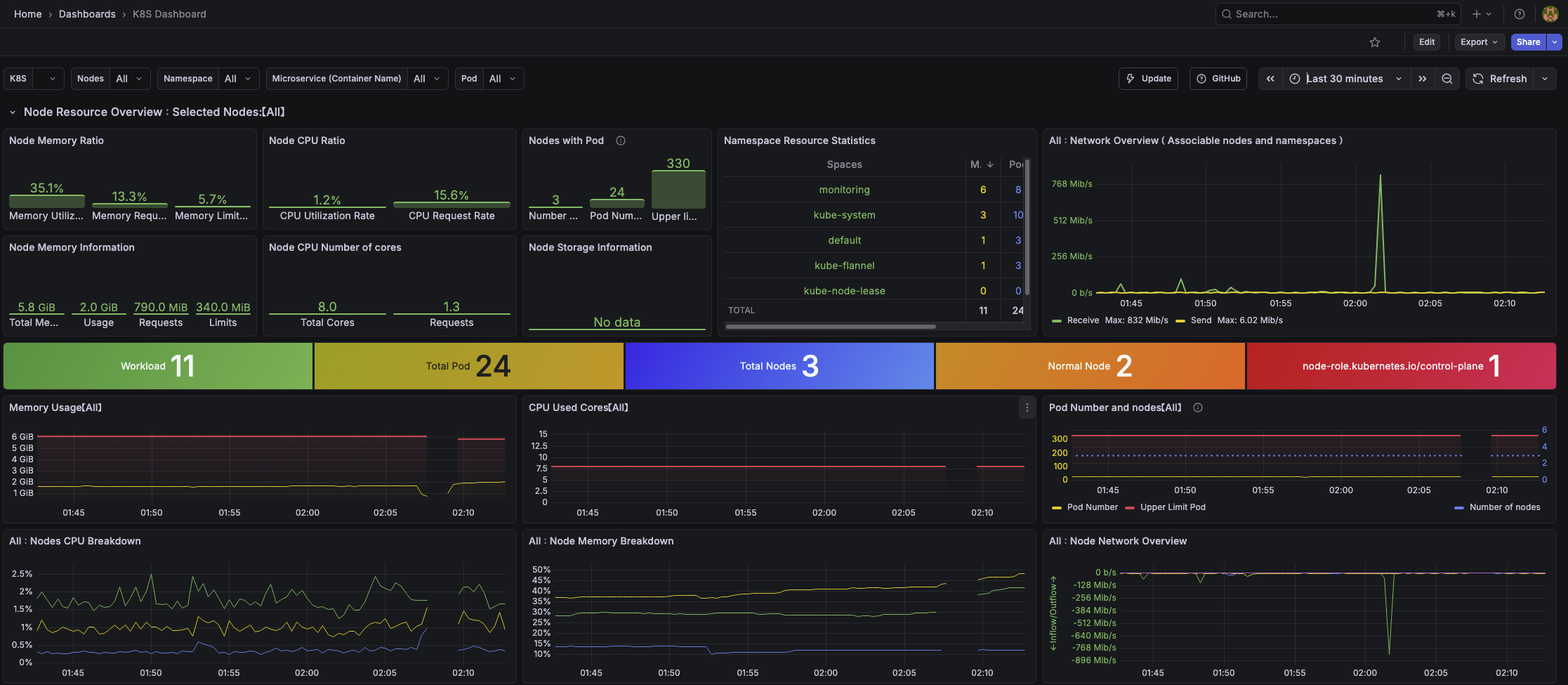

monitoring kube-prometheus-stack-prometheus-node-exporter-46jr7 1/1 Running 1 (2m46s ago) 58m 192.168.10.100 k8s-ctr <none> <none>OS 업그레이드 도중 다음과 같이 매트릭 수집이 중단된 것을 확인할 수 있습니다.

4. Containerd(runc) Upgrade

containerd was already updated automatically by the dnf -y update in the previous step.

5. [k8s-ctr] Kubeadm, Kubelet, Kubectl Upgrade: v1.32.11 → v1.33.7

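Upgrade kubeadm first and use it to upgrade the control-plane components (kubeadm upgrade plan, then kubeadm upgrade apply); then upgrade kubelet and kubectl. Once everything is upgraded, restart the kubelet service so the changes take effect.

containerd is not restarted in this process. Upgrading kubeadm does not change the CRI API version or the socket path used to talk to containerd, so the runtime keeps running as-is.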
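kubeadm does not support skipping minor versions, which is why this post moves 1.32 → 1.33 → 1.34 in separate rounds. A small sketch of a hypothetical `upgrade_path` helper that lists the minor releases to pass through; it assumes the major version stays at 1 and accepts versions with or without a leading `v` or patch number.

```shell
# upgrade_path: hypothetical helper.
# Prints the minor releases to upgrade through, one step at a time,
# because kubeadm upgrades cannot skip a minor version.
# Usage: upgrade_path <current version> <target version>, e.g. 1.32 1.34
upgrade_path() {
  local cur="${1#v}" tgt="${2#v}"
  local major="${cur%%.*}" rest m tgt_minor path=""
  rest="${cur#*.}"; m="${rest%%.*}"            # current minor
  rest="${tgt#*.}"; tgt_minor="${rest%%.*}"    # target minor
  if [ "${tgt%%.*}" != "$major" ] || [ "$tgt_minor" -le "$m" ]; then
    echo "unsupported: $1 -> $2" >&2
    return 1
  fi
  while [ "$m" -lt "$tgt_minor" ]; do
    m=$((m + 1))
    path="${path:+$path }$major.$m"
  done
  echo "$path"
}

# Usage:
#   upgrade_path v1.32.11 v1.34.3    # prints: 1.33 1.34
```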

# Update the repo: 1.32 -> 1.33

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.33/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.33/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

dnf makecache
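Because pkgs.k8s.io publishes one repository per minor version, `baseurl` and `gpgkey` must change together at every step (v1.33 here, v1.34 later). A sketch of a hypothetical `k8s_repo` function that renders the same file as above from a single argument, assuming the `vMAJOR.MINOR` form used in those URLs:

```shell
# k8s_repo: hypothetical helper that prints the kubernetes.repo contents for
# one minor release, keeping baseurl and gpgkey in sync.
k8s_repo() {
  local minor="$1"   # e.g. v1.33
  if ! printf '%s\n' "$minor" | grep -Eqx 'v[0-9]+\.[0-9]+'; then
    echo "expected vMAJOR.MINOR, e.g. v1.33" >&2
    return 1
  fi
  cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}

# Usage (as root on each node):
#   k8s_repo v1.34 | tee /etc/yum.repos.d/kubernetes.repo && dnf makecache
```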

# List all installable versions: --disableexcludes=kubernetes ignores the exclude rule of the kubernetes repo for this command only (a one-off override)

dnf list --showduplicates kubeadm --disableexcludes=kubernetes

Installed Packages

kubeadm.aarch64 1.32.11-150500.1.1 @kubernetes

Available Packages

..

kubeadm.aarch64 1.33.7-150500.1.1 kubernetes

kubeadm.ppc64le 1.33.7-150500.1.1 kubernetes

kubeadm.s390x 1.33.7-150500.1.1 kubernetes

kubeadm.src 1.33.7-150500.1.1 kubernetes

kubeadm.x86_64 1.33.7-150500.1.1 kubernetes
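Reading the exact package version off `dnf list --showduplicates` by eye gets error-prone once several patch releases are listed. The hypothetical `latest_patch` below keeps one architecture and minor release and returns the newest `<version>-<release>` string using `sort -V` (GNU coreutils); for the entries shown above it would pick `1.33.7-150500.1.1` on aarch64.

```shell
# latest_patch: hypothetical helper.
# Reads `dnf list --showduplicates <pkg>` output on stdin and prints the
# newest <version>-<release> for one minor release and architecture.
# Usage: latest_patch <minor, e.g. 1.33> [arch, default: uname -m]
latest_patch() {
  local minor="$1" arch="${2:-$(uname -m)}"
  awk -v minor="$minor" -v arch="$arch" '
    # Field 1 is <name>.<arch>, field 2 is <version>-<release>.
    (substr($1, length($1) - length(arch)) == ("." arch)) && (index($2, minor ".") == 1) { print $2 }
  ' | sort -V | tail -n 1
}

# Usage:
#   ver=$(dnf list --showduplicates kubeadm --disableexcludes=kubernetes | latest_patch 1.33)
#   dnf install -y --disableexcludes=kubernetes "kubeadm-${ver}"
```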

# Install

dnf install -y --disableexcludes=kubernetes kubeadm-1.33.7-150500.1.1

Upgrading:

kubeadm aarch64 1.33.7-150500.1.1 kubernetes 11 M

# Verify the installed binary

which kubeadm && kubeadm version -o yaml

# /usr/bin/kubeadm

# clientVersion:

# buildDate: "2025-12-09T14:41:01Z"

# compiler: gc

# gitCommit: a7245cdf3f69e11356c7e8f92b3e78ca4ee4e757

# gitTreeState: clean

# gitVersion: v1.33.7

# goVersion: go1.24.11

# major: "1"

# minor: "33"

# platform: linux/arm64

# Review the upgrade plan: current version, target version, and component changes

kubeadm upgrade plan

# [upgrade/versions] Target version: v1.33.7

# [upgrade/versions] Latest version in the v1.32 series: v1.32.11

# Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

# COMPONENT NODE CURRENT TARGET

# kubelet k8s-ctr v1.32.11 v1.33.7

# kubelet k8s-w1 v1.32.11 v1.33.7

# kubelet k8s-w2 v1.32.11 v1.33.7

# Upgrade to the latest stable version:

# COMPONENT NODE CURRENT TARGET

# kube-apiserver k8s-ctr v1.32.11 v1.33.7

# kube-controller-manager k8s-ctr v1.32.11 v1.33.7

# kube-scheduler k8s-ctr v1.32.11 v1.33.7

# kube-proxy 1.32.11 v1.33.7 # kube-proxy is a DaemonSet, so this affects every node

# CoreDNS v1.11.3 v1.12.0 # affects cluster DNS resolution

# etcd k8s-ctr 3.5.24-0 3.5.24-0 # same version, so no impact

# You can now apply the upgrade by executing the following command:

# kubeadm upgrade apply v1.33.7

# _____________________________________________________________________

# The table below shows the current state of component configs as understood by this version of kubeadm.

# Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

# resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

# upgrade to is denoted in the "PREFERRED VERSION" column.

# API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

# kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

# kubelet.config.k8s.io v1beta1 v1beta1 no

# _____________________________________________________________________

# Monitoring

## Terminal 1

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

## Terminal 2

watch -d kubectl get node

## Terminal 3

watch -d kubectl get pod -n kube-system

## Terminal 4

watch -d etcdctl member list -w table

# Pre-pull the container images: do this before the upgrade itself to shorten the maintenance window

kubeadm config images pull

[config/images] Pulled registry.k8s.io/kube-apiserver:v1.33.7

[config/images] Pulled registry.k8s.io/kube-controller-manager:v1.33.7

[config/images] Pulled registry.k8s.io/kube-scheduler:v1.33.7

[config/images] Pulled registry.k8s.io/kube-proxy:v1.33.7

[config/images] Pulled registry.k8s.io/coredns/coredns:v1.12.0

[config/images] Pulled registry.k8s.io/pause:3.10

[config/images] Pulled registry.k8s.io/etcd:3.5.24-0

crictl images

...

# [k8s-w1/w2] Pre-pull the new kube-proxy (DaemonSet) and CoreDNS images on all nodes as well!

crictl pull registry.k8s.io/kube-proxy:v1.33.7

crictl pull registry.k8s.io/coredns/coredns:v1.12.0
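Rather than typing the worker-side image names by hand, they can be derived from `kubeadm config images list` for the target version. The sketch only prints `crictl pull` commands (a dry run) for kube-proxy, CoreDNS and the pause sandbox image; `worker_pull_cmds` is hypothetical, and whether the pause image is actually used depends on the sandbox image configured in containerd.

```shell
# worker_pull_cmds: hypothetical helper.
# Reads an image list (one image per line) on stdin and prints `crictl pull`
# commands for the images that also run on worker nodes:
# kube-proxy (DaemonSet), CoreDNS, and pause (pod sandbox image).
worker_pull_cmds() {
  grep -E '/(kube-proxy|coredns/coredns|pause):' | sed 's/^/crictl pull /'
}

# Usage: review the printed commands, then run them on k8s-w1 and k8s-w2.
#   kubeadm config images list --kubernetes-version v1.33.7 | worker_pull_cmds
```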

# Run it, then answer y at the confirmation prompt

kubeadm upgrade apply v1.33.7

# [upgrade] Reading configuration from the "kubeadm-config" ConfigMap in namespace "kube-system"...

# [upgrade] Use 'kubeadm init phase upload-config --config your-config-file' to re-upload it.

# [upgrade/preflight] Running preflight checks

# [upgrade] Running cluster health checks

# [upgrade/preflight] You have chosen to upgrade the cluster version to "v1.33.7"

# [upgrade/versions] Cluster version: v1.32.11

# [upgrade/versions] kubeadm version: v1.33.7

# [upgrade] Are you sure you want to proceed? [y/N]: y

# [upgrade/preflight] Pulling images required for setting up a Kubernetes cluster

# ...

# [addons] Applied essential addon: CoreDNS

# [addons] Applied essential addon: kube-proxy

# [upgrade] SUCCESS! A control plane node of your cluster was upgraded to "v1.33.7".

# [upgrade] Now please proceed with upgrading the rest of the nodes by following the right order.

# The control-plane component images (apiserver, kcm, etc.) are now at 1.33.7,

# but kubelet has not been upgraded yet. The VERSION column of kubectl get node shows the kubelet version.

kubectl get node -owide

# NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr Ready control-plane 109m v1.32.11 192.168.10.100 <none> Rocky Linux 10.1 (Red Quartz) 6.12.0-124.27.1.el10_1.aarch64 containerd://2.1.5

# k8s-w1 Ready <none> 108m v1.32.11 192.168.10.101 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

# k8s-w2 Ready <none> 107m v1.32.11 192.168.10.102 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

kubectl describe node k8s-ctr | grep 'Kubelet Version:'

Kubelet Version: v1.32.11

kubectl get pod -A

...

# Check the images: etcd and pause only have their existing versions locally, since neither changed in this upgrade

crictl images

# IMAGE TAG IMAGE ID SIZE

# registry.k8s.io/coredns/coredns v1.11.3 2f6c962e7b831 16.9MB

# registry.k8s.io/coredns/coredns v1.12.0 f72407be9e08c 19.1MB

# registry.k8s.io/etcd 3.5.24-0 1211402d28f58 21.9MB

# registry.k8s.io/kube-apiserver v1.32.11 58951ea1a0b5d 26.4MB

# registry.k8s.io/kube-apiserver v1.33.7 6d7bc8e445519 27.4MB

# registry.k8s.io/kube-controller-manager v1.32.11 82766e5f2d560 24.2MB

# registry.k8s.io/kube-controller-manager v1.33.7 a94595d0240bc 25.1MB

# registry.k8s.io/kube-proxy v1.32.11 dcdb790dc2bfe 27.6MB

# registry.k8s.io/kube-proxy v1.33.7 78ccb937011a5 28.3MB

# registry.k8s.io/kube-scheduler v1.32.11 cfa17ff3d6634 19.2MB

# registry.k8s.io/kube-scheduler v1.33.7 94005b6be50f0 19.9MB

# registry.k8s.io/pause 3.10 afb61768ce381 268kB

# Check that the static Pod manifests were updated: the image fields in the YAML files now point to the new versions

ls -l /etc/kubernetes/manifests/

cat /etc/kubernetes/manifests/*.yaml | grep -i image:

# image: registry.k8s.io/etcd:3.5.24-0

# image: registry.k8s.io/kube-apiserver:v1.33.7

# image: registry.k8s.io/kube-controller-manager:v1.33.7

# image: registry.k8s.io/kube-scheduler:v1.33.7
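The grep above can be turned into a pass/fail check. The hypothetical `manifests_at_version` below flags any kube-apiserver, kube-controller-manager or kube-scheduler image whose tag differs from the target; etcd is skipped because kubeadm picks the etcd version separately (it stayed at 3.5.24-0 in this round).

```shell
# manifests_at_version: hypothetical helper.
# Reads `image:` lines on stdin and prints every control-plane image whose
# tag is not the expected version; returns 1 if any image is outdated.
manifests_at_version() {
  local want="$1"   # e.g. v1.33.7
  awk -v want="$want" '
    /image:/ && /kube-(apiserver|controller-manager|scheduler):/ {
      tag = $NF
      sub(/.*:/, "", tag)   # keep only the text after the last colon
      if (tag != want) { print "outdated: " $NF; bad = 1 }
    }
    END { exit bad }
  '
}

# Usage:
#   grep -h 'image:' /etc/kubernetes/manifests/*.yaml | manifests_at_version v1.33.7
```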

# Print the container images of the pods running in the kube-system namespace

kubectl get pods -n kube-system -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{range .spec.containers[*]} - {.name}: {.image}{"\n"}{end}{"\n"}{end}'

# coredns-674b8bbfcf-8qhd9

# - coredns: registry.k8s.io/coredns/coredns:v1.12.0

# etcd-k8s-ctr

# - etcd: registry.k8s.io/etcd:3.5.24-0 # same version as before (unchanged)

# kube-apiserver-k8s-ctr

# - kube-apiserver: registry.k8s.io/kube-apiserver:v1.33.7

# kube-controller-manager-k8s-ctr

# - kube-controller-manager: registry.k8s.io/kube-controller-manager:v1.33.7

# kube-proxy-2c6d6

# - kube-proxy: registry.k8s.io/kube-proxy:v1.33.7

# kube-scheduler-k8s-ctr

# - kube-scheduler: registry.k8s.io/kube-scheduler:v1.33.7

kubelet/kubectl upgrade

# List all installable versions

dnf list --showduplicates kubelet --disableexcludes=kubernetes

dnf list --showduplicates kubectl --disableexcludes=kubernetes

# Install the upgrade

dnf install -y --disableexcludes=kubernetes kubelet-1.33.7-150500.1.1 kubectl-1.33.7-150500.1.1

Upgrading:

kubectl aarch64 1.33.7-150500.1.1 kubernetes 9.7 M

kubelet aarch64 1.33.7-150500.1.1 kubernetes 13 M

# Verify the installed binaries

which kubectl && kubectl version --client=true

# /usr/bin/kubectl

# Client Version: v1.33.7

# Kustomize Version: v5.6.0

which kubelet && kubelet --version

# /usr/bin/kubelet

# Kubernetes v1.33.7

# Restart

systemctl daemon-reload

systemctl restart kubelet # the k8s API is unreachable for about 10 seconds
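Instead of waiting a fixed time after the kubelet restart, one can poll until the API answers again. A sketch with a hypothetical `wait_until` helper; `kubectl get --raw /readyz` and `kubectl wait` are standard kubectl usage, while the 120-second timeouts are arbitrary assumptions.

```shell
# wait_until: hypothetical helper.
# Re-runs a command once per second until it succeeds or roughly TIMEOUT
# seconds have passed (only the sleep time is counted, so it is approximate).
# Usage: wait_until <timeout-seconds> <command...>
wait_until() {
  local timeout="$1" waited=0
  shift
  until "$@" > /dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}

# Usage after `systemctl restart kubelet`:
#   wait_until 120 kubectl get --raw /readyz
#   kubectl wait --for=condition=Ready node/k8s-ctr --timeout=120s
```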

# Verify

kubectl get nodes -o wide

# NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr Ready control-plane 111m v1.33.7 192.168.10.100 <none> Rocky Linux 10.1 (Red Quartz) 6.12.0-124.27.1.el10_1.aarch64 containerd://2.1.5

# k8s-w1 Ready <none> 110m v1.32.11 192.168.10.101 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

# k8s-w2 Ready <none> 109m v1.32.11 192.168.10.102 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

kubectl describe node k8s-ctr

kubectl describe node k8s-ctr | grep 'Kubelet Version:'

Kubelet Version: v1.33.7

6. [k8s-ctr] Kubeadm, Kubelet, Kubectl Upgrade: v1.33.7 → v1.34.3

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.34/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.34/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

dnf makecache

# Install

dnf install -y --disableexcludes=kubernetes kubeadm-1.34.3-150500.1.1

# Verify the installed binary

kubeadm version -o yaml

# clientVersion:

# buildDate: "2025-12-09T15:05:15Z"

# compiler: gc

# gitCommit: df11db1c0f08fab3c0baee1e5ce6efbf816af7f1

# gitTreeState: clean

# gitVersion: v1.34.3

# goVersion: go1.24.11

# major: "1"

# minor: "34"

# platform: linux/arm64

kubeadm upgrade plan

# [preflight] Running pre-flight checks.

# [upgrade/config] Reading configuration from the "kubeadm-config" ConfigMap in namespace "kube-system"...

# [upgrade/config] Use 'kubeadm init phase upload-config kubeadm --config your-config-file' to re-upload it.

# [upgrade] Running cluster health checks

# [upgrade] Fetching available versions to upgrade to

# [upgrade/versions] Cluster version: 1.33.7

# [upgrade/versions] kubeadm version: v1.34.3

# I0122 02:35:36.283566 20468 version.go:260] remote version is much newer: v1.35.0; falling back to: stable-1.34

# [upgrade/versions] Target version: v1.34.3

# [upgrade/versions] Latest version in the v1.33 series: v1.33.7

# Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

# COMPONENT NODE CURRENT TARGET

# kubelet k8s-w1 v1.32.11 v1.34.3

# kubelet k8s-w2 v1.32.11 v1.34.3

# kubelet k8s-ctr v1.33.7 v1.34.3

# Upgrade to the latest stable version:

# COMPONENT NODE CURRENT TARGET

# kube-apiserver k8s-ctr v1.33.7 v1.34.3

# kube-controller-manager k8s-ctr v1.33.7 v1.34.3

# kube-scheduler k8s-ctr v1.33.7 v1.34.3

# kube-proxy 1.33.7 v1.34.3

# CoreDNS v1.12.0 v1.12.1

# etcd k8s-ctr 3.5.24-0 3.6.5-0

# You can now apply the upgrade by executing the following command:

# kubeadm upgrade apply v1.34.3

# _____________________________________________________________________

# The table below shows the current state of component configs as understood by this version of kubeadm.

# Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

# resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

# upgrade to is denoted in the "PREFERRED VERSION" column.

# API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

# kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

# kubelet.config.k8s.io v1beta1 v1beta1 no

# _____________________________________________________________________

Run kubeadm upgrade apply: this time etcd also moves to a new version (3.5.24-0 → 3.6.5-0), so it takes a little longer than the 1.32 → 1.33 upgrade

kubeadm config images pull

# I0122 02:36:17.390610 20900 version.go:260] remote version is much newer: v1.35.0; falling back to: stable-1.34

# [config/images] Pulled registry.k8s.io/kube-apiserver:v1.34.3

# [config/images] Pulled registry.k8s.io/kube-controller-manager:v1.34.3

# [config/images] Pulled registry.k8s.io/kube-scheduler:v1.34.3

# [config/images] Pulled registry.k8s.io/kube-proxy:v1.34.3

# [config/images] Pulled registry.k8s.io/coredns/coredns:v1.12.1

# [config/images] Pulled registry.k8s.io/pause:3.10.1

# [config/images] Pulled registry.k8s.io/etcd:3.6.5-0

crictl pull registry.k8s.io/kube-proxy:v1.34.3

crictl pull registry.k8s.io/coredns/coredns:v1.12.1

crictl pull registry.k8s.io/pause:3.10.1

crictl images

# IMAGE TAG IMAGE ID SIZE

# docker.io/nicolaka/netshoot latest e8aef26f96135 208MB

# ghcr.io/flannel-io/flannel-cni-plugin v1.7.1-flannel1 127562bd9047f 5.14MB

# ghcr.io/flannel-io/flannel-cni-plugin v1.8.0-flannel1 c6ef1e714d02a 5.17MB

# ghcr.io/flannel-io/flannel v0.27.3 d84558c0144bc 33.1MB

# ghcr.io/flannel-io/flannel v0.27.4 24d577aa4188d 33.2MB

# quay.io/prometheus/node-exporter v1.10.2 6b5bc413b280c 12.1MB

# registry.k8s.io/coredns/coredns v1.11.3 2f6c962e7b831 16.9MB

# registry.k8s.io/coredns/coredns v1.12.0 f72407be9e08c 19.1MB

# registry.k8s.io/coredns/coredns v1.12.1 138784d87c9c5 20.4MB

# registry.k8s.io/etcd 3.5.24-0 1211402d28f58 21.9MB

# registry.k8s.io/etcd 3.6.5-0 2c5f0dedd21c2 21.1MB

# registry.k8s.io/kube-apiserver v1.32.11 58951ea1a0b5d 26.4MB

# registry.k8s.io/kube-apiserver v1.33.7 6d7bc8e445519 27.4MB

# registry.k8s.io/kube-apiserver v1.34.3 cf65ae6c8f700 24.6MB

# registry.k8s.io/kube-controller-manager v1.32.11 82766e5f2d560 24.2MB

# registry.k8s.io/kube-controller-manager v1.33.7 a94595d0240bc 25.1MB

# registry.k8s.io/kube-controller-manager v1.34.3 7ada8ff13e54b 20.7MB

# registry.k8s.io/kube-proxy v1.32.11 dcdb790dc2bfe 27.6MB

# registry.k8s.io/kube-proxy v1.33.7 78ccb937011a5 28.3MB

# registry.k8s.io/kube-proxy v1.34.3 4461daf6b6af8 22.8MB

# registry.k8s.io/kube-scheduler v1.32.11 cfa17ff3d6634 19.2MB

# registry.k8s.io/kube-scheduler v1.33.7 94005b6be50f0 19.9MB

# registry.k8s.io/kube-scheduler v1.34.3 2f2aa21d34d2d 15.8MB

# registry.k8s.io/pause 3.10 afb61768ce381 268kB

# registry.k8s.io/pause 3.10.1 d7b100cd9a77b 268kB

kubeadm upgrade apply v1.34.3 --yes

# [upgrade] Reading configuration from the "kubeadm-config" ConfigMap in namespace "kube-system"...

# [upgrade] Use 'kubeadm init phase upload-config kubeadm --config your-config-file' to re-upload it.

# [upgrade/preflight] Running preflight checks

# [upgrade] Running cluster health checks

# [upgrade/preflight] You have chosen to upgrade the cluster version to "v1.34.3"

# [upgrade/versions] Cluster version: v1.33.7

# [upgrade/versions] kubeadm version: v1.34.3

# [upgrade/preflight] Pulling images required for setting up a Kubernetes cluster

# [upgrade/preflight] This might take a minute or two, depending on the speed of your internet connection

# [upgrade/preflight] You can also perform this action beforehand using 'kubeadm config images pull'

# [upgrade/control-plane] Upgrading your static Pod-hosted control plane to version "v1.34.3" (timeout: 5m0s)...

# [upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests3544192219"

# [upgrade/staticpods] Preparing for "etcd" upgrade

# [upgrade/staticpods] Renewing etcd-server certificate

# [upgrade/staticpods] Renewing etcd-peer certificate

# [upgrade/staticpods] Renewing etcd-healthcheck-client certificate

# [upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/etcd.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2026-01-22-02-40-59/etcd.yaml"

# [upgrade/staticpods] Waiting for the kubelet to restart the component

# [upgrade/staticpods] This can take up to 5m0s

# [apiclient] Found 1 Pods for label selector component=etcd

# [upgrade/staticpods] Component "etcd" upgraded successfully!

# [upgrade/etcd] Waiting for etcd to become available

# [upgrade/staticpods] Preparing for "kube-apiserver" upgrade

# [upgrade/staticpods] Renewing apiserver certificate

# [upgrade/staticpods] Renewing apiserver-kubelet-client certificate

# [upgrade/staticpods] Renewing front-proxy-client certificate

# [upgrade/staticpods] Renewing apiserver-etcd-client certificate

# [upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2026-01-22-02-40-59/kube-apiserver.yaml"

# [upgrade/staticpods] Waiting for the kubelet to restart the component

# [upgrade/staticpods] This can take up to 5m0s

# [apiclient] Found 1 Pods for label selector component=kube-apiserver

# [upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

# [upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

# [upgrade/staticpods] Renewing controller-manager.conf certificate

# [upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2026-01-22-02-40-59/kube-controller-manager.yaml"

# [upgrade/staticpods] Waiting for the kubelet to restart the component

# [upgrade/staticpods] This can take up to 5m0s

# [apiclient] Found 1 Pods for label selector component=kube-controller-manager

# [upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

# [upgrade/staticpods] Preparing for "kube-scheduler" upgrade

# [upgrade/staticpods] Renewing scheduler.conf certificate

# [upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2026-01-22-02-40-59/kube-scheduler.yaml"

# [upgrade/staticpods] Waiting for the kubelet to restart the component

# [upgrade/staticpods] This can take up to 5m0s

# [apiclient] Found 1 Pods for label selector component=kube-scheduler

# [upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

# [upgrade/control-plane] The control plane instance for this node was successfully upgraded!

# [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

# [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

# [upgrade/kubeconfig] The kubeconfig files for this node were successfully upgraded!

# W0122 02:43:29.324766 22426 postupgrade.go:116] Using temporary directory /etc/kubernetes/tmp/kubeadm-kubelet-config845772198 for kubelet config. To override it set the environment variable KUBEADM_UPGRADE_DRYRUN_DIR

# [upgrade] Backing up kubelet config file to /etc/kubernetes/tmp/kubeadm-kubelet-config845772198/config.yaml

# [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

# [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/instance-config.yaml"

# [patches] Applied patch of type "application/strategic-merge-patch+json" to target "kubeletconfiguration"

# [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

# [upgrade/kubelet-config] The kubelet configuration for this node was successfully upgraded!

# [upgrade/bootstrap-token] Configuring bootstrap token and cluster-info RBAC rules

# [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

# [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

# [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

# [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

# [addons] Applied essential addon: CoreDNS

# [addons] Applied essential addon: kube-proxy

# [upgrade] SUCCESS! A control plane node of your cluster was upgraded to "v1.34.3".

# [upgrade] Now please proceed with upgrading the rest of the nodes by following the right order.

Check the cluster state

# The control-plane component images (apiserver, kcm, etc.) are now at 1.34.3,

# but kubelet has not been upgraded yet. The VERSION column of kubectl get node shows the kubelet version.

kubectl get node -owide

# NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr Ready control-plane 126m v1.33.7 192.168.10.100 <none> Rocky Linux 10.1 (Red Quartz) 6.12.0-124.27.1.el10_1.aarch64 containerd://2.1.5

# k8s-w1 Ready <none> 125m v1.32.11 192.168.10.101 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

# k8s-w2 Ready <none> 124m v1.32.11 192.168.10.102 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

kubectl describe node k8s-ctr | grep 'Kubelet Version:'

# Kubelet Version: v1.33.7
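At this point the control plane runs v1.34.3 while k8s-ctr's kubelet is v1.33.7 and the workers are still on v1.32.11. The hypothetical `kubelet_skew_ok` below encodes the kubelet rule from the version skew policy summarized at the start of this post (never newer than kube-apiserver; at most 3 minors older, or 2 when the kubelet is older than 1.25) so each node can be checked. It assumes major version 1. For the state above the largest skew is 2, which is within policy.

```shell
# kubelet_skew_ok: hypothetical helper for the kubelet skew rule.
# Usage: kubelet_skew_ok <kube-apiserver version> <kubelet version>
#   e.g. kubelet_skew_ok v1.34.3 v1.32.11
kubelet_skew_ok() {
  local api="${1#v}" kl="${2#v}" api_minor kl_minor skew max=3
  api_minor="${api#*.}"; api_minor="${api_minor%%.*}"
  kl_minor="${kl#*.}";   kl_minor="${kl_minor%%.*}"
  [ "$kl_minor" -lt 25 ] && max=2   # kubelet < 1.25: at most 2 minors behind
  skew=$((api_minor - kl_minor))
  if [ "$skew" -lt 0 ]; then
    echo "kubelet $2 is newer than kube-apiserver $1"
    return 1
  fi
  if [ "$skew" -gt "$max" ]; then
    echo "kubelet $2 is $skew minors behind kube-apiserver $1 (max $max)"
    return 1
  fi
  echo "ok: kubelet $2 vs kube-apiserver $1 (skew $skew)"
}

# Usage: check every node against the freshly upgraded control plane.
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name} {.status.nodeInfo.kubeletVersion}{"\n"}{end}' |
#     while read -r node ver; do printf '%s: ' "$node"; kubelet_skew_ok v1.34.3 "$ver"; done
```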

kubectl get pod -A

# NAMESPACE NAME READY STATUS RESTARTS AGE

# default curl-pod 1/1 Running 1 (35m ago) 74m

# default webpod-697b545f57-87zr5 1/1 Running 0 74m

# default webpod-697b545f57-ngsb7 1/1 Running 0 74m

# kube-flannel kube-flannel-ds-5kk9q 1/1 Running 0 51m

# kube-flannel kube-flannel-ds-rwbk7 1/1 Running 1 (35m ago) 52m

# kube-flannel kube-flannel-ds-wtfzq 1/1 Running 0 51m

# kube-system coredns-66bc5c9577-68fx6 1/1 Running 0 55s

# kube-system coredns-66bc5c9577-hsrvf 1/1 Running 0 55s

# kube-system etcd-k8s-ctr 1/1 Running 0 2m17s

# kube-system kube-apiserver-k8s-ctr 1/1 Running 0 98s

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 74s

# kube-system kube-proxy-626f4 1/1 Running 0 51s

# kube-system kube-proxy-vx4nr 1/1 Running 0 55s

# kube-system kube-proxy-wc4f5 1/1 Running 0 49s

# kube-system kube-scheduler-k8s-ctr 1/1 Running 0 61s

# kube-system metrics-server-5dd7b49d79-lx278 1/1 Running 0 126m

# monitoring alertmanager-kube-prometheus-stack-alertmanager-0 2/2 Running 0 90m

# monitoring kube-prometheus-stack-grafana-5cb7c586f9-zr7vj 3/3 Running 0 90m

# monitoring kube-prometheus-stack-kube-state-metrics-7846957b5b-thrsg 1/1 Running 11 (107s ago) 90m

# monitoring kube-prometheus-stack-operator-584f446c98-7hbbc 1/1 Running 0 90m

# monitoring kube-prometheus-stack-prometheus-node-exporter-46jr7 1/1 Running 1 (35m ago) 90m

# monitoring kube-prometheus-stack-prometheus-node-exporter-779lg 1/1 Running 0 90m

# monitoring kube-prometheus-stack-prometheus-node-exporter-nghcp 1/1 Running 0 90m

# monitoring prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 0

...
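After the control-plane upgrade, it helps to scan the full pod list for anything that did not come back cleanly. Below is a minimal sketch of that check. `not_ready_pods` is a hypothetical helper, not a kubectl feature. It assumes the default `kubectl get pod -A` column layout. It prints pods whose STATUS is not Running, or whose READY count is incomplete, and skips Completed pods. Restart counts are not checked.

```shell
# Hypothetical helper (not a kubectl feature): read `kubectl get pod -A`
# output on stdin and print pods that are not fully ready.
# Assumes the default columns: NAMESPACE NAME READY STATUS RESTARTS AGE
not_ready_pods() {
  awk 'NR > 1 {
    if ($4 == "Completed") next          # finished Job pods are expected to be 0/1
    split($3, r, "/")                    # READY column, e.g. "2/3" -> r[1]=2, r[2]=3
    if ($4 != "Running" || r[1] != r[2])
      print $1 "/" $2 " " $3 " " $4
  }'
}

# Usage on the control-plane node:
#   kubectl get pod -A | not_ready_pods
```

An empty result means every listed pod is Running with all of its containers ready.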

# Check the image updates: confirm that the new etcd and pause versions were pulled.

crictl images

# IMAGE TAG IMAGE ID SIZE

# registry.k8s.io/coredns/coredns v1.11.3 2f6c962e7b831 16.9MB

# registry.k8s.io/coredns/coredns v1.12.0 f72407be9e08c 19.1MB

# registry.k8s.io/coredns/coredns v1.12.1 138784d87c9c5 20.4MB

# registry.k8s.io/etcd 3.5.24-0 1211402d28f58 21.9MB

# registry.k8s.io/etcd 3.6.5-0 2c5f0dedd21c2 21.1MB

# registry.k8s.io/kube-apiserver v1.32.11 58951ea1a0b5d 26.4MB

# registry.k8s.io/kube-apiserver v1.33.7 6d7bc8e445519 27.4MB

# registry.k8s.io/kube-apiserver v1.34.3 cf65ae6c8f700 24.6MB

# registry.k8s.io/kube-controller-manager v1.32.11 82766e5f2d560 24.2MB

# registry.k8s.io/kube-controller-manager v1.33.7 a94595d0240bc 25.1MB

# registry.k8s.io/kube-controller-manager v1.34.3 7ada8ff13e54b 20.7MB

# registry.k8s.io/kube-proxy v1.32.11 dcdb790dc2bfe 27.6MB

# registry.k8s.io/kube-proxy v1.33.7 78ccb937011a5 28.3MB

# registry.k8s.io/kube-proxy v1.34.3 4461daf6b6af8 22.8MB

# registry.k8s.io/kube-scheduler v1.32.11 cfa17ff3d6634 19.2MB

# registry.k8s.io/kube-scheduler v1.33.7 94005b6be50f0 19.9MB

# registry.k8s.io/kube-scheduler v1.34.3 2f2aa21d34d2d 15.8MB

# registry.k8s.io/pause 3.10 afb61768ce381 268kB

# registry.k8s.io/pause 3.10.1 d7b100cd9a77b 268kB
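After two consecutive upgrades, the node still holds the v1.32.11 and v1.33.7 control-plane images. The sketch below lists them as cleanup candidates. `stale_kube_images` is a hypothetical helper that assumes the `crictl images` column layout shown above. It only covers the kube-apiserver, kube-controller-manager, kube-scheduler, and kube-proxy images, and it deletes nothing on its own.

```shell
# Hypothetical helper: read `crictl images` output on stdin and print
# control-plane/kube-proxy images whose tag differs from the target version.
stale_kube_images() {
  local target="$1"                      # e.g. v1.34.3
  awk -v t="$target" '
    NR > 1 && $1 ~ /\/kube-(apiserver|controller-manager|scheduler|proxy)$/ && $2 != t {
      print $1 ":" $2
    }'
}

# Usage (review the list before removing anything):
#   crictl images | stale_kube_images v1.34.3
#   crictl images | stale_kube_images v1.34.3 | xargs -r -n1 crictl rmi
```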

# Check that the static pod YAML files were updated: the image fields in each manifest should now point to the new versions.

ls -l /etc/kubernetes/manifests/

cat /etc/kubernetes/manifests/*.yaml | grep -i image:

# image: registry.k8s.io/etcd:3.6.5-0

# image: registry.k8s.io/kube-apiserver:v1.34.3

# image: registry.k8s.io/kube-controller-manager:v1.34.3

# image: registry.k8s.io/kube-scheduler:v1.34.3

kubectl get pods -n kube-system -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{range .spec.containers[*]} - {.name}: {.image}{"\n"}{end}{"\n"}{end}'

# coredns-66bc5c9577-v99kw

# - coredns: registry.k8s.io/coredns/coredns:v1.12.1

# etcd-k8s-ctr

# - etcd: registry.k8s.io/etcd:3.6.5-0

# kube-apiserver-k8s-ctr

# - kube-apiserver: registry.k8s.io/kube-apiserver:v1.34.3

# kube-controller-manager-k8s-ctr

# - kube-controller-manager: registry.k8s.io/kube-controller-manager:v1.34.3

# kube-proxy-9p996

# - kube-proxy: registry.k8s.io/kube-proxy:v1.34.3

# kube-scheduler-k8s-ctr

# - kube-scheduler: registry.k8s.io/kube-scheduler:v1.34.3
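Instead of eyeballing the `grep -i image:` output, the manifest check can fail loudly on a mismatch. The sketch below uses a hypothetical `check_manifest_tags` function. It assumes that each `image:` key sits on its own line, as in kubeadm-generated static pod manifests. It compares only the `/kube-*` images, because etcd follows its own versioning (3.6.5-0 here).

```shell
# Hypothetical helper: print kube-* images in the static pod manifests whose
# tag differs from the expected version, and return non-zero if any are found.
check_manifest_tags() {
  local dir="$1" want="$2"               # e.g. /etc/kubernetes/manifests v1.34.3
  awk -v w="$want" '
    $1 == "image:" && $2 ~ /\/kube-/ {
      tag = $2; sub(/.*:/, "", tag)      # keep only the text after the last ":"
      if (tag != w) { print FILENAME ": " $2; bad = 1 }
    }
    END { exit bad }' "$dir"/*.yaml
}

# Usage:
#   check_manifest_tags /etc/kubernetes/manifests v1.34.3 && echo "manifests OK"
```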

Upgrading kubelet/kubectl

# Check all installable versions

dnf list --showduplicates kubelet --disableexcludes=kubernetes

# Last metadata expiration check: 0:12:08 ago on Thu 22 Jan 2026 02:34:57 AM KST.

# Installed Packages

# kubelet.aarch64 1.33.7-150500.1.1 @kubernetes

# Available Packages

# kubelet.aarch64 1.34.0-150500.1.1 kubernetes

# kubelet.ppc64le 1.34.0-150500.1.1 kubernetes

# kubelet.s390x 1.34.0-150500.1.1 kubernetes

# kubelet.src 1.34.0-150500.1.1 kubernetes

# kubelet.x86_64 1.34.0-150500.1.1 kubernetes

# kubelet.aarch64 1.34.1-150500.1.1 kubernetes

# kubelet.ppc64le 1.34.1-150500.1.1 kubernetes

# kubelet.s390x 1.34.1-150500.1.1 kubernetes

# kubelet.src 1.34.1-150500.1.1 kubernetes

# kubelet.x86_64 1.34.1-150500.1.1 kubernetes

# kubelet.aarch64 1.34.2-150500.1.1 kubernetes

# kubelet.ppc64le 1.34.2-150500.1.1 kubernetes

# kubelet.s390x 1.34.2-150500.1.1 kubernetes

# kubelet.src 1.34.2-150500.1.1 kubernetes

# kubelet.x86_64 1.34.2-150500.1.1 kubernetes

# kubelet.aarch64 1.34.3-150500.1.1 kubernetes

# kubelet.ppc64le 1.34.3-150500.1.1 kubernetes

# kubelet.s390x 1.34.3-150500.1.1 kubernetes

# kubelet.src 1.34.3-150500.1.1 kubernetes

# kubelet.x86_64 1.34.3-150500.1.1 kubernetes

dnf list --showduplicates kubectl --disableexcludes=kubernetes

# Last metadata expiration check: 0:12:24 ago on Thu 22 Jan 2026 02:34:57 AM KST.

# Installed Packages

# kubectl.aarch64 1.33.7-150500.1.1 @kubernetes

# Available Packages

# kubectl.aarch64 1.34.0-150500.1.1 kubernetes

# kubectl.ppc64le 1.34.0-150500.1.1 kubernetes

# kubectl.s390x 1.34.0-150500.1.1 kubernetes

# kubectl.src 1.34.0-150500.1.1 kubernetes

# kubectl.x86_64 1.34.0-150500.1.1 kubernetes

# kubectl.aarch64 1.34.1-150500.1.1 kubernetes

# kubectl.ppc64le 1.34.1-150500.1.1 kubernetes

# kubectl.s390x 1.34.1-150500.1.1 kubernetes

# kubectl.src 1.34.1-150500.1.1 kubernetes

# kubectl.x86_64 1.34.1-150500.1.1 kubernetes

# kubectl.aarch64 1.34.2-150500.1.1 kubernetes

# kubectl.ppc64le 1.34.2-150500.1.1 kubernetes

# kubectl.s390x 1.34.2-150500.1.1 kubernetes

# kubectl.src 1.34.2-150500.1.1 kubernetes

# kubectl.x86_64 1.34.2-150500.1.1 kubernetes

# kubectl.aarch64 1.34.3-150500.1.1 kubernetes

# kubectl.ppc64le 1.34.3-150500.1.1 kubernetes

# kubectl.s390x 1.34.3-150500.1.1 kubernetes

# kubectl.src 1.34.3-150500.1.1 kubernetes

# kubectl.x86_64 1.34.3-150500.1.1 kubernetes
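Following the earlier recommendation to move to the latest patch of the target minor, the full package version string can be pulled from the list above rather than typed by hand. The sketch below uses a hypothetical helper, `latest_patch`. It assumes GNU `sort -V`, which is available on Rocky Linux, and the `name.arch version repo` layout of `dnf list --showduplicates`.

```shell
# Hypothetical helper: from `dnf list --showduplicates` output on stdin,
# print the highest package version for the given minor (e.g. 1.34).
latest_patch() {
  local minor="$1"
  # The trailing "." stops 1.34 from also matching 1.340.x
  awk -v m="$minor" 'index($2, m ".") == 1 { print $2 }' | sort -V | tail -n 1
}

# Usage:
#   v=$(dnf list --showduplicates kubelet --disableexcludes=kubernetes | latest_patch 1.34)
#   dnf install -y --disableexcludes=kubernetes "kubelet-$v" "kubectl-$v"
```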

# Install the upgrade

dnf install -y --disableexcludes=kubernetes kubelet-1.34.3-150500.1.1 kubectl-1.34.3-150500.1.1

# Upgrading:

# kubectl aarch64 1.33.7-150500.1.1 kubernetes 9.7 M

# kubelet aarch64 1.33.7-150500.1.1 kubernetes 13 M

# Verify the installed binaries

which kubectl && kubectl version --client=true

# /usr/bin/kubectl

# Client Version: v1.34.3

# Kustomize Version: v5.7.1

which kubelet && kubelet --version

# /usr/bin/kubelet

# Kubernetes v1.34.3

# Restart

systemctl daemon-reload # reloads unit files; systemctl daemon-reexec would instead re-execute the systemd manager itself

systemctl restart kubelet # the k8s API is unreachable for roughly 10 seconds
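Because the API briefly drops out after the kubelet restart, commands run immediately afterwards may fail. A small retry wrapper avoids guessing at a `sleep`. The `retry` function below is a hypothetical sketch, not part of any tool used in this post. The usage line assumes that polling the API server's `/readyz` endpoint with `kubectl get --raw` is an acceptable readiness signal.

```shell
# Hypothetical helper: run a command up to <attempts> times,
# sleeping <delay> seconds between failed attempts.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0                     # stop as soon as the command succeeds
    (( i < attempts )) && sleep "$delay"
  done
  return 1                               # every attempt failed
}

# Usage: wait up to about a minute for the API server to answer again
#   retry 30 2 kubectl get --raw='/readyz' && kubectl get nodes -o wide
```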

# Verify

kubectl get nodes -o wide

# NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr Ready control-plane 132m v1.34.3 192.168.10.100 <none> Rocky Linux 10.1 (Red Quartz) 6.12.0-124.27.1.el10_1.aarch64 containerd://2.1.5

# k8s-w1 Ready <none> 131m v1.32.11 192.168.10.101 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5

# k8s-w2 Ready <none> 130m v1.32.11 192.168.10.102 <none> Rocky Linux 10.0 (Red Quartz) 6.12.0-55.39.1.el10_0.aarch64 containerd://2.1.5
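At this point the control plane is on v1.34.3, while the workers are still on v1.32.11. Per the skew policy summarized at the start of this post, a kubelet may not be newer than kube-apiserver and may lag by at most 3 minors (2 if the kubelet is older than 1.25). The sketch below encodes only that kubelet rule. `kubelet_skew_ok` is a hypothetical helper, and it ignores the narrower limits that apply when HA API servers run mixed versions.

```shell
# Hypothetical helper: succeed if a kubelet version is within the allowed
# skew of the kube-apiserver version (single apiserver version assumed).
kubelet_skew_ok() {
  local a_maj a_min k_maj k_min max=3
  IFS=. read -r a_maj a_min _ <<< "${1#v}"   # "v1.34.3" -> 1 34
  IFS=. read -r k_maj k_min _ <<< "${2#v}"
  [ "$a_maj" = "$k_maj" ] || return 1        # only same-major comparisons
  (( k_min < 25 )) && max=2                  # kubelet < 1.25: at most 2 minors behind
  (( k_min <= a_min && a_min - k_min <= max ))   # never newer, at most <max> older
}

# Usage (apiserver version taken from the output above):
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' |
#     while read -r node ver; do
#       kubelet_skew_ok v1.34.3 "$ver" || echo "skew violation: $node $ver"
#     done
```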

kc describe node k8s-ctr

kubectl describe node k8s-ctr | grep 'Kubelet Version:'

# Kubelet Version: v1.34.3

Updating the admin kubeconfig

ls -l ~/.kube/config

# -rw-------. 1 root root 5637 Jan 22 00:58 /root/.kube/config

#

ls -l /etc/kubernetes/admin.conf

# -rw-------. 1 root root 5638 Jan 22 02:43 /etc/kubernetes/admin.conf
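The `yes | cp` step that follows overwrites `~/.kube/config`, including the "HomeLab" context name set earlier. If you prefer to keep the previous file, the sketch below is a slightly more cautious variant. `refresh_kubeconfig` is a hypothetical helper. It assumes that a timestamped backup next to the original is acceptable, and it creates the new file with mode 600, owned by the user who runs it.

```shell
# Hypothetical helper: back up the current kubeconfig, then replace it with admin.conf.
refresh_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" dst="${2:-$HOME/.kube/config}"
  # Nothing to do when the files are already identical
  if [ -f "$dst" ] && cmp -s "$src" "$dst"; then return 0; fi
  mkdir -p "$(dirname "$dst")"
  # Keep the old file (e.g. with a renamed context) as config.bak.<timestamp>
  [ -f "$dst" ] && cp -p "$dst" "$dst.bak.$(date +%Y%m%d%H%M%S)"
  install -m 600 "$src" "$dst"           # copy with owner-only permissions
}

# Usage (then rename the context again if needed):
#   refresh_kubeconfig && kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab"
```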

# Update the admin kubeconfig

yes | cp /etc/kubernetes/admin.conf ~/.kube/config ; echo

chown $(id -u):$(id -g) ~/.kube/config

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab"

kubens default

7. Worker node upgrade: v1.32.11 → v1.33.11 ⇒ v1.34.3

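- Check whether any pods or PV/PVCs are tied to a specific worker node, and take action if needed.

- If app pods that rely on quorum run on the worker nodes, be careful about working on several worker nodes at once.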
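The pre-checks below use `kubectl get pod -A -owide | grep k8s-w1`, which also matches substrings such as a hypothetical `k8s-w10`. kubectl can filter on the server side with `kubectl get pod -A -o wide --field-selector spec.nodeName=k8s-w1`. For filtering saved output locally, the sketch below matches the NODE column exactly. `pods_on_node` is a hypothetical helper. It assumes the default wide layout, where NODE is the third-from-last column. Counting from the end keeps the match correct when RESTARTS looks like `1 (35m ago)`.

```shell
# Hypothetical helper: read `kubectl get pod -A -o wide` output on stdin
# and print namespace/name of pods scheduled on exactly the given node.
pods_on_node() {
  local node="$1"
  # NODE is followed by NOMINATED NODE and READINESS GATES, so it is $(NF-2)
  awk -v n="$node" 'NR > 1 && $(NF-2) == n { print $1 "/" $2 }'
}

# Usage:
#   kubectl get pod -A -o wide | pods_on_node k8s-w1
```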

Pre-checks & adding a PDB

# Prometheus and Grafana pods are running on worker 1 -> when upgrading the worker nodes in step 8, start with worker 2.

kubectl get pod -A -owide | grep k8s-w1

# default webpod-697b545f57-87zr5 1/1 Running 0 82m 10.244.1.5 k8s-w1 <none> <none>

# kube-flannel kube-flannel-ds-wtfzq 1/1 Running 0 59m 192.168.10.101 k8s-w1 <none> <none>

# kube-system kube-proxy-vx4nr 1/1 Running 0 8m44s 192.168.10.101 k8s-w1 <none> <none>

# kube-system metrics-server-5dd7b49d79-lx278 1/1 Running 0 134m 10.244.1.2 k8s-w1 <none> <none>

# monitoring kube-prometheus-stack-grafana-5cb7c586f9-zr7vj 3/3 Running 0 98m 10.244.1.3 k8s-w1 <none> <none>

# monitoring kube-prometheus-stack-prometheus-node-exporter-nghcp 1/1 Running 0 98m 192.168.10.101 k8s-w1 <none> <none>

# monitoring prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 0 98m 10.244.1.4 k8s-w1 <none> <none>

kubectl get pod -A -owide | grep k8s-w2

# default webpod-697b545f57-ngsb7 1/1 Running 0 83m 10.244.2.7 k8s-w2 <none> <none>

# kube-flannel kube-flannel-ds-5kk9q 1/1 Running 0 60m 192.168.10.102 k8s-w2 <none> <none>

# kube-system coredns-66bc5c9577-68fx6 1/1 Running 0 9m34s 10.244.2.9 k8s-w2 <none> <none>

# kube-system kube-proxy-wc4f5 1/1 Running 0 9m28s 192.168.10.102 k8s-w2 <none> <none>

# monitoring alertmanager-kube-prometheus-stack-alertmanager-0 2/2 Running 0 99m 10.244.2.6 k8s-w2 <none> <none>

# monitoring kube-prometheus-stack-kube-state-metrics-7846957b5b-thrsg 1/1 Running 12 (4m36s ago) 99m 10.244.2.4 k8s-w2 <none> <none>

# monitoring kube-prometheus-stack-operator-584f446c98-7hbbc 1/1 Running 0 99m 10.244.2.3 k8s-w2 <none> <none>

# monitoring kube-prometheus-stack-prometheus-node-exporter-779lg 1/1 Running 0

# Check StatefulSets

kubectl get sts -A

# NAMESPACE NAME READY AGE

# monitoring alertmanager-kube-prometheus-stack-alertmanager 1/1 99m

# monitoring prometheus-kube-prometheus-stack-prometheus 1/1 99m
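Both StatefulSets run a single replica (1/1). A PodDisruptionBudget with `minAvailable: 1` on a single-replica workload would refuse every eviction and keep `kubectl drain` retrying, so a PDB fits better on a multi-replica Deployment such as webpod (2 replicas above). The sketch below renders such a manifest. `pdb_manifest` is a hypothetical helper, and the `app=webpod` label is an assumption: check the real labels with `kubectl get deploy webpod --show-labels` first.

```shell
# Hypothetical helper: print a policy/v1 PodDisruptionBudget manifest.
# Args: name, namespace, label selector as key=value, minAvailable
pdb_manifest() {
  local name="$1" ns="$2" key="${3%%=*}" value="${3#*=}" min="$4"
  cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: ${name}
  namespace: ${ns}
spec:
  minAvailable: ${min}
  selector:
    matchLabels:
      ${key}: ${value}
EOF
}

# Usage (label is an assumption, verify it first):
#   pdb_manifest webpod default app=webpod 1 | kubectl apply -f -
```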

# Check PV/PVC