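Introduction

Hello! This is Devlos.

This post summarizes week 6 of the K8S Deploy study hosted by the CloudNet@ community: "Kubespray offline installation and building an air-gapped environment."

In a lab environment (one admin server plus two k8s nodes), we use the kubespray-offline tool to pre-download the required binaries and container images, build an offline package repository (RPM/DEB), a PyPI mirror, and a private container registry, and then deploy a Kubernetes cluster in a fully isolated network.

Starting with the core services an air-gapped network needs (DNS, NTP, package repository, container registry, and so on), the post continues through using a Helm OCI registry and troubleshooting, with the aim of an offline deployment procedure you can apply directly in practice.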

Overview of the Air-Gapped Environment

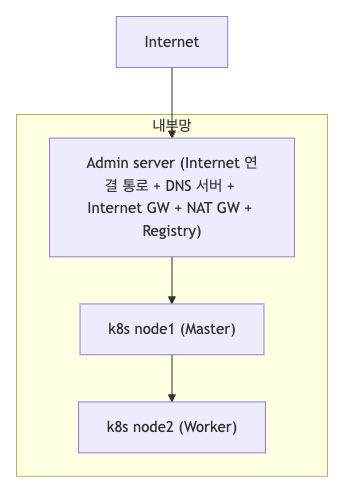

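For the lab, we set up the network used to deploy a Kubernetes cluster in an air-gapped environment.

- Internal network: the closed (air-gapped) network

- Admin Server: central management server that plays several roles

- DNS server: resolves internal domain names

- Internet Gateway: manages external connectivity

- NAT Gateway: network address translation

- Registry: container image registry

- k8s node1 (Master): Kubernetes master node

- k8s node2 (Worker): Kubernetes worker node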

The core services needed to deploy a Kubernetes cluster successfully in an air-gapped environment are:

- Network Gateway (GW, NATGW): network gateway and NAT service

- NTP Server: time synchronization service

- Helm Artifact Repository: Helm chart repository

- Private PyPI Mirror: Python package mirror

- DNS Server: domain name resolution service

- Local (Mirror) YUM/DNF Repository: OS package mirror

- Private Container (Image) Registry: container image registry

Deploying the Air-Gapped Lab Environment

The Vagrantfile expands the admin server's disk to 120GB and defines two k8s-node machines, which act as the control-plane node and the worker node of the Kubernetes cluster.

Vagrantfile

# Base Image https://portal.cloud.hashicorp.com/vagrant/discover/bento/rockylinux-10.0

BOX_IMAGE = "bento/rockylinux-10.0" # "bento/rockylinux-9"

BOX_VERSION = "202510.26.0"

N = 2 # max number of Node

Vagrant.configure("2") do |config|

# Nodes

(1..N).each do |i|

config.vm.define "k8s-node#{i}" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "virtualbox" do |vb|

vb.customize ["modifyvm", :id, "--groups", "/Kubespary-offline-Lab"]

vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]

vb.name = "k8s-node#{i}"

vb.cpus = 4

vb.memory = 2048

vb.linked_clone = true

end

subconfig.vm.host_name = "k8s-node#{i}"

subconfig.vm.network "private_network", ip: "192.168.10.1#{i}"

subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "init_cfg.sh" , args: [ N ]

end

end

# Admin Node

config.vm.define "admin" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "virtualbox" do |vb|

vb.customize ["modifyvm", :id, "--groups", "/Kubespary-offline-Lab"]

vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]

vb.name = "admin"

vb.cpus = 4

vb.memory = 2048

vb.linked_clone = true

end

subconfig.vm.host_name = "admin"

subconfig.vm.network "private_network", ip: "192.168.10.10"

subconfig.vm.network "forwarded_port", guest: 22, host: "60000", auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.disk :disk, size: "120GB", primary: true # https://developer.hashicorp.com/vagrant/docs/disks/usage

subconfig.vm.provision "shell", path: "admin.sh" , args: [ N ]

end

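end

admin.sh is the initial setup script that configures the Admin server for the air-gapped environment.

Its main functions are:

- Basic system settings: set the time zone, disable firewalld, set SELinux to permissive

- Network configuration: local DNS via the hosts file, enable IP forwarding, routing setup

- SSH environment: generate and distribute SSH keys, enable password authentication

- Required packages: Python, Git, Helm, K9s, and other Kubernetes management tools

- Disk expansion: automatically grow the system disk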
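The hosts-file step of this script (TASK 3) follows a simple pattern: one fixed entry for the admin server plus one entry per node, derived from the node count passed as the first argument. A minimal sketch of that pattern, assuming the lab's 192.168.10.x addressing; `gen_hosts_entries` is a hypothetical helper name that only prints the entries and does not modify `/etc/hosts`:

```shell
#!/usr/bin/env bash
# Sketch: print the /etc/hosts entries that the setup scripts append.
# gen_hosts_entries is a hypothetical helper; addresses follow the lab layout.
gen_hosts_entries() {
  local node_count="$1"
  local i
  echo "192.168.10.10 admin"
  for (( i = 1; i <= node_count; i++ )); do
    echo "192.168.10.1${i} k8s-node${i}"
  done
}

# Preview the entries for the two-node lab without touching /etc/hosts.
gen_hosts_entries 2
```

Printing first and appending later (for example `gen_hosts_entries 2 >> /etc/hosts`) makes it easy to review the result before changing the system.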

admin.sh

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Change Timezone and Enable NTP"

timedatectl set-local-rtc 0 # keep the hardware clock (RTC) in UTC

timedatectl set-timezone Asia/Seoul # set the system time zone to Asia/Seoul

echo "[TASK 2] Disable firewalld and selinux"

systemctl disable --now firewalld >/dev/null 2>&1 # stop and disable firewalld

setenforce 0 # switch SELinux to permissive mode (until reboot)

sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config # make permissive mode persistent

echo "[TASK 3] Setting Local DNS Using Hosts file"

sed -i '/^127\.0\.\(1\|2\)\.1/d' /etc/hosts # remove the 127.0.1.1 / 127.0.2.1 entries from the hosts file

echo "192.168.10.10 admin" >> /etc/hosts # register the admin server

for (( i=1; i<=$1; i++ )); do echo "192.168.10.1$i k8s-node$i" >> /etc/hosts; done # register each k8s node

echo "[TASK 4] Delete default routing - enp0s9 NIC" # requires setenforce 0

nmcli connection modify enp0s9 ipv4.never-default yes # never use enp0s9 for the default route

nmcli connection up enp0s9 >/dev/null 2>&1 # re-activate enp0s9 to apply the change

echo "[TASK 5] Config net.ipv4.ip_forward"

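# enable IP forwarding (the comment is kept outside the heredoc so the file holds only the setting)

cat << EOF > /etc/sysctl.d/99-ipforward.conf

net.ipv4.ip_forward = 1

EOF

sysctl --system >/dev/null 2>&1 # reapply all sysctl settings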

echo "[TASK 6] Install packages"

dnf install -y python3-pip git sshpass cloud-utils-growpart >/dev/null 2>&1 # install required packages

echo "[TASK 7] Install Helm"

curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | DESIRED_VERSION=v3.20.0 bash >/dev/null 2>&1 # install Helm

echo "[TASK 8] Increase Disk Size"

growpart /dev/sda 3 >/dev/null 2>&1 # grow partition /dev/sda3

xfs_growfs /dev/sda3 >/dev/null 2>&1 # grow the XFS filesystem

echo "[TASK 9] Setting SSHD"

echo "root:qwe123" | chpasswd # set the root password

cat << EOF >> /etc/ssh/sshd_config # allow root login and password authentication over SSH

PermitRootLogin yes

PasswordAuthentication yes

EOF

systemctl restart sshd >/dev/null 2>&1 # restart the SSH server

echo "[TASK 10] Setting SSH Key"

ssh-keygen -t rsa -N "" -f /root/.ssh/id_rsa >/dev/null 2>&1 # generate an SSH key for root

sshpass -p 'qwe123' ssh-copy-id -o StrictHostKeyChecking=no root@192.168.10.10 >/dev/null 2>&1 # register the key on the admin server itself

ssh -o StrictHostKeyChecking=no root@admin hostname >/dev/null 2>&1 # (optional) verify key-based login to admin

for (( i=1; i<=$1; i++ )); do sshpass -p 'qwe123' ssh-copy-id -o StrictHostKeyChecking=no root@192.168.10.1$i >/dev/null 2>&1 ; done # copy the SSH key to each k8s node

for (( i=1; i<=$1; i++ )); do sshpass -p 'qwe123' ssh -o StrictHostKeyChecking=no root@k8s-node$i hostname >/dev/null 2>&1 ; done # test SSH access to each k8s node

echo "[TASK 11] Install K9s"

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi # pick the binary for the CPU architecture

wget -P /tmp https://github.com/derailed/k9s/releases/latest/download/k9s_linux_${CLI_ARCH}.tar.gz >/dev/null 2>&1 # download the K9s release archive

tar -xzf /tmp/k9s_linux_${CLI_ARCH}.tar.gz -C /tmp # extract the archive

chown root:root /tmp/k9s # set the owner

mv /tmp/k9s /usr/local/bin/ # move it into the executable path

chmod +x /usr/local/bin/k9s # make it executable

echo "[TASK 12] ETC"

echo "sudo su -" >> /home/vagrant/.bashrc # switch to root automatically when the vagrant user logs in

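echo ">>>> Initial Config End <<<<"

What init_cfg.sh does

init_cfg.sh is the initial setup script for the Kubernetes nodes (master and worker).

- Basic system settings: set the time zone, disable firewalld, set SELinux to permissive

- Kubernetes preparation: disable swap, load kernel modules, configure networking parameters

- Network configuration: local DNS via the hosts file, remove the default route

- SSH environment: allow SSH access and enable password authentication

- Required packages: install basic tools such as Python and Git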
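The kernel-module and sysctl step deserves a closer look, because the documented comment form for files under `/etc/modules-load.d` and `/etc/sysctl.d` is a whole line starting with `#`; explanatory text appended after a value or module name is not part of that format. A minimal sketch that writes both files with comments on their own lines. The function name `write_k8s_kernel_conf` and its root-prefix argument are assumptions added here so the result can be inspected in a scratch directory before touching the real `/etc`:

```shell
#!/usr/bin/env bash
# Sketch: write the module list and sysctl settings under a root prefix.
# write_k8s_kernel_conf and its prefix argument are hypothetical;
# pass an empty string to target the real /etc.
write_k8s_kernel_conf() {
  local root="${1:-}"
  mkdir -p "${root}/etc/modules-load.d" "${root}/etc/sysctl.d"

  # One module name per line; comments sit on their own lines.
  cat > "${root}/etc/modules-load.d/k8s.conf" <<'EOF'
# overlay filesystem, bridge netfilter, VXLAN tunnelling
overlay
br_netfilter
vxlan
EOF

  cat > "${root}/etc/sysctl.d/k8s.conf" <<'EOF'
# pass bridged traffic through iptables/ip6tables and enable routing
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# Dry run into a scratch directory and show the generated module list.
scratch="$(mktemp -d)"
write_k8s_kernel_conf "$scratch"
cat "$scratch/etc/modules-load.d/k8s.conf"
```

On a real node the files would still need `modprobe` and `sysctl --system` afterwards, as in the script itself.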

init_cfg.sh

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Change Timezone and Enable NTP"

timedatectl set-local-rtc 0 # keep the hardware clock (RTC) in UTC

timedatectl set-timezone Asia/Seoul # set the time zone to Asia/Seoul

echo "[TASK 2] Disable firewalld and selinux"

systemctl disable --now firewalld >/dev/null 2>&1 # stop and disable the firewall

setenforce 0 # switch SELinux to permissive mode (until reboot)

sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config # make permissive mode persistent

echo "[TASK 3] Disable and turn off SWAP & Delete swap partitions"

swapoff -a # turn off all swap (Kubernetes requirement)

sed -i '/swap/d' /etc/fstab # remove swap entries from fstab

sfdisk --delete /dev/sda 2 >/dev/null 2>&1 # delete the swap partition

partprobe /dev/sda >/dev/null 2>&1 # re-read the partition table

echo "[TASK 4] Config kernel & module"

# Kernel modules required by Kubernetes (comments stay outside the heredoc so each line is just a module name)

# overlay: container overlay filesystem, br_netfilter: bridge traffic filtering, vxlan: VXLAN tunnelling (for the CNI plugin)

cat << EOF > /etc/modules-load.d/k8s.conf

overlay

br_netfilter

vxlan

EOF

modprobe overlay >/dev/null 2>&1 # load the overlay module now

modprobe br_netfilter >/dev/null 2>&1 # load the br_netfilter module now

# Kernel parameters for Kubernetes networking: pass bridged IPv4/IPv6 traffic through iptables/ip6tables and enable IP forwarding

cat << EOF >/etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system >/dev/null 2>&1 # apply the kernel parameters

echo "[TASK 5] Setting Local DNS Using Hosts file"

sed -i '/^127\.0\.\(1\|2\)\.1/d' /etc/hosts # remove the 127.0.1.1 / 127.0.2.1 entries

echo "192.168.10.10 admin" >> /etc/hosts # add the admin server

# Add the k8s-node hosts dynamically ($1 is the number of nodes)

for (( i=1; i<=$1; i++ )); do echo "192.168.10.1$i k8s-node$i" >> /etc/hosts; done

echo "[TASK 6] Delete default routing - enp0s9 NIC" # requires setenforce 0

nmcli connection modify enp0s9 ipv4.never-default yes # never use enp0s9 for the default route

nmcli connection up enp0s9 >/dev/null 2>&1 # re-activate the connection to apply the change

echo "[TASK 7] Setting SSHD"

echo "root:qwe123" | chpasswd # set the root password

# Allow root login and password authentication over SSH

cat << EOF >> /etc/ssh/sshd_config

PermitRootLogin yes

PasswordAuthentication yes

EOF

systemctl restart sshd >/dev/null 2>&1 # restart the SSH service

echo "[TASK 8] Install packages"

dnf install -y python3-pip git >/dev/null 2>&1 # install pip and Git

echo "[TASK 9] ETC"

echo "sudo su -" >> /home/vagrant/.bashrc # switch to root automatically when the vagrant user logs in

echo ">>>> Initial Config End <<<<"

The lab is deployed with the following commands.

mkdir k8s-offline

cd k8s-offline

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-kubespary-offline/Vagrantfile

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-kubespary-offline/admin.sh

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-kubespary-offline/init_cfg.sh

vagrant up

vagrant status

# Connect to admin, k8s-node1, and k8s-node2: if the host OS has no sshpass, connect with plain ssh as root and enter the password qwe123

sshpass -p 'qwe123' ssh root@192.168.10.10 # ssh root@192.168.10.10

sshpass -p 'qwe123' ssh root@192.168.10.11 # ssh root@192.168.10.11

sshpass -p 'qwe123' ssh root@192.168.10.12 # ssh root@192.168.10.12

Network Gateway (IGW, NATGW)

################################################################

# [k8s-node] Basic network setup: bring enp0s8 down, add a default route on enp0s9 → then check external connectivity

################################################################

nmcli connection down enp0s8

nmcli connection modify enp0s8 connection.autoconnect no

nmcli connection modify enp0s9 +ipv4.routes "0.0.0.0/0 192.168.10.10 200"

nmcli connection up enp0s9

# Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/7)

ip route

# k8s-node1:~# ip route

# default via 192.168.10.10 dev enp0s9 proto static metric 200

# 192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.11 metric 100

# k8s-node2:~# ip route

# default via 192.168.10.10 dev enp0s9 proto static metric 200

# 192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.12 metric 100
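To confirm on each node that the default route now points at the admin server, the gateway can be pulled out of the `ip route` output instead of reading it by eye. A minimal sketch; `default_gw` is a hypothetical helper name, and the sample text is the output shown above:

```shell
#!/usr/bin/env bash
# Sketch: extract the default gateway from `ip route` output read on stdin.
# default_gw is a hypothetical helper name.
default_gw() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# On a node: ip route | default_gw
# Here the sample output from the lab is fed in instead.
sample='default via 192.168.10.10 dev enp0s9 proto static metric 200
192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.11 metric 100'
printf '%s\n' "$sample" | default_gw
```

An empty result means the node has no default route at all, which is the state right after enp0s8 is brought down and before the static route is added.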

################################################################

# [k8s-node] Cut off external connectivity

################################################################

# Bring the enp0s8 connection down: external connectivity stops immediately

nmcli connection down enp0s8

# Check enp0s8: its IP address is removed and the external route is gone as well

cat /etc/NetworkManager/system-connections/enp0s8.nmconnection

ip addr show enp0s8

# 2: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# link/ether 08:00:27:90:ea:eb brd ff:ff:ff:ff:ff:ff

# altname enx08002790eaeb

ip route

# k8s-node1:~# ip route

# default via 192.168.10.10 dev enp0s9 proto static metric 200

# 192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.11 metric 100

# k8s-node2:~# ip route

# 192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.12 metric 100

# 192.168.1

# To keep the connection down after a reboot as well

nmcli connection modify enp0s8 connection.autoconnect no

cat /etc/NetworkManager/system-connections/enp0s8.nmconnection

[connection]

id=enp0s8

uuid=7f94e839-e070-4bfe-9330-07090381d89f

type=ethernet

autoconnect=false

...

# Add a default route on enp0s9 for external traffic, with metric 200

nmcli connection modify enp0s9 +ipv4.routes "0.0.0.0/0 192.168.10.10 200"

cat /etc/NetworkManager/system-connections/enp0s9.nmconnection

...

[ipv4]

address1=192.168.10.11/24

method=manual

never-default=true # nmcli connection modify enp0s9 ipv4.never-default yes

route1=0.0.0.0/0,192.168.10.10

...

# Apply the settings

nmcli connection up enp0s9

# Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/8)

ip route

# k8s-node1:~# ip route

# default via 192.168.10.10 dev enp0s9 proto static metric 200

# 192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.11 metric 100

# k8s-node2:~# ip route

# default via 192.168.10.10 dev enp0s9 proto static metric 200

# 192.168.10.0/24 dev enp0s9 proto kernel scope link src 192.168.10.12 metric 100

# Check external connectivity

ping -w 1 -W 1 8.8.8.8

# PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

# --- 8.8.8.8 ping statistics ---

# 1 packets transmitted, 0 received, 100% packet loss, time 0ms

curl www.google.com

# curl: (6) Could not resolve host: www.google.com

# Check the DNS nameserver settings

cat /etc/resolv.conf

# Generated by NetworkManager

cat << EOF > /etc/resolv.conf

nameserver 168.126.63.1

nameserver 8.8.8.8

EOF

curl www.google.com

# Even with nameservers set explicitly, there is still no connectivity

# (Reference) To remove the default route

# nmcli connection modify enp0s9 -ipv4.routes "0.0.0.0/0 192.168.10.10 200"

# nmcli connection up enp0s9

# ip route

################################################################

# [admin] Configure the Admin server as the NAT gateway

################################################################

# IP forwarding: already enabled by admin.sh

sysctl -w net.ipv4.ip_forward=1 # sysctl net.ipv4.ip_forward

cat <<EOF | tee /etc/sysctl.d/99-ipforward.conf

net.ipv4.ip_forward = 1

EOF

sysctl --system

# NAT rule

iptables -t nat -A POSTROUTING -o enp0s8 -j MASQUERADE

# Once the NAT rule is in place, the k8s nodes can reach external networks

iptables -t nat -S

# -P PREROUTING ACCEPT

# -P INPUT ACCEPT

# -P OUTPUT ACCEPT

# -P POSTROUTING ACCEPT

# -A POSTROUTING -o enp0s8 -j MASQUERADE

iptables -t nat -L -n -v

...

Chain POSTROUTING (policy ACCEPT 1 packets, 120 bytes)

pkts bytes target prot opt in out source destination

2 168 MASQUERADE all -- * enp0s8 0.0.0.0/0 0.0.0.0/0

################################

# [admin] ⇒ Remove the iptables NAT rule again so the internal k8s nodes lose internet access

################################

iptables -t nat -D POSTROUTING -o enp0s8 -j MASQUERADE
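Because the lab switches NAT on and off several times, it helps if both directions are safe to repeat: `-A` appends a new copy of the rule on every call, and `-D` removes only one copy. A minimal sketch using `iptables -C` (check whether a rule exists) to make both operations idempotent. The helper names `nat_on` and `nat_off` are assumptions; `enp0s8` is the admin server's external interface as used above:

```shell
#!/usr/bin/env bash
# Sketch: add or remove the MASQUERADE rule only when needed.
# nat_on / nat_off are hypothetical helper names; run them as root on admin.
nat_rule=(POSTROUTING -o enp0s8 -j MASQUERADE)

nat_on() {
  # -C checks whether the rule already exists, so repeated calls do not stack duplicates.
  iptables -t nat -C "${nat_rule[@]}" 2>/dev/null || iptables -t nat -A "${nat_rule[@]}"
}

nat_off() {
  # Delete every copy of the rule; stop when none is left or a delete fails.
  while iptables -t nat -C "${nat_rule[@]}" 2>/dev/null; do
    iptables -t nat -D "${nat_rule[@]}" || break
  done
}
```

Usage would be `nat_on` before downloading artifacts and `nat_off` to return to the isolated state; `iptables -t nat -S` shows the result either way.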

################################################################

# [admin] ⇒ NTP server setup

################################################################

# Check the current NTP time-sync configuration and status

systemctl status chronyd.service --no-pager

# grep "^[^#]" /etc/chrony.conf

# ● chronyd.service - NTP client/server

# Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; preset: enabled)

# Active: active (running) since Wed 2026-02-11 22:03:12 KST; 31min ago

# Invocation: d06309e9517b40fc92a5233b01e45663

# Docs: man:chronyd(8)

grep "^[^#]" /etc/chrony.conf

pool 2.rocky.pool.ntp.org iburst # Upstream pool to fetch time from. iburst sends a burst of 4-8 requests right after startup so the first synchronization completes sooner.

sourcedir /run/chrony-dhcp # Directory holding NTP server entries received from the DHCP server, so servers can be added dynamically depending on the network.

driftfile /var/lib/chrony/drift # File recording how much the system clock (crystal oscillator) drifts from true time. chrony uses this record to compensate even when the network is down.

makestep 1.0 3 # Step the clock instead of slewing it gradually when the offset is too large: if the offset exceeds 1 second, correct it immediately, but only during the first 3 clock updates.

rtcsync # Periodically copy the synchronized system time to the hardware clock (the motherboard RTC), so the time stays accurate across reboots.

ntsdumpdir /var/lib/chrony # Where NTS (Network Time Security) key data is stored (for secure connections).

logdir /var/log/chrony

# Shows which NTP servers chrony knows about and which one it currently synchronizes to.

chronyc sources -v

dig +short 2.rocky.pool.ntp.org

# chrony configuration

cp /etc/chrony.conf /etc/chrony.bak

cat << EOF > /etc/chrony.conf

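# External public NTP servers (global pool and Korean pool)

server pool.ntp.org iburst

server kr.pool.ntp.org iburst

# Allow hosts on the internal network (192.168.10.0/24) to synchronize time from this server

allow 192.168.10.0/24

# Keep serving time to the internal network from the local clock even when the upstream link is down (optional)

local stratum 10

# Logging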

logdir /var/log/chrony

EOF

systemctl restart chronyd.service

systemctl status chronyd.service --no-pager

# Check status

timedatectl status

# Local time: Wed 2026-02-11 22:36:16 KST

# Universal time: Wed 2026-02-11 13:36:16 UTC

# RTC time: Wed 2026-02-11 14:09:31

# Time zone: Asia/Seoul (KST, +0900)

# System clock synchronized: yes

# NTP service: active

# RTC in local TZ: no

chronyc sources -v

################################################################

# [k8s-node] NTP client setup

################################################################

# Check status

timedatectl status

chronyc sources -v

# chrony configuration

cp /etc/chrony.conf /etc/chrony.bak

cat << EOF > /etc/chrony.conf

server 192.168.10.10 iburst

logdir /var/log/chrony

EOF

systemctl restart chronyd.service

systemctl status chronyd.service --no-pager

# ● chronyd.service - NTP client/server

# Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; preset: enabled)

# Active: active (running) since Wed 2026-02-11 22:37:57 KST; 45ms ago

# Invocation: 39c962f585994a78b5e708a3461a917f

# Docs: man:chronyd(8)

# man:chrony.conf(5)

# Process: 5754 ExecStart=/usr/sbin/chronyd $OPTIONS (code=exited, status=0/SUCCESS)

# Main PID: 5756 (chronyd)

# Tasks: 1 (limit: 12336)

# Memory: 992K (peak: 2.8M)

# CPU: 23ms

# CGroup: /system.slice/chronyd.service

# └─5756 /usr/sbin/chronyd -F 2

# Check status

timedatectl status

# Local time: Wed 2026-02-11 22:38:19 KST

# Universal time: Wed 2026-02-11 13:38:19 UTC

# RTC time: Wed 2026-02-11 14:11:34

# Time zone: Asia/Seoul (KST, +0900)

# System clock synchronized: no

# NTP service: active

# RTC in local TZ: no

chronyc sources -v

# MS Name/IP address Stratum Poll Reach LastRx Last sample

# ===============================================================================

# ^* admin 0 7 0 - +0ns[ +0ns] +/- 0ns

# [admin] List the clients that use this server as their NTP server

chronyc clients

# Hostname NTP Drop Int IntL Last Cmd Drop Int Last

# ===============================================================================

# k8s-node1 3 0 1 - 1 0 0 - -

# k8s-node2 2 0 1 - 0 0 0 - -
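Note that the `timedatectl status` output captured right after restarting chronyd still shows `System clock synchronized: no`; the first synchronization usually takes a few seconds. A minimal sketch of a check that can be re-run (or used in a retry loop) until the clock reports as synchronized. `clock_synced` is a hypothetical helper name that reads `timedatectl status` output from stdin:

```shell
#!/usr/bin/env bash
# Sketch: succeed only if `timedatectl status` output (on stdin) reports a synchronized clock.
# clock_synced is a hypothetical helper name.
clock_synced() {
  grep -Eq '^[[:space:]]*System clock synchronized:[[:space:]]*yes[[:space:]]*$'
}

# On a node: timedatectl status | clock_synced && echo "synced"
# Here a sample line from the lab output is fed in instead.
printf 'System clock synchronized: no\n' | clock_synced || echo "not synchronized yet"
```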

################################################################

# [admin] DNS server (bind) setup

################################################################

# Install bind

dnf install -y bind bind-utils

# Configure named.conf

cp /etc/named.conf /etc/named.bak

cat <<EOF > /etc/named.conf

options {

listen-on port 53 { any; };

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

secroots-file "/var/named/data/named.secroots";

recursing-file "/var/named/data/named.recursing";

allow-query { 127.0.0.1; 192.168.10.0/24; };

allow-recursion { 127.0.0.1; 192.168.10.0/24; };

forwarders {

168.126.63.1;

8.8.8.8;

};

recursion yes;

dnssec-validation auto; # https://sirzzang.github.io/kubernetes/Kubernetes-Kubespray-08-01-06/#troubleshooting-dnssec-%EA%B2%80%EC%A6%9D-%EC%8B%A4%ED%8C%A8

managed-keys-directory "/var/named/dynamic";

geoip-directory "/usr/share/GeoIP";

pid-file "/run/named/named.pid";

session-keyfile "/run/named/session.key";

include "/etc/crypto-policies/back-ends/bind.config";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

EOF

# Check for syntax errors (no output means the file is valid)

named-checkconf /etc/named.conf

# Enable and start the service

systemctl enable --now named

# Point the admin server's own resolver at itself

cat /etc/resolv.conf

# search Davolink

# nameserver 203.248.252.2

# nameserver 164.124.101.2

echo "nameserver 192.168.10.10" > /etc/resolv.conf

# Verify

dig +short google.com @192.168.10.10

dig +short google.com

################################

# [k8s-nodes] DNS client setup: stop NetworkManager from managing DNS

################################

# Stop NetworkManager from managing DNS

cat /etc/NetworkManager/conf.d/99-dns-none.conf

cat << EOF > /etc/NetworkManager/conf.d/99-dns-none.conf

[main]

dns=none

EOF

systemctl restart NetworkManager

# Set the DNS server

echo "nameserver 192.168.10.10" > /etc/resolv.conf

# Verify

dig +short google.com @192.168.10.10 # name resolution works even though the node itself has no internet access

# 142.250.183.110

dig +short google.com

# 142.250.183.110
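The DNS client change consists of two files: a NetworkManager drop-in with `dns=none`, which stops NetworkManager from regenerating `/etc/resolv.conf`, and the `resolv.conf` itself. A minimal sketch that writes both under a root prefix so the result can be checked in a scratch directory first. The function name `set_dns_client` and its arguments are assumptions, not part of the original lab:

```shell
#!/usr/bin/env bash
# Sketch: write the DNS client settings under a root prefix.
# set_dns_client and its arguments are hypothetical; use prefix "" on a real node.
set_dns_client() {
  local root="$1" dns_ip="$2"
  mkdir -p "${root}/etc/NetworkManager/conf.d"
  # dns=none stops NetworkManager from rewriting /etc/resolv.conf.
  printf '[main]\ndns=none\n' > "${root}/etc/NetworkManager/conf.d/99-dns-none.conf"
  printf 'nameserver %s\n' "$dns_ip" > "${root}/etc/resolv.conf"
}

# Dry run into a scratch directory.
scratch="$(mktemp -d)"
set_dns_client "$scratch" 192.168.10.10
cat "$scratch/etc/resolv.conf"
```

On a real node, NetworkManager still has to be restarted after the drop-in is written, as in the commands above, before the `dig` checks are meaningful.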

################################################################

# Local (Mirror) YUM/DNF Repository - optional, because kubespray's offline mode already provides this

################################################################

################################

# [admin] Linux package repository, about 12 minutes in total: reposync + createrepo + nginx

################################

# Install packages

dnf install -y dnf-plugins-core createrepo nginx

# Sync the repositories (reposync): copy the packages of the upstream repos (BaseOS, AppStream, etc.) into a local directory.

## Create the mirror directory

mkdir -p /data/repos/rocky/10

cd /data/repos/rocky/10

## Sync BaseOS, AppStream, and Extras

# Check the Rocky 10 repo IDs

dnf repolist

# repo id repo name

# appstream Rocky Linux 10 - AppStream

# baseos Rocky Linux 10 - BaseOS

# extras Rocky Linux 10 - Extras

# Sync specific repos (e.g. baseos, appstream)

# With --download-metadata the upstream metadata is downloaded as well: this is the index that lets dnf recognize the downloaded packages.

## baseos: about 3 minutes; noted as 4.8G, although du below reports 6.2G

dnf reposync --repoid=baseos --download-metadata -p /data/repos/rocky/10 # download to the local directory

# ...

# (1472/1474): zlib-ng-compat-2.2.3-2.el10.aarch64. 2.7 MB/s | 64 kB 00:00

# (1473/1474): zsh-5.9-15.el10.aarch64.rpm 13 MB/s | 3.3 MB 00:00

# (1474/1474): zstd-1.5.5-9.el10.aarch64.rpm 1.7 MB/s | 453 kB 00:00

du -sh /data/repos/rocky/10/baseos/

# 6.2G /data/repos/rocky/10/baseos/

## (Reference) Inspect the metadata: the files that act as the core index of a YUM/DNF repository

ls -l /data/repos/rocky/10/baseos/repodata/

# total 30836

# -rw-r--r--. 1 root root 62360 Feb 11 22:50 0fb567b1f4c5f9bb81eed3bafc9f40b252558508fcd362a7fdd6b5e1f4c85e52-comps-BaseOS.aarch64.xml.xz

# -rw-r--r--. 1 root root 10561544 Feb 11 22:50 1b34bb7357df23636e2683908e763013b4918a245739769642ebf900f077201d-primary.sqlite.xz

# -rw-r--r--. 1 root root 343464 Feb 11 22:50 1de704afeee6d14017ee0a20de05455359ebcb291b70b5fd93020b1f1df76b23-other.sqlite.xz

# -rw-r--r--. 1 root root 1631654 Feb 11 22:50 3a1d90c1347a5d6d179d8a779da81b8326d1ca1a7111cfc4bf51d7cef9f831f5-filelists.xml.gz

# -rw-r--r--. 1 root root 1082008 Feb 11 22:50 62bf9ca6e90e9fbaf53c10eeb8b08b9d8d2cd0d2ebb04d6e488e9526a19c9982-filelists.sqlite.xz

# -rw-r--r--. 1 root root 1624517 Feb 11 22:50 9027e315287283bc4e95bd862e0e09012e4e4aa8e93916708e955731d5462f6d-other.xml.gz

# -rw-r--r--. 1 root root 308363 Feb 11 22:50 a55eb7089d714a338318e5acf8e9ff8a682433816e0608a3e0eeefc230153a0e-comps-BaseOS.aarch64.xml

# -rw-r--r--. 1 root root 111103 Feb 11 22:50 acf4170ee10547bb5dd491406d99b67c32bf7b3d5229928dc3c58aa03b13353a-updateinfo.xml.gz

# -rw-r--r--. 1 root root 15820685 Feb 11 22:50 f49f5be57837217b638f20dbb776b3b1dd5e92daaf3bb0e20c67abf38d2c5e40-primary.xml.gz

# -rw-r--r--. 1 root root 4449 Feb 11 22:50 repomd.xml

cat /data/repos/rocky/10/baseos/repodata/repomd.xml # The repository's master index: it holds the location and checksum of every other metadata file, so it is the first file a client downloads.

# <?xml version="1.0" encoding="UTF-8"?>

# <repomd xmlns="http://linux.duke.edu/metadata/repo" xmlns:rpm="http://linux.duke.edu/metadata/rpm">

# <revision>10</revision>

# <tags>

# <distro cpeid="cpe:/o:rocky:rocky:10">Rocky Linux 10</distro>

# </tags>

# <data type="primary">

# <checksum type="sha256">f49f5be57837217b638f20dbb776b3b1dd5e92daaf3bb0e20c67abf38d2c5e40</checksum>

# <open-checksum type="sha256">82d38dc9ad7c0db87413a65a9a6f6c3ad8a8651cdefcb7828a26e4288af6637c</open-checksum>

# <location href="repodata/f49f5be57837217b638f20dbb776b3b1dd5e92daaf3bb0e20c67abf38d2c5e40-primary.xml.gz"/>

# <timestamp>1770488169</timestamp>

# <size>15820685</size>

# <open-size>115690744</open-size>

# </data>

# <data type="filelists">

# <checksum type="sha256">3a1d90c1347a5d6d179d8a779da81b8326d1ca1a7111cfc4bf51d7cef9f831f5</checksum>

# <open-checksum type="sha256">a67acaa86bad7a0dc7ddd5c80cae5cdf1242d0edfe78c17d7d4854820b961840</open-checksum>

# <location href="repodata/3a1d90c1347a5d6d179d8a779da81b8326d1ca1a7111cfc4bf51d7cef9f831f5-filelists.xml.gz"/>

# <timestamp>1770488169</timestamp>

# <size>1631654</size>

# <open-size>21511816</open-size>

# </data>

# <data type="other">

# <checksum type="sha256">9027e315287283bc4e95bd862e0e09012e4e4aa8e93916708e955731d5462f6d</checksum>

# <open-checksum type="sha256">d97a6faa184813af47dda95dd336b888e18d91b13951655b843b72b6c1149c26</open-checksum>

# <location href="repodata/9027e315287283bc4e95bd862e0e09012e4e4aa8e93916708e955731d5462f6d-other.xml.gz"/>

# <timestamp>1770488169</timestamp>

# <size>1624517</size>

# <open-size>14384037</open-size>

# </data>

# <data type="primary_db">

# <checksum type="sha256">1b34bb7357df23636e2683908e763013b4918a245739769642ebf900f077201d</checksum>

# <open-checksum type="sha256">8f9e7bedd4060846ba2884d6f9b6dc585d70cd1a5177956bef61cb5173376d36</open-checksum>

# <location href="repodata/1b34bb7357df23636e2683908e763013b4918a245739769642ebf900f077201d-primary.sqlite.xz"/>

# <timestamp>1770488221</timestamp>

# <size>10561544</size>

# <open-size>144027648</open-size>

# <database_version>10</database_version>

# </data>

# <data type="filelists_db">

# <checksum type="sha256">62bf9ca6e90e9fbaf53c10eeb8b08b9d8d2cd0d2ebb04d6e488e9526a19c9982</checksum>

# <open-checksum type="sha256">028f71fa1ed528030d4fa4c8956ef60ea1b8a7dccc7ff1648c83d37690492734</open-checksum>

# <location href="repodata/62bf9ca6e90e9fbaf53c10eeb8b08b9d8d2cd0d2ebb04d6e488e9526a19c9982-filelists.sqlite.xz"/>

# <timestamp>1770488174</timestamp>

# <size>1082008</size>

# <open-size>11321344</open-size>

# <database_version>10</database_version>

# </data>

# <data type="other_db">

# <checksum type="sha256">1de704afeee6d14017ee0a20de05455359ebcb291b70b5fd93020b1f1df76b23</checksum>

# <open-checksum type="sha256">f148df792c63a83051b341a259339e0c39b08559e76767ffe61fde958a44030c</open-checksum>

# <location href="repodata/1de704afeee6d14017ee0a20de05455359ebcb291b70b5fd93020b1f1df76b23-other.sqlite.xz"/>

# <timestamp>1770488174</timestamp>

# <size>343464</size>

# <open-size>15183872</open-size>

# <database_version>10</database_version>

# </data>

# <data type="group">

# <checksum type="sha256">a55eb7089d714a338318e5acf8e9ff8a682433816e0608a3e0eeefc230153a0e</checksum>

# <location href="repodata/a55eb7089d714a338318e5acf8e9ff8a682433816e0608a3e0eeefc230153a0e-comps-BaseOS.aarch64.xml"/>

# <timestamp>1770488147</timestamp>

# <size>308363</size>

# </data>

# <data type="group_xz">

# <checksum type="sha256">0fb567b1f4c5f9bb81eed3bafc9f40b252558508fcd362a7fdd6b5e1f4c85e52</checksum>

# <open-checksum type="sha256">a55eb7089d714a338318e5acf8e9ff8a682433816e0608a3e0eeefc230153a0e</open-checksum>

# <location href="repodata/0fb567b1f4c5f9bb81eed3bafc9f40b252558508fcd362a7fdd6b5e1f4c85e52-comps-BaseOS.aarch64.xml.xz"/>

# <timestamp>1770488169</timestamp>

# <size>62360</size>

# <open-size>308363</open-size>

# </data>

# <data type="updateinfo">

# <checksum type="sha256">acf4170ee10547bb5dd491406d99b67c32bf7b3d5229928dc3c58aa03b13353a</checksum>

# <open-checksum type="sha256">6a4ae116304f66077264269fc41f3a68df34958eb19e50faed039fc6e5c630a4</open-checksum>

# <location href="repodata/acf4170ee10547bb5dd491406d99b67c32bf7b3d5229928dc3c58aa03b13353a-updateinfo.xml.gz"/>

# <timestamp>1770488945</timestamp>

# <size>111103</size>

# <open-size>812991</open-size>

# </data>

# </repomd>

## appstream: about 9 minutes, about 13G

dnf reposync --repoid=appstream --download-metadata -p /data/repos/rocky/10 # download to the local directory

# ...

# (5216/5219): zenity-4.0.1-5.el10.aarch64.rpm 6.6 MB/s | 3.3 MB 00:00

# (5217/5219): zram-generator-1.1.2-14.el10.aarch64 4.5 MB/s | 399 kB 00:00

# (5218/5219): zziplib-0.13.78-2.el10.aarch64.rpm 1.5 MB/s | 89 kB 00:00

# (5219/5219): zziplib-utils-0.13.78-2.el10.aarch64 1.8 MB/s | 45 kB 00:00

du -sh /data/repos/rocky/10/appstream/

# 14G /data/repos/rocky/10/appstream/

## extras: finishes quickly, 67M

dnf reposync --repoid=extras --download-metadata -p /data/repos/rocky/10 # download to the local directory

# ...

# (24/26): update-motd-0.1-2.el10.noarch.rpm 197 kB/s | 10 kB 00:00

# (25/26): rpmfusion-free-release-tainted-10-1.noar 136 kB/s | 7.3 kB 00:00

# (26/26): rocky-backgrounds-extras-100.4-7.el10.no 12 MB/s | 63 MB 00:05

du -sh /data/repos/rocky/10/extras/

# 67M /data/repos/rocky/10/extras/
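The three reposync calls above differ only in the repo ID, so they can be driven from one loop. A minimal sketch with a dry-run switch, so the exact commands can be reviewed before a download of this size starts. `sync_repos` and the `DRY_RUN` variable are hypothetical names introduced for illustration; the `dnf reposync` flags are the ones already used above:

```shell
#!/usr/bin/env bash
# Sketch: sync several repos in one loop.
# sync_repos and DRY_RUN are hypothetical names. With DRY_RUN=1 (the default
# in this sketch) the commands are only printed, not executed.
sync_repos() {
  local dest="$1"; shift
  local repo
  for repo in "$@"; do
    local cmd=(dnf reposync --repoid="$repo" --download-metadata -p "$dest")
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}"
    fi
  done
}

# Print the commands for the three Rocky 10 repos used in this lab.
sync_repos /data/repos/rocky/10 baseos appstream extras
```

Running it as `DRY_RUN=0 sync_repos /data/repos/rocky/10 baseos appstream extras` on the admin server would perform the actual sync.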

# Web server for internal distribution (nginx)

cat <<EOF > /etc/nginx/conf.d/repos.conf

server {

listen 80;

server_name repo-server;

location /rocky/10/ {

autoindex on; # show directory listings

autoindex_exact_size off; # show file sizes in readable KB/MB/GB units

autoindex_localtime on; # show times in the server's local time zone

root /data/repos;

}

}

EOF

systemctl enable --now nginx

systemctl status nginx.service --no-pager

ss -tnlp | grep nginx

# LISTEN 0 511 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=6010,fd=6),("nginx",pid=6009,fd=6),("nginx",pid=6008,fd=6),("nginx",pid=6007,fd=6),("nginx",pid=6006,fd=6))

# LISTEN 0 511 [::]:80 [::]:* users:(("nginx",pid=6010,fd=7),("nginx",pid=6009,fd=7),("nginx",pid=6008,fd=7),("nginx",pid=6007,fd=7),("nginx",pid=6006,fd=7))

# Access test

curl http://192.168.10.10/rocky/10/

open http://192.168.10.10/rocky/10/baseos/

# <html>

# <head><title>Index of /rocky/10/baseos/</title></head>

# <body>

# <h1>Index of /rocky/10/baseos/</h1><hr><pre><a href="../">../</a>

# <a href="Packages/">Packages/</a> 11-Feb-2026 22:50 -

# <a href="repodata/">repodata/</a> 11-Feb-2026 22:50 -

# <a href="mirrorlist">mirrorlist</a> 11-Feb-2026 22:50 2601

# </pre><hr></body>

# </html>

################################

# [k8s-node] Point the internal servers, which have no internet access, at the admin server's Linux package repository

################################

# Back up the existing repo configuration

tree /etc/yum.repos.d/

# /etc/yum.repos.d/

# ├── rocky-addons.repo

# ├── rocky-devel.repo

# ├── rocky-extras.repo

# └── rocky.repo

mkdir /etc/yum.repos.d/backup

mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/

# Create the local repo file: change the server IP to that of your repo server

cat <<EOF > /etc/yum.repos.d/internal-rocky.repo

[internal-baseos]

name=Internal Rocky 10 BaseOS

baseurl=http://192.168.10.10/rocky/10/baseos

enabled=1

gpgcheck=0

[internal-appstream]

name=Internal Rocky 10 AppStream

baseurl=http://192.168.10.10/rocky/10/appstream

enabled=1

gpgcheck=0

[internal-extras]

name=Internal Rocky 10 Extras

baseurl=http://192.168.10.10/rocky/10/extras

enabled=1

gpgcheck=0

EOF

# Verify that the internal repo works: clear the client cache and reload the repo list.

dnf clean all

# 18 files removed

dnf repolist

# repo id repo name

# internal-appstream Internal Rocky 10 AppStream

# internal-baseos Internal Rocky 10 BaseOS

# internal-extras Internal Rocky 10 Extras

dnf makecache

# Internal Rocky 10 BaseOS 151 MB/s | 15 MB 00:00

# Internal Rocky 10 AppStream 40 MB/s | 2.1 MB 00:00

# Internal Rocky 10 Extras 254 kB/s | 6.2 kB 00:00

# Metadata cache created.

# Verify that package installation works

dnf install -y nfs-utils

# Last metadata expiration check: 0:00:33 ago on Wed 11 Feb 2026 11:38:16 PM KST.

# Package nfs-utils-1:2.8.2-3.el10.aarch64 is already installed.

# Dependencies resolved.

# ==================================================================================

# Package Architecture Version Repository Size

# ==================================================================================

# Upgrading:

# libnfsidmap aarch64 1:2.8.3-0.el10 internal-baseos 61 k

# nfs-utils aarch64 1:2.8.3-0.el10 internal-baseos 476 k

# Transaction Summary

# ==================================================================================

# Upgrade 2 Packages

# Total download size: 537 k

# Downloading Packages:

# (1/2): libnfsidmap-2.8.3-0.el10.aarch64.rpm 12 MB/s | 61 kB 00:00

# (2/2): nfs-utils-2.8.3-0.el10.aarch64.rpm 67 MB/s | 476 kB 00:00

# ----------------------------------------------------------------------------------

# Total 52 MB/s | 537 kB 00:00

# Running transaction check

# Transaction check succeeded.

# Running transaction test

# Transaction test succeeded.

# Running transaction

# Preparing : 1/1

# Upgrading : libnfsidmap-1:2.8.3-0.el10.aarch64 1/4

# Running scriptlet: nfs-utils-1:2.8.3-0.el10.aarch64 2/4

# Upgrading : nfs-utils-1:2.8.3-0.el10.aarch64 2/4

# Running scriptlet: nfs-utils-1:2.8.3-0.el10.aarch64 2/4

# Running scriptlet: nfs-utils-1:2.8.2-3.el10.aarch64 3/4

# Cleanup : nfs-utils-1:2.8.2-3.el10.aarch64 3/4

# Running scriptlet: nfs-utils-1:2.8.2-3.el10.aarch64 3/4

# Cleanup : libnfsidmap-1:2.8.2-3.el10.aarch64 4/4

# Running scriptlet: libnfsidmap-1:2.8.2-3.el10.aarch64 4/4

# Upgraded:

# libnfsidmap-1:2.8.3-0.el10.aarch64 nfs-utils-1:2.8.3-0.el10.aarch64

# Complete!

## Check which repo the installed package came from

dnf info nfs-utils | grep -i repo

# Repository : @System

# From repo : internal-baseos

################################

# [admin] Clean up for the next lab

################################

systemctl disable --now nginx && dnf remove -y nginx

################################################################

# Private Container (Image) Registry - optional here, since kubespray-offline sets one up for you

################################################################

################################

# [admin] Start a Docker Registry (registry) container image store with podman

################################

# Install podman : already installed by default

dnf install -y podman

dnf info podman | grep repo

# From repo : appstream

# Check podman

which podman

# /usr/bin/podman

podman --version

podman info

cat /etc/containers/registries.conf

# unqualified-search-registries = ["registry.access.redhat.com", "registry.redhat.io", "docker.io"]

cat /etc/containers/registries.conf.d/000-shortnames.conf

# Pull the registry image

podman pull docker.io/library/registry:latest

# Trying to pull docker.io/library/registry:latest...

# Getting image source signatures

# Copying blob 92c7580d074a done |

# Copying blob a447a5de8f4e done |

# Copying blob 50b5971fe294 done |

# Copying blob 1cc3d49277b7 done |

# Copying blob 0a52a06d47e0 done |

# Copying config 2f5ec5015b done |

# Writing manifest to image destination

# 2f5ec5015badd603680de78accbba6eb3e9146f4d642a7ccef64205e55ac518f

podman images

# REPOSITORY TAG IMAGE ID CREATED SIZE

# docker.io/library/registry latest 2f5ec5015bad 2 weeks ago 57.3 MB

# Prepare the registry data directory

mkdir -p /data/registry

chmod 755 /data/registry

# Run the Docker Registry container (default settings, no authentication)

podman run -d --name local-registry -p 5000:5000 -v /data/registry:/var/lib/registry --restart=always docker.io/library/registry:latest

# cb9a1b4c69541125c5725447a75a078233ae627e5dd9528380aed700c5cd57a9

# Verify

podman ps

# CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# cb9a1b4c6954 docker.io/library/registry:latest /etc/distribution... 8 seconds ago Up 9 seconds 0.0.0.0:5000->5000/tcp local-registry

ss -tnlp | grep 5000

# LISTEN 0 4096 0.0.0.0:5000 0.0.0.0:* users:(("conmon",pid=6418,fd=5))

pstree -a

# ...

# ├─conmon --api-version 1 -ccb9a1b4c69541125c5725447a75a078233ae627e5dd9528

# │ └─registry serve /etc/distribution/config.yml

# │ └─6*[{registry}]

# ...

# Verify the registry is working

curl -s http://localhost:5000/v2/_catalog | jq

# {"repositories":[]}

################################

# [admin] Push an image to the Docker Registry

################################

# Pull an image

podman pull alpine

# Resolved "alpine" as an alias (/etc/containers/registries.conf.d/000-shortnames.conf)

# Trying to pull docker.io/library/alpine:latest...

# Writing manifest to image destination

# 1ab49c19c53ebca95c787b482aeda86d1d681f58cdf19278c476bcaf37d96de1

cat /etc/containers/registries.conf.d/000-shortnames.conf | grep alpine

# "alpine" = "docker.io/library/alpine"

podman images

# REPOSITORY TAG IMAGE ID CREATED SIZE

# docker.io/library/registry latest 2f5ec5015bad 2 weeks ago 57.3 MB

# docker.io/library/alpine latest 1ab49c19c53e 2 weeks ago 8.99 MB

# tag

podman tag alpine:latest 192.168.10.10:5000/alpine:1.0

podman images

# REPOSITORY TAG IMAGE ID CREATED SIZE

# docker.io/library/registry latest 2f5ec5015bad 2 weeks ago 57.3 MB

# 192.168.10.10:5000/alpine 1.0 1ab49c19c53e 2 weeks ago 8.99 MB

# docker.io/library/alpine latest 1ab49c19c53e 2 weeks ago 8.99 MB

# Push to the private registry : fails!

podman push 192.168.10.10:5000/alpine:1.0

# Getting image source signatures

# Error: trying to reuse blob sha256:45f3ea5848e8a25ca27718b640a21ffd8c8745d342a24e1d4ddfc8c449b0a724 at destination: pinging container registry 192.168.10.10:5000: Get "https://192.168.10.10:5000/v2/": http: server gave HTTP response to HTTPS client

# By default, container engines require HTTPS. To test over HTTP on an internal network, register the registry address as an insecure registry.

# (Note) registries.conf is a containers-common setting, so it applies equally to podman, skopeo, buildah, and so on.

cp /etc/containers/registries.conf /etc/containers/registries.bak

cat <<EOF >> /etc/containers/registries.conf

[[registry]]

location = "192.168.10.10:5000"

insecure = true

EOF

grep "^[^#]" /etc/containers/registries.conf

# unqualified-search-registries = ["registry.access.redhat.com", "registry.redhat.io", "docker.io"]

# short-name-mode = "enforcing"

# [[registry]]

# location = "192.168.10.10:5000"

# insecure = true
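Because the same `[[registry]]` block is appended on the admin server and again on every k8s-node, re-running the `cat <<EOF >>` step would duplicate the entry. The sketch below shows one way to make the step idempotent. `add_insecure_registry` is a hypothetical helper name, not part of podman or containers-common.

```shell
# Hypothetical helper: append an insecure-registry entry only if it is not there yet.
add_insecure_registry() {
  conf=$1       # path to registries.conf
  location=$2   # registry host:port, e.g. 192.168.10.10:5000

  # Skip when an entry for this exact location already exists
  if grep -Fq "location = \"$location\"" "$conf" 2>/dev/null; then
    echo "already registered: $location"
    return 0
  fi

  # Append the same three lines used in the walkthrough
  cat <<EOF >> "$conf"

[[registry]]
location = "$location"
insecure = true
EOF
  echo "registered: $location"
}

# Usage (as root, on admin and on each k8s-node):
# add_insecure_registry /etc/containers/registries.conf 192.168.10.10:5000
```

Running it twice leaves a single entry in the file, so it is safe to call from a provisioning script.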

# Push to the private registry : succeeds!

podman push 192.168.10.10:5000/alpine:1.0

# Getting image source signatures

# Copying blob 45f3ea5848e8 done |

# Copying config 1ab49c19c5 done |

# Writing manifest to image destination

# List the uploaded images and tags

curl -s 192.168.10.10:5000/v2/_catalog | jq

# {

# "repositories": [

# "alpine"

# ]

# }

curl -s 192.168.10.10:5000/v2/alpine/tags/list | jq

# {

# "name": "alpine",

# "tags": [

# "1.0"

# ]

# }
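The tag step above renames one image by hand. When many images have to be mirrored, the target name can be derived from the source reference instead. The following is a minimal sketch under that assumption. `to_private_ref` is a hypothetical helper, and unlike the manual example it keeps the original repository path and tag (so `docker.io/library/alpine:latest` becomes `.../library/alpine:latest`).

```shell
# Hypothetical helper: rewrite an image reference so it points at a private registry.
to_private_ref() {
  image=$1      # e.g. docker.io/library/alpine:latest
  registry=$2   # e.g. 192.168.10.10:5000
  first=${image%%/*}

  case "$image" in
    */*)
      # Drop the first path component only when it looks like a registry host
      # (contains a dot or a port, or is "localhost")
      case "$first" in
        *.*|*:*|localhost) image=${image#*/} ;;
      esac
      ;;
  esac
  echo "$registry/$image"
}

# Usage:
# src=docker.io/library/alpine:latest
# podman tag "$src" "$(to_private_ref "$src" 192.168.10.10:5000)"
```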

################################

# [k8s-node] Pull the container image

################################

# Configure registries.conf

cp /etc/containers/registries.conf /etc/containers/registries.bak

cat <<EOF >> /etc/containers/registries.conf

[[registry]]

location = "192.168.10.10:5000"

insecure = true

EOF

grep "^[^#]" /etc/containers/registries.conf

# unqualified-search-registries = ["registry.access.redhat.com", "registry.redhat.io", "docker.io"]

# short-name-mode = "enforcing"

# [[registry]]

# location = "192.168.10.10:5000"

# insecure = true

# Pull the image

podman pull 192.168.10.10:5000/alpine:1.0

# 1ab49c19c53ebca95c787b482aeda86d1d681f58cdf19278c476bcaf37d96de1

podman images

# REPOSITORY TAG IMAGE ID CREATED SIZE

# 192.168.10.10:5000/alpine 1.0 1ab49c19c53e 2 weeks ago 8.98 MB

################################

# [admin] Clean up for the next lab

################################

podman rm -f local-registry

################################

# [admin, k8s-node] Restore the original file for the next lab

################################

mv /etc/containers/registries.bak /etc/containers/registries.conf

################################################################

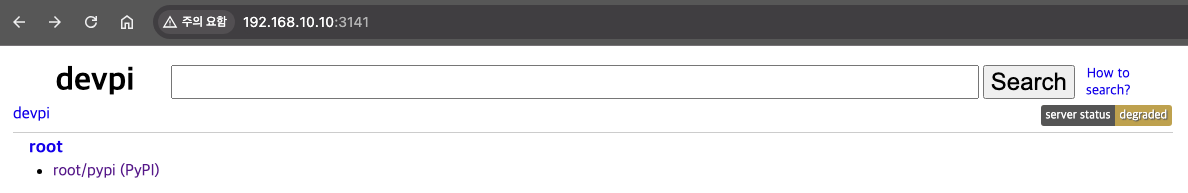

# Private PyPI(Python Package Index) Mirror - kubespray 오프라인 모드에서 지원해서 사용 안해도됨

################################################################

################################

# [admin] Set up a Python package index : devpi-server

################################

# Install devpi-server

## devpi-server : PyPI mirror / private package index server

## devpi-client : uploads and manages packages on a devpi server

## devpi-web : web UI (optional)

pip install devpi-server devpi-client devpi-web

# ...

# readme-renderer-44.0 repoze.lru-0.7 ruamel.yaml-0.19.1 soupsieve-2.8.3 strictyaml-1.7.3 translationstring-1.4 typing-extensions-4.15.0 venusian-3.1.1 waitress-3.0.2 webob-1.8.9 zope.deprecation-6.0 zope.interface-8.2

# WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

pip list | grep devpi

# devpi-client 7.2.0

# devpi-common 4.1.1

# devpi-server 6.19.1

# devpi-web 5.0.1

# Create and initialize the server data directory : Initialize new devpi-server instance.

## If --serverdir is not given, the default is ~/.devpi/server.

devpi-init --serverdir /data/devpi_data

# 2026-02-11 23:46:03,323 INFO NOCTX Loading node info from /data/devpi_data/.nodeinfo

# 2026-02-11 23:46:03,323 INFO NOCTX generated uuid: 72aa3df59e644d1fb446f1f7ea57fff8

# 2026-02-11 23:46:03,323 INFO NOCTX wrote nodeinfo to: /data/devpi_data/.nodeinfo

# 2026-02-11 23:46:03,325 INFO NOCTX DB: Creating schema

# 2026-02-11 23:46:03,360 INFO [Wtx-1] setting password for user 'root'

# 2026-02-11 23:46:03,360 INFO [Wtx-1] created user 'root'

# 2026-02-11 23:46:03,360 INFO [Wtx-1] created root user

# 2026-02-11 23:46:03,360 INFO [Wtx-1] created root/pypi index

# 2026-02-11 23:46:03,362 INFO [Wtx-1] fswriter0: committed at 0

ls -al /data/devpi_data/ # index metadata and packages are stored here

# total 28

# drwxr-xr-x. 2 root root 60 Feb 11 23:46 .

# drwxr-xr-x. 5 root root 53 Feb 11 23:46 ..

# -rw-------. 1 root root 72 Feb 11 23:46 .nodeinfo

# -rw-r--r--. 1 root root 1 Feb 11 23:46 .serverversion

# -rw-r--r--. 1 root root 20480 Feb 11 23:46 .sqlite

# Start the devpi server : --host 0.0.0.0 allows connections from other hosts

## For a permanent background service, register it as a systemd unit instead

nohup devpi-server --serverdir /data/devpi_data --host 0.0.0.0 --port 3141 > /var/log/devpi.log 2>&1 &

# Verify

ss -tnlp | grep devpi-server

# LISTEN 0 1024 0.0.0.0:3141 0.0.0.0:* users:(("devpi-server",pid=6710,fd=12))

tail -f /var/log/devpi.log

# 2026-02-11 23:46:25,948 INFO NOCTX serving at url: http://0.0.0.0:3141 (might be http://[0.0.0.0]:3141 for IPv6)

# 2026-02-11 23:46:25,948 INFO NOCTX using 50 threads

# 2026-02-11 23:46:25,948 INFO NOCTX bug tracker: https://github.com/devpi/devpi/issues

# ...

# Open the web UI

open http://192.168.10.10:3141
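The `nohup` invocation above does not survive a reboot, and the walkthrough recommends systemd for permanent operation. A minimal sketch of such a unit follows, assuming the same data directory and port. `write_devpi_unit` and the unit name `devpi.service` are hypothetical, and the path of the `devpi-server` binary depends on how pip installed it, so it is passed in as an argument.

```shell
# Hypothetical helper: write a minimal systemd unit for devpi-server.
write_devpi_unit() {
  unit_path=$1   # e.g. /etc/systemd/system/devpi.service
  devpi_bin=$2   # assumption: the output of `command -v devpi-server`

  cat <<EOF > "$unit_path"
[Unit]
Description=devpi-server (private PyPI mirror)
After=network-online.target

[Service]
ExecStart=$devpi_bin --serverdir /data/devpi_data --host 0.0.0.0 --port 3141
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Usage (as root on the admin server):
# write_devpi_unit /etc/systemd/system/devpi.service "$(command -v devpi-server)"
# systemctl daemon-reload && systemctl enable --now devpi.service
```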

################################

# [admin] Upload the required packages to devpi-server

################################

# Connect to the server

devpi use http://192.168.10.10:3141

# Warning: insecure http host, trusted-host will be set for pip

# using server: http://192.168.10.10:3141/ (not logged in)

# no current index: type 'devpi use -l' to discover indices

# /root/.config/pip/pip.conf: no config file exists

# /root/.config/uv/uv.toml: no config file exists

# /root/.pydistutils.cfg: no config file exists

# /root/.buildout/default.cfg: no config file exists

# always-set-cfg: no

# Log in (the root user has an empty password by default)

## (skip) To set a root password: devpi user -m root password=<new-password>

devpi login root --password ""

# logged in 'root', credentials valid for 10.00 hours

# Download in advance the packages that will be used on the closed network

pip download jmespath netaddr -d /tmp/pypi-packages

tree /tmp/pypi-packages/

# /tmp/pypi-packages/

# ├── jmespath-1.1.0-py3-none-any.whl

# └── netaddr-1.3.0-py3-none-any.whl

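# Create an index for a team or project (for example: prod)

## Inheritance: with bases=root/pypi, packages uploaded to root/prod are looked up first, and anything missing falls through to root/pypi, which devpi caches from PyPI automatically while an external connection is available.

## Note) Creating a PyPI mirror index (for caching) : devpi index -c pypi-mirror type=mirror mirror_url=https://pypi.org/simple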

devpi index -c prod bases=root/pypi

# http://192.168.10.10:3141/root/prod?no_projects=:

# type=stage

# bases=root/pypi

# volatile=True

# acl_upload=root

# acl_toxresult_upload=:ANONYMOUS:

# mirror_whitelist=

# mirror_whitelist_inheritance=intersection

# List the devpi indexes

devpi index -l

# root/prod

# root/pypi

# Check whether a given index contains any packages

devpi use root/pypi

# Warning: insecure http host, trusted-host will be set for pip

# current devpi index: http://192.168.10.10:3141/root/pypi (logged in as root)

# supported features: push-no-docs, push-only-docs, push-register-project, server-keyvalue-parsing

# /root/.config/pip/pip.conf: no config file exists

# /root/.config/uv/uv.toml: no config file exists

# /root/.pydistutils.cfg: no config file exists

# /root/.buildout/default.cfg: no config file exists

# always-set-cfg: no

devpi list

devpi use root/prod

# Warning: insecure http host, trusted-host will be set for pip

# current devpi index: http://192.168.10.10:3141/root/prod (logged in as root)

# supported features: push-no-docs, push-only-docs, push-register-project, server-keyvalue-parsing

# /root/.config/pip/pip.conf: no config file exists

# /root/.config/uv/uv.toml: no config file exists

# /root/.pydistutils.cfg: no config file exists

# /root/.buildout/default.cfg: no config file exists

devpi list

# Upload the packages to the root/prod index on the devpi server

devpi upload /tmp/pypi-packages/*

# file_upload of jmespath-1.1.0-py3-none-any.whl to http://192.168.10.10:3141/root/prod/

# file_upload of netaddr-1.3.0-py3-none-any.whl to http://192.168.10.10:3141/root/prod/

# Check where the uploaded packages are actually stored

tree /data/devpi_data/+files/

# /data/devpi_data/+files/

# └── root

# └── prod

# └── +f

# ├── a56

# │ └── 63118de4908c9

# │ └── jmespath-1.1.0-py3-none-any.whl

# └── c2c

# └── 6a8ebe5554ce3

# └── netaddr-1.3.0-py3-none-any.whl

# Check the uploaded packages

devpi list

# jmespath

# netaddr

################################

# [k8s-node] Configure and use pip

################################

# (Option 1) One-off use

pip list | grep -i jmespath

pip install jmespath --index-url http://192.168.10.10:3141/root/prod/+simple --trusted-host 192.168.10.10

# Looking in indexes: http://192.168.10.10:3141/root/prod/+simple

# Collecting jmespath

# Downloading http://192.168.10.10:3141/root/prod/%2Bf/a56/63118de4908c9/jmespath-1.1.0-py3-none-any.whl (20 kB)

# Installing collected packages: jmespath

# Successfully installed jmespath-1.1.0

# WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

pip list | grep -i jmespath

# jmespath 1.1.0

# (Option 2) Global configuration : /root/prod (web UI for humans) , /root/prod/+simple (API endpoint for pip)

## The pip configuration must use the URL ending in +simple, which is the index endpoint pip expects

## pip talks to package indexes through the PEP 503 "Simple API".

## +simple : the pip-facing index endpoint, which serves the package list as HTML that pip can parse

cat <<EOF > /etc/pip.conf

[global]

index-url = http://192.168.10.10:3141/root/prod/+simple

trusted-host = 192.168.10.10

timeout = 60

EOF

pip list | grep -i netaddr

# (no output : not installed yet)

pip install netaddr

# Looking in indexes: http://192.168.10.10:3141/root/prod/+simple

# Collecting netaddr

# Downloading http://192.168.10.10:3141/root/prod/%2Bf/c2c/6a8ebe5554ce3/netaddr-1.3.0-py3-none-any.whl (2.3 MB)

# ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.3/2.3 MB 152.5 MB/s eta 0:00:00

# Installing collected packages: netaddr

# Successfully installed netaddr-1.3.0

# WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

pip list | grep -i netaddr

# netaddr 1.3.0
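Besides the one-off `--index-url` flag and the global `/etc/pip.conf`, pip also reads its options from `PIP_*` environment variables, which affects only the current shell session. A minimal sketch, assuming the same devpi host, port, and index as above. `use_devpi_index` is a hypothetical helper name.

```shell
# Hypothetical helper: point pip at a devpi index for the current shell only.
use_devpi_index() {
  host=$1    # e.g. 192.168.10.10
  port=$2    # e.g. 3141
  index=$3   # e.g. root/prod

  # PIP_INDEX_URL / PIP_TRUSTED_HOST mirror the index-url / trusted-host settings
  export PIP_INDEX_URL="http://$host:$port/$index/+simple"
  export PIP_TRUSTED_HOST="$host"
}

# Usage:
# use_devpi_index 192.168.10.10 3141 root/prod
# pip install netaddr
```

Nothing is written to disk, so there is no file to remove afterwards. Unsetting the two variables restores the default behaviour.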

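# Install a package that was never uploaded to devpi-server : succeeds! root/prod inherits from root/pypi, and the download URLs below show the files being served from root/pypi, which works only while the admin server can still reach PyPI.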

pip install cryptography

# Looking in indexes: http://192.168.10.10:3141/root/prod/+simple

# Collecting cryptography

# Downloading http://192.168.10.10:3141/root/pypi/%2Bf/e92/51e3be159d102/cryptography-46.0.5-cp311-abi3-manylinux_2_34_aarch64.whl (4.3 MB)

# ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 4.3/4.3 MB 8.0 MB/s eta 0:00:00

# Collecting cffi>=2.0.0 (from cryptography)

# Downloading http://192.168.10.10:3141/root/pypi/%2Bf/b21/e08af67b8a103/cffi-2.0.0-cp312-cp312-manylinux2014_aarch64.manylinux_2_17_aarch64.whl (220 kB)

# ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 220.1/220.1 kB 52.1 MB/s eta 0:00:00

# Collecting pycparser (from cffi>=2.0.0->cryptography)

# Downloading http://192.168.10.10:3141/root/pypi/%2Bf/b72/7414169a36b7d/pycparser-3.0-py3-none-any.whl (48 kB)

# ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 48.2/48.2 kB 197.9 MB/s eta 0:00:00

# Installing collected packages: pycparser, cffi, cryptography

# Successfully installed cffi-2.0.0 cryptography-46.0.5 pycparser-3.0

# WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

################################

# [admin] Clean up for the next lab

################################

pkill -f "devpi-server --serverdir /data/devpi_data"

################################

# [k8s-node] Remove the pip configuration for the next lab

################################

rm -rf /etc/pip.conf

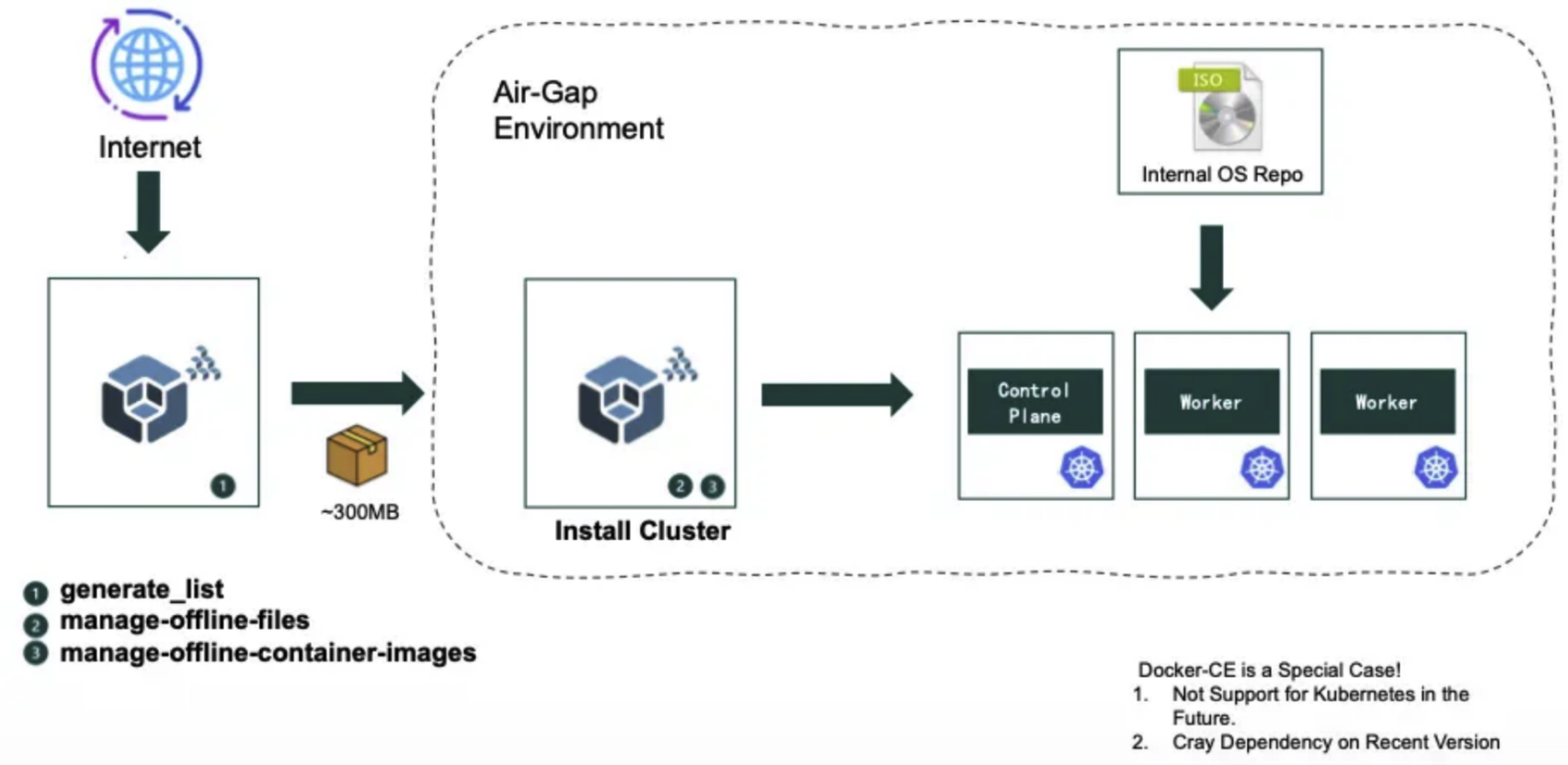

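Building an offline Kubernetes cluster in an air-gapped environment means preparing every required file, package, and container image in advance, because nothing can be fetched from the internet at install time. With kubespray-offline, the offline installation environment can be set up by following the steps below.

Installing kubespray-offline

Each of the services described above is supported by kubespray-offline.

kubespray-offline is a tool that automatically collects, organizes, and distributes the files and images needed to build an offline Kubernetes cluster. It downloads every required component (container images, packages, binaries, and so on) in advance and stages them in a registry and local repositories, so they remain available where there is no internet connection. This makes it possible to install and maintain a Kubespray-based Kubernetes cluster in a fully air-gapped environment.

Whenever a new Kubespray version is released, the project's maintainer updates kubespray-offline to match, which makes it convenient to use.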

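Kubespray offline deployment architecture

This diagram shows the overall workflow for deploying a Kubernetes cluster in an air-gapped environment with no internet access.

Components:

- Internet environment (left): prepares the required files and container images with Kubespray (~300MB)

- Air-gap environment (dotted area on the right): a closed network isolated from the internet

- Install Cluster: cluster installation through Kubespray

- Internal OS Repo: internal OS package repository (ISO)

- Control Plane & Worker nodes: the Kubernetes cluster components

Offline deployment process:

- Generate the list of files to download and the list of container images, each as its own file

- Download the container images for offline deployment and register (upload) them in the image registry

- Download the files on the list and run an Nginx container to serve them for download

Repository: https://github.com/kubespray-offline/kubespray-offline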

Script features:

- The download step builds on Kubespray's own helper scripts.

- Download offline files

  - OS package repository: downloads the Yum/Deb repository files

  - Container images: downloads every container image Kubespray uses

  - Python packages: downloads the PyPI mirror files for Kubespray

- Support scripts for the deployment server (Admin)

  - Container runtime installation: installs containerd from local files

  - Web server: an Nginx container serves the Yum/Deb repositories and the PyPI mirror

  - Private registry: runs a Docker private registry

  - Image distribution: loads every container image and pushes it to the private registry

- System requirements

  - RHEL family: RHEL / AlmaLinux / Rocky Linux 9.x (10.x)

  - Debian family: Ubuntu 22.04 / 24.04

kubespray-offline installation lab

Prepare the base environment.

[0] After git clone, run download-all.sh to download the files needed for installation (about 3.3GB, roughly 17 minutes)

# Clone the repository used for the Kubespray offline deployment

git clone https://github.com/kubespray-offline/kubespray-offline.git

cd kubespray-offline/

################################

# download-all.sh : the top-level script; review the scripts it runs in turn

# Master script that downloads, in order, every file needed for the offline deployment

################################

#!/bin/bash

# Helper that runs a script and exits on failure

run() {

echo "=> Running: $*"

$* || {

echo "Failed in : $*"

exit 1

}

}

# For the lab, you can run these one line at a time

source ./config.sh # load settings

#run ./install-docker.sh # install Docker (optional)

#run ./install-nerdctl.sh # install nerdctl (optional)

run ./precheck.sh # verify prerequisites

run ./prepare-pkgs.sh || exit 1 # install required packages

run ./prepare-py.sh # prepare the Python environment

run ./get-kubespray.sh # download the Kubespray source

if $ansible_in_container; then

run ./build-ansible-container.sh # build the Ansible container

else

run ./pypi-mirror.sh # create the PyPI mirror

fi

run ./download-kubespray-files.sh # download the files Kubespray needs

run ./download-additional-containers.sh # download additional container images

run ./create-repo.sh # create the RPM/DEB repository

run ./copy-target-scripts.sh # copy the target scripts

echo "Done."
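The `run` helper in download-all.sh expands its arguments with an unquoted `$*`, which is fine for script paths without spaces. The variant below is a sketch rather than the upstream code: it uses `"$@"` so that each argument is passed through intact, with the same fail-fast behaviour.

```shell
# Variant of run(): "$@" keeps every argument as a single word, even with spaces.
run() {
  echo "=> Running: $*"
  "$@" || {
    echo "Failed in : $*"
    exit 1   # abort the whole pipeline on the first failing step
  }
}

# Usage:
# run ./precheck.sh
# run ./prepare-pkgs.sh --upgrade
```

Because `exit 1` terminates the calling shell, a trailing `|| exit 1` after `run ...` is redundant.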

################################

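# config.sh → target-scripts/config.sh

# Sets the version variables for what gets installed : to change a version, edit it at this step! Files are downloaded according to these variables

# Core settings file that manages the versions of all components in one place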

################################

#!/bin/bash

source ./target-scripts/config.sh # load the target-script settings

# container runtime for preparation node

docker=${docker:-podman} # default: podman

#docker=${docker:-docker} # alternative: Docker

#docker=${docker:-/usr/local/bin/nerdctl} # alternative: nerdctl

# Run ansible in container?

ansible_in_container=${ansible_in_container:-false}
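Both settings use the `${var:-default}` idiom, so a value already present in the environment wins and the default applies only when the variable is unset or empty. The sketch below isolates that behaviour. `effective_container_cli` is a hypothetical function used only for illustration.

```shell
# Same ${var:-default} idiom as config.sh, wrapped in a function for illustration.
effective_container_cli() {
  # Use $docker when it is set and non-empty, otherwise fall back to podman
  echo "${docker:-podman}"
}

# Usage: override the defaults for a single run without editing config.sh
# docker=docker ansible_in_container=true ./download-all.sh
```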

cat ./target-scripts/config.sh

#!/bin/bash

# Kubespray version to download. Use "master" for latest master branch.

KUBESPRAY_VERSION=${KUBESPRAY_VERSION:-2.30.0} # Kubespray version (stable release)

#KUBESPRAY_VERSION=${KUBESPRAY_VERSION:-master} # to use the latest development version

# Versions of containerd related binaries used in `install-containerd.sh`

# These version must be same as kubespray.

# Refer `roles/kubespray_defaults/vars/main/checksums.yml` of kubespray.

RUNC_VERSION=1.3.4 # OCI runtime version

CONTAINERD_VERSION=2.2.1 # containerd version

NERDCTL_VERSION=2.2.1 # nerdctl (Docker-compatible CLI) version

CNI_VERSION=1.8.0 # CNI plugins version

# Some container versions, must be same as ../imagelists/images.txt

NGINX_VERSION=1.29.4 # Nginx version for the web server

REGISTRY_VERSION=3.0.0 # private registry version

# container registry port

REGISTRY_PORT=${REGISTRY_PORT:-35000} # default port: 35000

# Additional container registry hosts

ADDITIONAL_CONTAINER_REGISTRY_LIST=${ADDITIONAL_CONTAINER_REGISTRY_LIST:-"myregistry.io"}

# Architecture of binary files (바이너리 파일 아키텍처 감지)

# Detect OS type and get architecture accordingly

map_arch() {

case "$1" in

x86_64)

echo "amd64" # Intel/AMD 64비트

;;

aarch64)

echo "arm64" # ARM 64비트 (Apple Silicon 등)

;;

*)

echo "$1" # 기타 아키텍처

;;

esac

}

# Per-OS architecture detection

if [ -e /etc/redhat-release ]; then

# RHEL/AlmaLinux/Rocky Linux

ARCH=$(uname -m)

IMAGE_ARCH=$(map_arch "$ARCH")

elif command -v dpkg >/dev/null 2>&1; then

# Ubuntu/Debian

IMAGE_ARCH=$(dpkg --print-architecture)

else

# Fallback: use uname -m

ARCH=$(uname -m)

IMAGE_ARCH=$(map_arch "$ARCH")

fi
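The detection logic exists because `uname -m` reports kernel names (`x86_64`, `aarch64`) while container images and Kubernetes release binaries use Go-style names (`amd64`, `arm64`). The mapping can be tried on its own as follows. The function body repeats `map_arch` from the script above so the snippet is self-contained.

```shell
# Map `uname -m` output to the architecture names used by images and release binaries.
map_arch() {
  case "$1" in
    x86_64)  echo "amd64" ;;   # Intel/AMD 64-bit
    aarch64) echo "arm64" ;;   # ARM 64-bit
    *)       echo "$1" ;;      # anything else is passed through unchanged
  esac
}

# Print the image architecture for the current machine
map_arch "$(uname -m)"
```

On the lab VMs in this post, which report `aarch64`, this prints `arm64`, matching the `linux/arm64` paths in the generated files.list.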

################################

# precheck.sh - prerequisite check script

# Verifies that the system is suitable for a Kubespray offline installation

################################

#!/bin/bash

source /etc/os-release # OS 정보 로드

source ./config.sh # 설정 파일 로드

# Docker/Podman 설치 여부 확인

if [ "$docker" != "podman" ]; then

if ! command -v $docker >/dev/null 2>&1; then

echo "No $docker installed" # 지정된 컨테이너 런타임이 설치되지 않음

exit 1

fi

fi

# RHEL7/CentOS7에서 SELinux 확인 (더 이상 지원하지 않지만 호환성 체크)

if [ -e /etc/redhat-release ] && [[ "$VERSION_ID" =~ ^7.* ]]; then

if [ "$(getenforce)" == "Enforcing" ]; then

echo "You must disable SELinux for RHEL7/CentOS7" # SELinux 비활성화 필요

exit 1

fi

fi

################################

# prepare-pkgs.sh : required package installation script

# Installs, per OS, the packages needed for the Kubespray offline deployment

################################

#!/bin/bash

echo "==> prepare-pkgs.sh"

. /etc/os-release # OS 정보 로드

. ./scripts/common.sh # 공통 스크립트 로드

# Select python version (Python 버전 선택)

. ./target-scripts/pyver.sh

# Install required packages (필수 패키지 설치)

if [ -e /etc/redhat-release ]; then

echo "==> Install required packages"

$sudo dnf check-update # 패키지 업데이트 확인

# 핵심 빌드 도구 및 컨테이너 런타임 설치

$sudo dnf install -y rsync gcc libffi-devel createrepo git podman || exit 1

case "$VERSION_ID" in

7*)

# RHEL/CentOS 7 (더 이상 지원하지 않음)

echo "FATAL: RHEL/CentOS 7 is not supported anymore."

exit 1

;;

8*)

# RHEL/CentOS 8 - 모듈 메타데이터 도구 설치

if ! command -v repo2module >/dev/null; then

echo "==> Install modulemd-tools"

$sudo dnf install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

$sudo dnf copr enable -y frostyx/modulemd-tools-epel

$sudo dnf install -y modulemd-tools

fi

;;

9*)

# RHEL 9 - 모듈 메타데이터 도구 설치

if ! command -v repo2module >/dev/null; then

$sudo dnf install -y modulemd-tools

fi

;;

10*)

# RHEL 10 - createrepo_c 도구 설치

if ! command -v repo2module >/dev/null; then

$sudo dnf install -y createrepo_c

fi

;;

*)

echo "Unknown version_id: $VERSION_ID"

exit 1

;;

esac

# Python 및 관련 도구 설치

$sudo dnf install -y python${PY} python${PY}-pip python${PY}-devel || exit 1

else

# Ubuntu/Debian 계열 패키지 설치

$sudo apt update

if [ "$1" == "--upgrade" ]; then

$sudo apt upgrade # 시스템 업그레이드 (선택사항)

fi

# 기본 개발 도구 설치

$sudo apt -y install lsb-release curl gpg gcc libffi-dev rsync git software-properties-common || exit 1

case "$VERSION_ID" in

20.04)

# Ubuntu 20.04 - Podman 저장소 추가

echo "deb http://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/xUbuntu_${VERSION_ID}/ /" | $sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable.list

curl -SL https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable/xUbuntu_${VERSION_ID}/Release.key | $sudo apt-key add -

# 최신 Python3 저장소 추가

sudo add-apt-repository ppa:deadsnakes/ppa -y || exit 1

$sudo apt update

;;

esac

# Python 및 컨테이너 런타임 설치

$sudo apt install -y python${PY} python${PY}-venv python${PY}-dev python3-pip python3-selinux podman || exit 1

fi

################################

# prepare-py.sh → target-scripts/venv.sh : sets up the Python virtual environment and installs packages

# Prepares the Python environment used to run Ansible

################################

#!/bin/bash

# Create python3 env (Python3 환경 생성)

echo "==> prepare-py.sh"

. /etc/os-release # OS 정보 로드

. ./target-scripts/venv.sh # 가상환경 설정 스크립트 실행

source ./scripts/set-locale.sh # 로케일 설정

echo "==> Update pip, etc"

pip install -U pip setuptools # pip 및 setuptools 업그레이드

#if [ "$(getenforce)" == "Enforcing" ]; then

# pip install -U selinux # SELinux 활성화 시 selinux 패키지 설치

#fi

echo "==> Install python packages"

pip install -r requirements.txt # Kubespray 필수 Python 패키지 설치

################################

# target-scripts/venv.sh - creates and activates the Python virtual environment

################################

#!/bin/bash

source /etc/os-release # OS 정보 로드

# Select python version (Python 버전 선택)

source "$(dirname "${BASH_SOURCE[0]}")/pyver.sh"

python3=python${PY} # Python 실행 파일 경로

VENV_DIR=${VENV_DIR:-~/.venv/${PY}} # 가상환경 디렉터리 (기본값: ~/.venv/3.x)

echo "python3 = $python3"

echo "VENV_DIR = ${VENV_DIR}"

if [ ! -e ${VENV_DIR} ]; then

$python3 -m venv ${VENV_DIR} # 가상환경 생성

fi

source ${VENV_DIR}/bin/activate # 가상환경 활성화

################################

# get-kubespray.sh - downloads and prepares the Kubespray source

# Downloads the specified Kubespray version and applies any required patches

################################

#!/bin/bash

CURRENT_DIR=$(cd $(dirname $0); pwd) # 현재 디렉터리 경로

source config.sh # 설정 파일 로드

KUBESPRAY_TARBALL=kubespray-${KUBESPRAY_VERSION}.tar.gz # 압축 파일명

KUBESPRAY_DIR=./cache/kubespray-${KUBESPRAY_VERSION} # 캐시 디렉터리

umask 022 # 파일 권한 설정

mkdir -p ./cache # 캐시 디렉터리 생성

mkdir -p outputs/files/ # 출력 파일 디렉터리 생성

# 기존 Kubespray 캐시 디렉터리 제거 함수

remove_kubespray_cache_dir() {

if [ -e ${KUBESPRAY_DIR} ]; then

/bin/rm -rf ${KUBESPRAY_DIR}

fi

}

# If KUBESPRAY_VERSION looks like a git commit hash, check out that commit

# Git 커밋 해시로 지정된 경우 해당 커밋 체크아웃

if [[ $KUBESPRAY_VERSION =~ ^[0-9a-f]{7,40}$ ]]; then

remove_kubespray_cache_dir

echo "===> Checkout kubespray commit: $KUBESPRAY_VERSION"

git clone https://github.com/kubernetes-sigs/kubespray.git ${KUBESPRAY_DIR}

cd ${KUBESPRAY_DIR}

git checkout $KUBESPRAY_VERSION || {

echo "Error: commit $KUBESPRAY_VERSION not found"

exit 1

}

cd - >/dev/null

tar czf outputs/files/${KUBESPRAY_TARBALL} -C ./cache kubespray-${KUBESPRAY_VERSION}

echo "Done (commit checkout)."

exit 0

fi

# When a branch name is given (master, release-x.x, and so on)

if [ $KUBESPRAY_VERSION == "master" ] || [[ $KUBESPRAY_VERSION =~ ^release- ]]; then

remove_kubespray_cache_dir

echo "===> Checkout kubespray branch : $KUBESPRAY_VERSION"

if [ ! -e ${KUBESPRAY_DIR} ]; then

git clone -b $KUBESPRAY_VERSION https://github.com/kubernetes-sigs/kubespray.git ${KUBESPRAY_DIR}

tar czf outputs/files/${KUBESPRAY_TARBALL} -C ./cache kubespray-${KUBESPRAY_VERSION}

fi

exit 0

fi

# When a release tag is given (the default)

if [ ! -e outputs/files/${KUBESPRAY_TARBALL} ]; then

echo "===> Download ${KUBESPRAY_TARBALL}"

# GitHub 릴리스에서 압축 파일 다운로드

curl -SL https://github.com/kubernetes-sigs/kubespray/archive/refs/tags/v${KUBESPRAY_VERSION}.tar.gz >outputs/files/${KUBESPRAY_TARBALL} || exit 1

remove_kubespray_cache_dir

fi

# Extract the tarball and apply patches

if [ ! -e ${KUBESPRAY_DIR} ]; then

echo "===> Extract ${KUBESPRAY_TARBALL}"

tar xzf outputs/files/${KUBESPRAY_TARBALL}

mv kubespray-${KUBESPRAY_VERSION} ${KUBESPRAY_DIR}

sleep 1 # ad hoc, for vagrant shared directory (공유 디렉터리 동기화 대기)

# Apply patches (버전별 패치 적용)

patch_dir=${CURRENT_DIR}/target-scripts/patches/${KUBESPRAY_VERSION}

if [ -d $patch_dir ]; then

for patch in ${patch_dir}/*.patch; do

echo "===> Apply patch $patch"

(cd $KUBESPRAY_DIR && patch -p1 < $patch) || exit 1

done

fi

fi

echo "Done."
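get-kubespray.sh chooses between three download paths based only on the shape of `KUBESPRAY_VERSION`: a commit hash, a branch name, or a release tag. The sketch below reproduces that decision in isolation. `classify_kubespray_version` is a hypothetical name, and it uses `grep -E` instead of the script's bash `[[ =~ ]]` test so that it also runs under plain `sh`.

```shell
# Hypothetical helper: classify KUBESPRAY_VERSION the way get-kubespray.sh branches on it.
classify_kubespray_version() {
  v=$1
  if printf '%s\n' "$v" | grep -Eq '^[0-9a-f]{7,40}$'; then
    echo "commit"   # 7 to 40 hex characters: git clone + git checkout
  elif [ "$v" = "master" ] || printf '%s\n' "$v" | grep -q '^release-'; then
    echo "branch"   # master or release-x.y: git clone -b
  else
    echo "tag"      # anything else: download the v<version> release tarball
  fi
}

# Usage:
# classify_kubespray_version "${KUBESPRAY_VERSION:-2.30.0}"
```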

################################

# pypi-mirror.sh : Python package mirror creation script

# Builds a PyPI mirror so Python packages can be installed in the offline environment

################################

#!/bin/bash

source config.sh # 설정 파일 로드

KUBESPRAY_DIR=./cache/kubespray-${KUBESPRAY_VERSION}

if [ ! -e $KUBESPRAY_DIR ]; then

echo "No kubespray dir at $KUBESPRAY_DIR"

exit 1

fi

source /etc/os-release # OS 정보 로드

source ./target-scripts/venv.sh # Python 가상환경 활성화

source ./scripts/set-locale.sh # 로케일 설정

umask 022 # 파일 권한 설정

echo "==> Create pypi mirror for kubespray"

#set -x

pip install -U pip python-pypi-mirror # PyPI 미러 도구 설치

DEST="-d outputs/pypi/files" # 다운로드 대상 디렉터리

PLATFORM="--platform manylinux2014_$(uname -m)" # PEP-599 (호환성 플랫폼)

#PLATFORM="--platform manylinux_2_17_$(uname -m)" # PEP-600 (최신 플랫폼)

# Kubespray 요구사항 파일 준비

REQ=requirements.tmp

#sed "s/^ansible/#ansible/" ${KUBESPRAY_DIR}/requirements.txt > $REQ # Ansible은 바이너리 패키지 제공하지 않음

cp ${KUBESPRAY_DIR}/requirements.txt $REQ

echo "PyYAML" >> $REQ # Ansible 의존성

echo "ruamel.yaml" >> $REQ # Inventory builder 의존성

# Python 버전별 바이너리 패키지 다운로드

for pyver in 3.11 3.12; do

echo "===> Download binary for python $pyver"

pip download $DEST --only-binary :all: --python-version $pyver $PLATFORM -r $REQ || exit 1

done

/bin/rm $REQ # 임시 파일 정리

echo "===> Download source packages"

# 소스 패키지 다운로드 (바이너리가 없는 경우 대비)

pip download $DEST --no-binary :all: -r ${KUBESPRAY_DIR}/requirements.txt

echo "===> Download pip, setuptools, wheel, etc"

pip download $DEST pip setuptools wheel || exit 1

pip download $DEST pip setuptools==40.9.0 || exit 1 # RHEL 호환성을 위한 구버전

echo "===> Download additional packages"

PKGS=selinux # SELinux 지원을 위해 필요 (#4)

PKGS="$PKGS flit_core" # pyparsing 빌드 의존성 (#6)

PKGS="$PKGS cython<3" # PyYAML이 Python 3.10에서 Cython 필요 (Ubuntu 22.04)

pip download $DEST pip $PKGS || exit 1

# PyPI 미러 생성

pypi-mirror create $DEST -m outputs/pypi

echo "pypi-mirror.sh done"

################################

# (Most important) download-kubespray-files.sh : downloads the files and images Kubespray needs

# 1. Build the file and image lists (using generate_list.sh)

# 2. Download the binary files

# 3. Download the container images (runs download-images.sh)

################################

#!/bin/bash

umask 022 # 파일 권한 설정

source ./config.sh # 설정 파일 로드

source scripts/common.sh # 공통 함수 로드

source scripts/images.sh # 이미지 관련 함수 로드

KUBESPRAY_DIR=./cache/kubespray-${KUBESPRAY_VERSION} # Kubespray 소스 디렉터리

if [ ! -e $KUBESPRAY_DIR ]; then

echo "No kubespray dir at $KUBESPRAY_DIR"

exit 1

fi

FILES_DIR=outputs/files # 파일 저장 디렉터리

# Function that derives the relative directory path from a URL

# Defines the directory layout for each tool according to its version

#

# kubernetes/vx.x.x : kubeadm/kubectl/kubelet

# kubernetes/etcd : etcd

# kubernetes/cni : CNI plugins

# kubernetes/cri-tools : crictl

# kubernetes/calico/vx.x.x : calico

# kubernetes/calico : calicoctl

# runc/vx.x.x : runc

# cilium-cli/vx.x.x : cilium-cli

# gvisor/{ver}/{arch} : gvisor (runsc, containerd-shim)

# skopeo/vx.x.x : skopeo

# yq/vx.x.x : yq

#

decide_relative_dir() {

local url=$1

local rdir

rdir=$url

# Kubernetes 바이너리 (kubeadm, kubectl, kubelet)

rdir=$(echo $rdir | sed "s@.*/\(v[0-9.]*\)/.*/kube\(adm\|ctl\|let\)@kubernetes/\1@g")

# etcd 바이너리

rdir=$(echo $rdir | sed "s@.*/etcd-.*.tar.gz@kubernetes/etcd@")

# CNI 플러그인

rdir=$(echo $rdir | sed "s@.*/cni-plugins.*.tgz@kubernetes/cni@")

# CRI 도구 (crictl)

rdir=$(echo $rdir | sed "s@.*/crictl-.*.tar.gz@kubernetes/cri-tools@")

# Calico 도구

rdir=$(echo $rdir | sed "s@.*/\(v.*\)/calicoctl-.*@kubernetes/calico/\1@")

# runc 런타임

rdir=$(echo $rdir | sed "s@.*/\(v.*\)/runc.${IMAGE_ARCH}@runc/\1@")

# Cilium CLI

rdir=$(echo $rdir | sed "s@.*/\(v.*\)/cilium-linux-.*@cilium-cli/\1@")

# gVisor 런타임

rdir=$(echo $rdir | sed "s@.*/\([^/]*\)/\([^/]*\)/runsc@gvisor/\1/\2@")

rdir=$(echo $rdir | sed "s@.*/\([^/]*\)/\([^/]*\)/containerd-shim-runsc-v1@gvisor/\1/\2@")

# Skopeo 도구

rdir=$(echo $rdir | sed "s@.*/\(v[^/]*\)/skopeo-linux-.*@skopeo/\1@")

# yq 도구

rdir=$(echo $rdir | sed "s@.*/\(v[^/]*\)/yq_linux_*@yq/\1@")

if [ "$url" != "$rdir" ]; then

echo $rdir

return

fi

# Calico 일반 파일들

rdir=$(echo $rdir | sed "s@.*/calico/.*@kubernetes/calico@")

if [ "$url" != "$rdir" ]; then

echo $rdir

else

echo "" # 매칭되지 않으면 루트 디렉터리

fi

}
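`decide_relative_dir` works by running the URL through a chain of `sed` substitutions and treating "the string changed" as "a rule matched". A reduced sketch with three of the rules follows. `relative_dir_for` is a hypothetical name, and it uses `sed -E` with anchored patterns rather than the original basic-regex expressions.

```shell
# Hypothetical reduced version of decide_relative_dir with three rules.
relative_dir_for() {
  url=$1
  rdir=$(printf '%s\n' "$url" | sed -E \
    -e 's@.*/(v[0-9.]+)/.*/kube(adm|ctl|let)$@kubernetes/\1@' \
    -e 's@.*/etcd-.*\.tar\.gz$@kubernetes/etcd@' \
    -e 's@.*/cni-plugins.*\.tgz$@kubernetes/cni@')

  # If no rule rewrote the URL, fall back to the root of the output directory
  if [ "$rdir" != "$url" ]; then
    echo "$rdir"
  else
    echo ""
  fi
}

# Usage:
# relative_dir_for https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubelet
```

For the kubelet URL from files.list, the first rule captures the version directory and yields `kubernetes/v1.34.3`.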

# Function that downloads a file from a URL (with retry logic)

get_url() {

url=$1

filename="${url##*/}" # URL에서 파일명 추출

rdir=$(decide_relative_dir $url) # 저장할 디렉터리 결정

if [ -n "$rdir" ]; then

if [ ! -d $FILES_DIR/$rdir ]; then

mkdir -p $FILES_DIR/$rdir # 디렉터리 생성

fi

else

rdir="." # 기본 디렉터리

fi

if [ ! -e $FILES_DIR/$rdir/$filename ]; then

echo "==> Download $url"

# 3회 재시도 로직

for i in {1..3}; do

curl --location --show-error --fail --output $FILES_DIR/$rdir/$filename $url && return

echo "curl failed. Attempt=$i"

done

echo "Download failed, exit : $url"

exit 1

else

echo "==> Skip $url" # 이미 존재하면 스킵

fi

}

# Run Kubespray's offline list generation script

generate_list() {

#if [ $KUBESPRAY_VERSION == "2.18.0" ]; then

# export containerd_version=${containerd_version:-1.5.8}

# export host_os=linux

# export image_arch=amd64

#fi

# Generate the lists of required files and images with the script bundled in Kubespray

LANG=C /bin/bash ${KUBESPRAY_DIR}/contrib/offline/generate_list.sh || exit 1

#if [ $KUBESPRAY_VERSION == "2.18.0" ]; then

# # Check the version in roles/download/default/main.yml

# snapshot_controller_tag=${snapshot_controller_tag:-v4.2.1}

# sed -i "s@\(.*/snapshot-controller:\)@\1${snapshot_controller_tag}@" ${KUBESPRAY_DIR}/contrib/offline/temp/images.list || exit 1

#fi

}

. ./target-scripts/venv.sh # Activate the Python virtual environment

generate_list # Run the list generation function

mkdir -p $FILES_DIR # Create the directory for downloaded files

# Copy the generated list files to the output directories

cp ${KUBESPRAY_DIR}/contrib/offline/temp/files.list $FILES_DIR/

cp ${KUBESPRAY_DIR}/contrib/offline/temp/images.list $IMAGES_DIR/

# Download the binary files

files=$(cat ${FILES_DIR}/files.list)

for i in $files; do

get_url $i # Download each file

done

# Download the container images

./download-images.sh || exit 1
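
To make the URL-to-directory mapping easier to follow, here is a small stand-alone sketch that applies a few rules in the spirit of decide_relative_dir to URLs taken from files.list. The function name map_url_to_dir is hypothetical, the architecture is hard-coded to arm64 as an assumption matching this lab, and `sed -E` is used instead of the escaped syntax of the script above. It is an illustration to experiment with, not the script's own code.

```shell
#!/bin/bash
# Illustrative sketch only: map_url_to_dir is a hypothetical name, not part of kubespray-offline.
# It reproduces a subset of the URL-to-directory rules so they can be tried in isolation.

IMAGE_ARCH=arm64  # assumption: matches the arm64 lab environment

map_url_to_dir() {
    local url=$1
    local rdir=$url

    # kubeadm / kubectl / kubelet -> kubernetes/<version>
    rdir=$(echo "$rdir" | sed -E 's@.*/(v[0-9.]+)/.*/kube(adm|ctl|let)@kubernetes/\1@')
    # etcd tarball -> kubernetes/etcd
    rdir=$(echo "$rdir" | sed -E 's@.*/etcd-.*\.tar\.gz@kubernetes/etcd@')
    # runc binary -> runc/<version>
    rdir=$(echo "$rdir" | sed -E "s@.*/(v[^/]*)/runc\.${IMAGE_ARCH}@runc/\1@")
    # yq binary -> yq/<version>
    rdir=$(echo "$rdir" | sed -E 's@.*/(v[^/]*)/yq_linux_.*@yq/\1@')

    # If no rule rewrote the URL, print an empty string (the file goes to the root directory)
    if [ "$url" != "$rdir" ]; then
        echo "$rdir"
    else
        echo ""
    fi
}

for u in \
    https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubelet \
    https://github.com/opencontainers/runc/releases/download/v1.3.4/runc.arm64 \
    https://github.com/mikefarah/yq/releases/download/v4.42.1/yq_linux_arm64 \
    https://get.helm.sh/helm-v3.18.4-linux-arm64.tar.gz; do
    echo "${u##*/} -> '$(map_url_to_dir "$u")'"
done
```

The Helm URL matches none of the rules, so the function prints an empty string for it, which corresponds to the file being stored directly under the files directory.
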

################################

# Contents of the file list and image lists generated by the script above

################################

cat outputs/files/files.list

https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubelet

https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubectl

https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubeadm

https://github.com/etcd-io/etcd/releases/download/v3.5.26/etcd-v3.5.26-linux-arm64.tar.gz

https://github.com/containernetworking/plugins/releases/download/v1.8.0/cni-plugins-linux-arm64-v1.8.0.tgz

https://github.com/projectcalico/calico/releases/download/v3.30.6/calicoctl-linux-arm64

https://github.com/projectcalico/calico/archive/v3.30.6.tar.gz

https://github.com/cilium/cilium-cli/releases/download/v0.18.9/cilium-linux-arm64.tar.gz

https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.34.0/crictl-v1.34.0-linux-arm64.tar.gz

https://storage.googleapis.com/cri-o/artifacts/cri-o.arm64.v1.34.4.tar.gz

https://get.helm.sh/helm-v3.18.4-linux-arm64.tar.gz

https://github.com/opencontainers/runc/releases/download/v1.3.4/runc.arm64

https://github.com/containers/crun/releases/download/1.17/crun-1.17-linux-arm64

https://github.com/youki-dev/youki/releases/download/v0.5.7/youki-0.5.7-aarch64-gnu.tar.gz

https://github.com/kata-containers/kata-containers/releases/download/3.7.0/kata-static-3.7.0-arm64.tar.xz

https://storage.googleapis.com/gvisor/releases/release/20260112.0/aarch64/runsc

https://storage.googleapis.com/gvisor/releases/release/20260112.0/aarch64/containerd-shim-runsc-v1

https://github.com/containerd/nerdctl/releases/download/v2.2.1/nerdctl-2.2.1-linux-arm64.tar.gz

https://github.com/containerd/containerd/releases/download/v2.2.1/containerd-2.2.1-linux-arm64.tar.gz

https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.23/cri-dockerd-0.3.23.arm64.tgz

https://github.com/lework/skopeo-binary/releases/download/v1.16.1/skopeo-linux-arm64

https://github.com/mikefarah/yq/releases/download/v4.42.1/yq_linux_arm64

https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.4.1/standard-install.yaml

https://github.com/prometheus-operator/prometheus-operator/releases/download/v0.84.0/stripped-down-crds.yaml
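
Before moving the outputs into the air-gapped network, it can be worth checking that every URL in files.list actually produced a file. The sketch below is one possible way to do that. check_downloads is a hypothetical helper (not part of kubespray-offline), and it matches by file name only, so it assumes file names are unique across the download tree.

```shell
#!/bin/bash
# Illustrative sketch only: check_downloads is a hypothetical helper, not part of kubespray-offline.

check_downloads() {
    local list=$1   # path to a files.list-style file (one URL per line)
    local dir=$2    # directory tree to search, e.g. outputs/files
    local missing=0 url name

    while IFS= read -r url; do
        [ -z "$url" ] && continue          # skip blank lines
        name=${url##*/}                    # same file name extraction as get_url
        if [ -z "$(find "$dir" -type f -name "$name" | head -n 1)" ]; then
            echo "MISSING: $name"
            missing=1
        fi
    done < "$list"

    return "$missing"
}

# Self-contained demo with throwaway data instead of the real outputs/ directory
demo=$(mktemp -d)
mkdir -p "$demo/files/kubernetes/v1.34.3"
touch "$demo/files/kubernetes/v1.34.3/kubelet"
printf '%s\n' \
    https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubelet \
    https://dl.k8s.io/release/v1.34.3/bin/linux/arm64/kubectl > "$demo/files.list"

check_downloads "$demo/files.list" "$demo/files" || echo "some files are missing"
rm -rf "$demo"
```

Against the real outputs, the call would look like `check_downloads outputs/files/files.list outputs/files`.
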

cat outputs/images/images.list

docker.io/mirantis/k8s-netchecker-server:v1.2.2

docker.io/mirantis/k8s-netchecker-agent:v1.2.2

quay.io/coreos/etcd:v3.5.26

quay.io/cilium/cilium:v1.18.6

quay.io/cilium/operator:v1.18.6

quay.io/cilium/hubble-relay:v1.18.6

quay.io/cilium/certgen:v0.2.4

quay.io/cilium/hubble-ui:v0.13.3

quay.io/cilium/hubble-ui-backend:v0.13.3

quay.io/cilium/cilium-envoy:v1.34.10-1762597008-ff7ae7d623be00078865cff1b0672cc5d9bfc6d5

ghcr.io/k8snetworkplumbingwg/multus-cni:v4.2.2

docker.io/flannel/flannel:v0.27.3

docker.io/flannel/flannel-cni-plugin:v1.7.1-flannel1

quay.io/calico/node:v3.30.6

quay.io/calico/cni:v3.30.6

quay.io/calico/kube-controllers:v3.30.6

quay.io/calico/typha:v3.30.6

quay.io/calico/apiserver:v3.30.6

docker.io/kubeovn/kube-ovn:v1.12.21

docker.io/cloudnativelabs/kube-router:v2.1.1

registry.k8s.io/pause:3.10.1

ghcr.io/kube-vip/kube-vip:v1.0.3

docker.io/library/nginx:1.28.0-alpine

docker.io/library/haproxy:3.2.4-alpine

registry.k8s.io/coredns/coredns:v1.12.1

registry.k8s.io/dns/k8s-dns-node-cache:1.25.0

registry.k8s.io/cpa/cluster-proportional-autoscaler:v1.8.8

docker.io/library/registry:2.8.1

registry.k8s.io/metrics-server/metrics-server:v0.8.0

registry.k8s.io/sig-storage/local-volume-provisioner:v2.5.0

docker.io/rancher/local-path-provisioner:v0.0.32

registry.k8s.io/ingress-nginx/controller:v1.13.3

docker.io/amazon/aws-alb-ingress-controller:v1.1.9

quay.io/jetstack/cert-manager-controller:v1.15.3

quay.io/jetstack/cert-manager-cainjector:v1.15.3

quay.io/jetstack/cert-manager-webhook:v1.15.3

registry.k8s.io/sig-storage/csi-attacher:v4.4.2

registry.k8s.io/sig-storage/csi-provisioner:v3.6.2

registry.k8s.io/sig-storage/csi-snapshotter:v6.3.2

registry.k8s.io/sig-storage/snapshot-controller:v7.0.2

registry.k8s.io/sig-storage/csi-resizer:v1.9.2

registry.k8s.io/sig-storage/livenessprobe:v2.11.0

registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.4.0

registry.k8s.io/provider-os/cinder-csi-plugin:v1.30.0

docker.io/amazon/aws-ebs-csi-driver:v0.5.0

docker.io/kubernetesui/dashboard:v2.7.0

docker.io/kubernetesui/metrics-scraper:v1.0.8

quay.io/metallb/speaker:v0.13.9

quay.io/metallb/controller:v0.13.9

registry.k8s.io/kube-apiserver:v1.34.3

registry.k8s.io/kube-controller-manager:v1.34.3

registry.k8s.io/kube-scheduler:v1.34.3

registry.k8s.io/kube-proxy:v1.34.3

cat outputs/images/additional-images.list

nginx:1.29.4

registry:3.0.0
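
The image lists pull from several upstream registries, which matters when deciding what the download host must be able to reach. As a rough aid, the sketch below counts entries per registry. count_by_registry is a hypothetical helper, and it assumes each entry is either fully qualified (registry/path:tag) or a bare name such as nginx:1.29.4, which Docker-style tools usually resolve to docker.io.

```shell
#!/bin/bash
# Illustrative sketch only: count_by_registry is a hypothetical helper.

count_by_registry() {
    # Reads image references on stdin and prints "<count> <registry>" per registry.
    # Assumption: an entry is either fully qualified (registry/path:tag) or a bare
    # name without any "/" (counted as docker.io). Blank lines are ignored.
    awk -F/ 'NF {
                 reg = (NF == 1) ? "docker.io" : $1
                 count[reg]++
             }
             END { for (r in count) print count[r], r }' | sort -k1,1nr -k2,2
}

printf '%s\n' \
    docker.io/library/nginx:1.28.0-alpine \
    quay.io/calico/node:v3.30.6 \
    quay.io/calico/cni:v3.30.6 \
    registry.k8s.io/pause:3.10.1 \
    nginx:1.29.4 | count_by_registry
```

With the real lists, the input could be supplied as `cat outputs/images/images.list outputs/images/additional-images.list | count_by_registry`.
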

################################

# download-images.sh : download the default Kubespray container images

# Downloads the container images based on the image list generated by generate_list.sh

################################

#!/bin/bash

source ./config.sh # Load the configuration file

source scripts/common.sh # Load common functions

source scripts/images.sh # Load image-related functions

# Check whether image download should be skipped

if [ "$SKIP_DOWNLOAD_IMAGES" = "true" ]; then

exit 0

fi

# Check that the image list file exists

if [ ! -e "${IMAGES_DIR}/images.list" ]; then

echo "${IMAGES_DIR}/images.list does not exist. Run download-kubespray-files.sh first."

exit 1

fi

# Download the container images required by Kubespray

images=$(cat ${IMAGES_DIR}/images.list)

for i in $images; do

get_image $i # Call the get_image function from scripts/images.sh

done

################################

# download-additional-containers.sh : download additional container images

# Downloads the extra images needed to build the offline environment (nginx, registry, etc.)

################################

#!/bin/bash

# Check whether image download should be skipped